In my August Physics World article, The future of nanotechnology, I argued that fears of losing control of self-replicating nanobots – resulting in a plague of grey goo – were unrealistic, because it was unlikely that we would be able to “out-engineer evolution”. This prompted the following interesting response from a reader, reproduced here with his permission:

Dr. Jones,

I am a graduate student at MIT writing an article about the work of Angela Belcher, a professor here who is coaxing viruses to assemble transistors. I read your article in Physics World, and thought the way you stated the issue as a question of whether we can “out-engineer evolution” clarified current debates about the dangers of nanotechnology. In fact, the article I am writing frames the debate in your terms.

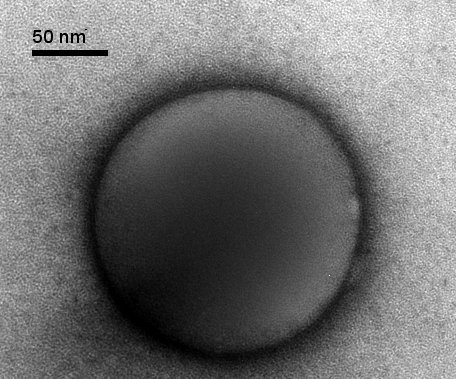

I was wondering whether Belcher’s work might change the debate somewhat. She actually combines evolution and engineering. She directs the evolution of peptides, starting with a peptide library, until she obtains peptides that cling to semiconductor materials or gold. Then she genetically engineers the viruses to express these peptides so that, when exposed to semiconductor precursors, they coat themselves with semiconductor material, forming a single crystal around a long, cylindrical capsid. She also has peptides expressed at the ends that attach to gold electrodes. The combination of the semiconducting wire and electrodes forms a transistor.

Now her viruses are clearly not dangerous. They require a host to replicate, and they can’t replicate once they’ve been exposed to the semiconducting materials or electrodes. They cannot lead to “gray goo.”

Does her method, however, suggest the possibility that we can produce things we could never engineer? Might this lead to molecular machines that could actually compete in the environment?

Any help you could provide in my thinking through this will be appreciated.

Thank you,

Kevin Bullis

Here’s my reply:

Dear Kevin,

You raise an interesting point. I’m familiar with Angela Belcher’s work, which is extremely elegant and important. I touch a little bit on this approach, in which evolution is used in a synthetic setting as a design tool, in my book “Soft Machines”. At the molecular level the use of some kind of evolutionary approach, whether executed at a physical level, as in Belcher’s work, or in computer simulation, seems to me to be unavoidable if we’re going to be able to exploit phenomena like self-assembly to the full.

But I still don’t think it fundamentally changes the terms of the debate. I think there are two separate issues:

1. is cell biology close to optimally engineered for the environment of the (warm, wet) nanoworld?

2. how can we best use design principles learnt from biology to make useful synthetic nanostructures and devices?

In this context, evolution is an immensely powerful design method, and it’s in keeping with the second point that we need to learn to use it. But even though using it might help us approach biological levels of optimality, one can still argue that it won’t help us surpass them.

Another important point revolves around the question of what is being optimised, or in Darwinian terms, what constitutes “fitness”. In our own nano-engineering, we get to specify this ourselves. In Belcher’s work, for example, the “fittest” species might be the one that binds most strongly to a particular semiconductor surface. As a measure of fitness, this is quite different from the ability to compete with bacteria in the environment, and what is optimal for our own engineering purposes is unlikely to be optimal for the task of competing in the environment.

Best wishes,

Richard
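
The point about fitness in the reply above can be illustrated with a toy sketch of directed evolution. Everything in it is an assumption made for illustration: genomes are 20-bit lists, `BINDING_TARGET` stands in for "binds the semiconductor surface", `ENVIRONMENT_TARGET` stands in for "competes in the wild", and the two targets are arbitrarily chosen to agree on only half their positions. It is not a model of Belcher's method or of any real biology; it only shows that selecting on one score need not improve another.

```python
import random

# Hypothetical fitness targets (illustrative assumptions, not real biology).
BINDING_TARGET = [1] * 20                 # what the engineer selects for
ENVIRONMENT_TARGET = [1] * 10 + [0] * 10  # what the wild would reward

def score(genome, target):
    """Count the positions where the genome matches the target."""
    return sum(g == t for g, t in zip(genome, target))

def mutate(genome, rng, rate=0.05):
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]

def evolve(generations=50, pop_size=40, seed=0):
    """Select on binding only; record (binding, environment) of the best genome."""
    rng = random.Random(seed)
    length = len(BINDING_TARGET)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Rank by the engineer's chosen fitness; the environment is never consulted.
        population.sort(key=lambda g: score(g, BINDING_TARGET), reverse=True)
        best = population[0]
        history.append((score(best, BINDING_TARGET),
                        score(best, ENVIRONMENT_TARGET)))
        # Keep the top quarter unchanged and refill with their mutated copies.
        survivors = population[: pop_size // 4]
        offspring = [mutate(rng.choice(survivors), rng)
                     for _ in range(pop_size - len(survivors))]
        population = survivors + offspring
    return history

if __name__ == "__main__":
    for generation, (binding, environment) in enumerate(evolve()):
        if generation % 10 == 0:
            print(generation, binding, environment)
```

Because the survivors are carried over unchanged, the best binding score never decreases, while the environment score is only dragged along where the two targets happen to overlap. With these particular targets, a genome that binds perfectly matches the environment target in exactly half its positions.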

To which Kevin responded:

Richard,

It does seem likely that engineering fitness would not lead to environmental fitness. Belcher’s viruses, for example, would seem to have a hard time in the real world, especially once coated in a semiconductor crystal. What if, however, someone made environmental fitness a goal? This does not seem unimaginable. Here at MIT engineers have designed sensors for the military that provide real-time data about the environment. Perhaps someday the military will want devices that can survive and multiply. (The military is always good for a scare. Where would science fiction be without thoughtless generals?)

This leads to the question of whether cells have an optimal design, one that can’t be beat. It may be that such military sensors will not be able to compete. Belcher’s early work had to do with abalone, which evolved a way to transform chalk into a protective lining of nacre. Its access to chalk made an adaptation possible that, presumably, gave it a competitive advantage. Might exposure to novel environments give organisms new tools for competing? I think now also of invasive species overwhelming existing ones. These examples, I realize, do not approach gray goo. As far as I know we’ve nothing to fear from abalone. Might they suggest, however, that novel cellular mechanisms or materials could be more efficient?

Kevin

To which I replied:

Kevin,

It’s an important step forward to say that this isn’t going to happen by accident, but as you say, this does leave the possibility of someone doing it on purpose (careless generals, mad scientists…). I don’t think one can rule this out, but I think our experience says that for every environment we’ve found on earth (from what we think of as benign, e.g. temperate climates on the earth’s surface, to ones that we think of as very hostile, e.g. hot springs and undersea volcanic vents) there’s some organism that seems very well suited for it (and which doesn’t work so well elsewhere). Does this mean that such lifeforms are always absolutely optimal? A difficult question. But moving back towards practicality, we are so far from understanding how life works at the mechanistic level that would be needed to build a substitute from scratch, that this is a remote question. It’s certainly much less frightening than the very real possibility of danger from modifying existing life-forms, for example by increasing the virulence of pathogens.

Best wishes,

Richard