Moore’s Law – and the technology it describes, the integrated circuit – has been one of the defining features of the past half century. The idea of Moore’s law has been invoked in three related senses. In its original form, it was rather a precise prediction about the rate of increase of the number of transistors to be fitted on a single integrated circuit. It’s never been a law – it’s been more of an organising principle for an industry and its supply chain – and thus a self-fulfilling prophecy. In this sense, it’s been roughly true for fifty years – but is now bumping up against physical limits.

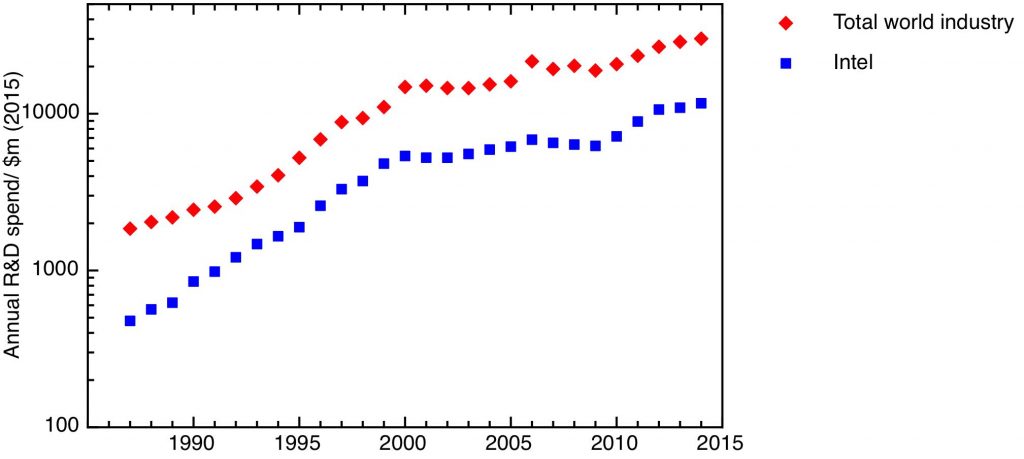

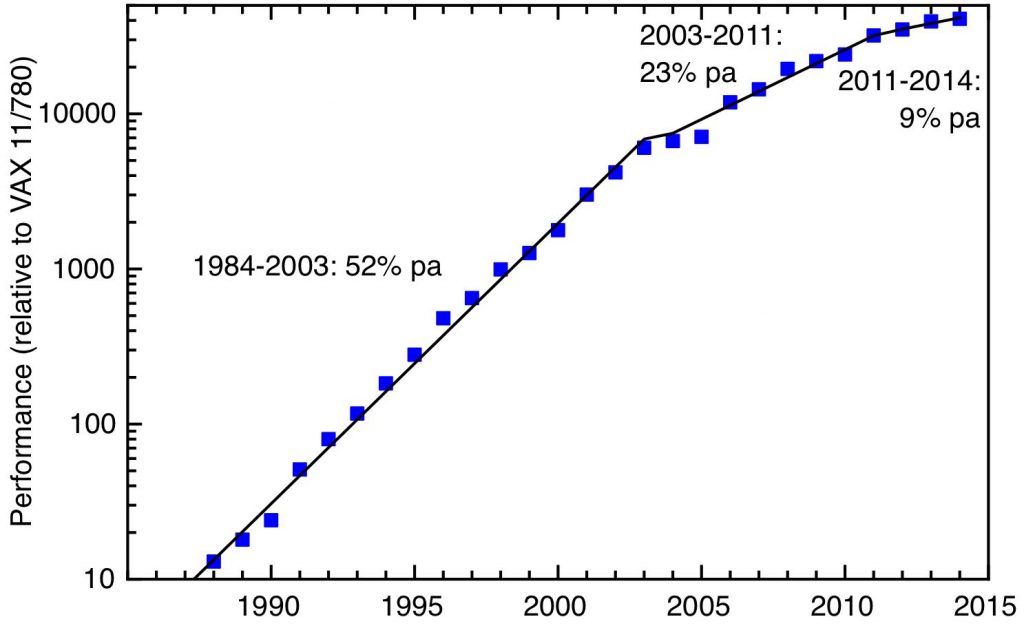

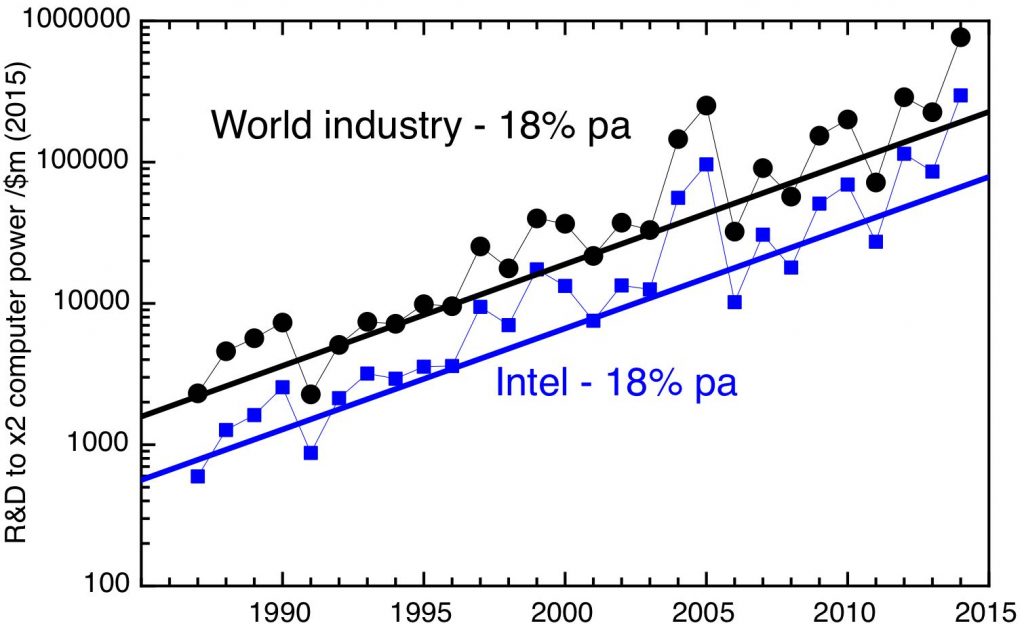

In the second sense, Moore’s law is used more loosely as a statement about the increase in computing power, and the reduction of its cost, over time. The assertion is that computing power grows exponentially. This also was true, for a while. From the mid 1980’s to the mid 2000’s, computer power grew at a rate of 50% a year compounded, doubling every two years. In this extraordinary period, there was more than a thousandfold cumulative increase over a couple of decades.

The rate of increase in raw computer power has slowed substantially over the last two decades, following the end of Dennard scaling and the limitations of heat dissipation, but this has been counteracted to some extent by software improvements and the development of architectures specialised for particular applications. For example, the Graphics Processing Units – GPUs – that have emerged as being so important for AI are highly optimised for multiplying large matrices.

In the third sense, Moore’s Law is used as a synecdoche for the more general idea of accelerating change, that the pace of change in technology in general is exponential – or even super-exponential – in character. This of course is a commonplace in airport business books. It underpins the idea of a forthcoming singularity, as a received wisdom in Silicon Valley. The idea of the singularity has been given more salience by the recent rapid progress in artificial intelligence, and the widespread view that superhuman artificial general intelligence will soon be upon us.

In this post, I want to go back to the fundamentals – how much the basic components of computing can be shrunk in size, and what the prospects for future miniaturisation are. But this does directly bear on the question of the prospects increase in computer power, which has taken on new importance, as the material basis of the AI boom. AI has brought us to a new situation; in the classical period of fastest growth of computer power (the 80s and 90s) the supply of computing power was growing exponentially, and the opportunity was to find ways of using that power. Now, with AI, it’s the demand for computing power that is growing exponentially, and the issue is whether supply can match that demand.

Moore’s Law. From Max Roser, Hannah Ritchie, and Edouard Mathieu (2023) – “What is Moore’s Law?” Published online at OurWorldinData.org. Retrieved from: ‘https://ourworldindata.org/moores-law‘ [Online Resource]. Licensed under CC-BY.

A classical depiction of Moore’s law is shown in this plot from Our World in Data – with a logarithmic y-axis, a straight line indicates an exponential growth in the number of transistors in successive generations of microprocessor. The seemingly inexorable upward progress of the line conceals a huge amount of innovation; each upward step was facilitated by research and development of new materials and new processes. It also conceals some significant discontinuities.

For example, the earlier relationship between computer power and number of transistors was broken in the mid-2000s. Before then miniaturisation brought a double benefit – it gave you more transistors on each chip, and in addition each transistor worked faster, because it was smaller. The latter relation – Dennard scaling – broke down, because heat dissipation became a limiting factor

Another fundamental change happened in 2012. The fundamental unit of the modern integrated circuit is the metal oxide silicon field effect transistor – the mosFET. This consists channel of doped silicon, with contacts at either end. The channel is coated with a thin, insulating layer of oxide, on top of which is a metal electrode – the gate. It is the gate which controls the flow of electrical current through the channel. When physical limits meant that the planar mosFET couldn’t be shrunk any more, a new design flipped the channel into the vertical plane, so the transistors took the form of fins standing up from the plane of the silicon chip. Each side of the doped silicon fin is coated by insulating oxide and a metal gate, to form the finFET.

The patterns that make the circuits in integrated circuits are made by lithography – light is shone through a patterned mask onto a photoresist, which is subsequently developed to make the pattern physical. The lower limit on the size of the features that can be patterned in this way is ultimately set by the wavelength of light used. Through the 2010’s, lithography was based on using deep ultraviolet light created by excimer lasers – with a 193 nm wavelength. By 2020, this technique had been squeezed as far as it would go, and the 5 nm process node uses extreme UV, with a wavelength of 13.5 nm. The Dutch company ASML has a monopoly on the tools to produce EUV for lithography, each of which costs more than $100 million; the radiation is created in a metal plasma, and has to be focused entirely by mirrors.

I’ve referred to the 2020 iteration of fabrication technology as the “5 nm process”, following a long-standing industry convention of characterising successive technology generations through a single length. In the days of the planar mosFET, a single parameter characterised the size of each transistor – the gate length. There was a stable relationship between the gate length and the length characterising the node number, and there was a roughly biennial decrease in the node number, from the 1982 1.5µm process that drove the explosion of personal computers, to the 2002 90 nm process of the Pentium 4. But with the replacement of the mosFET by the finFET, circuit geometry changed and the relationship between the node size and actual dimensions of the circuit broke down. In fact, the node size now is best thought of as entirely a marketing device, on the principle that the smaller the number the better.

A better way to describe progress in the scaling down of the size uses an estimate of the minimum possible area for a transistor as the product of the metal pitch, the minimum distance between horizontal interconnects, and the contacted gate pitch, the distance from one transistor’s gate to another’s.

Minimum transistor footprint (product of metal pitch and contacted gate pitch) for successive semiconductor process nodes. Data: (1994 – 2014 inclusive) – Stanford Nanoelectronics Lab, post 2017 and projections, successive editions of the IEEE International Roadmap for Devices and Systems

My plot shows the minimum transistor footprint, calculated in this way, for each process node since 1994 (the 350 nm node). The first five nodes – until 2002 – track the exponential increase in density expected from Moore’s law – the fit represents a transistor density that doubles every 2.2 years. The last three generations of planar mosFET technology – until 2009 – show a slight easing of the pace. The switch to the finFET prolonged the trend for another decade or so. But it’s clear now that the “2 nm” node, being introduced by TSMC this year, confirms a marked levelling off of the pace of miniaturisation. For this node, there has been another change of geometry – finFETs have been replaced by vertical rows of nanowires, each completely surrounded by the metal of the gate electrode – GAA, for “gate all around”.

It has to be stressed that miniaturisation of transistors is far from the only way in which computer power can be increased. A good illustration of this comes from progress in making the ultra-powerful chips that have driven the current AI boom, such as Nvidia’s H100. The H100 itself was actually fabricated by TSMC on the “5 nm” node, the first to use AMSL’s EUV light source for lithography. But, as this article explains, only a fraction of the performance improvements of the H100 over previous generations are attributed to Moore’s law. Much of the improvement comes from more efficient ways of representing numbers and carrying out the arithmetic operations that underlie artificial intelligence.

Another factor of growing importance is in the way individual silicon chips are packaged. Many modern integrated circuits, including the H100, are not a single chip. Instead several individual chips, including both logic and memory, are mounted together on a silicon substrate, with fast interconnects to join them all up. The H100 relies on an TSMC advanced packaging technology known as “Chip on Wafer on Substrate” (CoWoS), and is an example of a “System in Package”.

What does the future hold? The latest (2023) iteration of the IEEE’s International Roadmap for Devices and Systems foresees one more iteration of the Gate All Around architecture. The 2031 node is a refinement of that which stacks two mosFETs on top of each other, one with a p-doped channel, one with an n-doped channel (this combination of p- and n- doped FETs is the fundamental unit of logic gates in CMOS technology – “complementary metal oxide silicon”, hence this is referred to as CFET). This essentially doubles the transistor density. After this, no further shrinking in dimensions is envisaged, so further increases in transistor density are to be obtained by stacking multiple tiers of circuits vertically on the wafer.

So what’s the status of Moore’s law now? I return to the 3 senses in which people talk about Moore’s law – as a technical prediction about the growth in the number of transistors on an integrated circuit, as a more general statement about increasing computer power, and as a shorthand for talking about accelerating technical change in general.

In the first, and strictest, sense, we can be definitive – Moore’s law has run its course. The rate of increase in transistor density has significantly slowed since 2020, and exponential growth with an increasing time constant isn’t exponential any more. The technology in its current form has now begun to hit limits, both physical and economic.

For the second, looser, sense, things are more arguable. Available computing power is still increasing, and we see the outcomes of that in advances such as the development of large language models. But this increased power is coming, less from miniaturisation, more from software, specialised architectures optimised for particular tasks, and advanced packaging of chips in “Systems in Package”. It’s this transition that underlies the fact that Nvidia is worth more as a company than TSMC, even though it’s TSMC that actually manufactures (and packages) the chips.

But I wonder whether these approaches will be subject to diminishing returns, in contrast with the classical period of Moore’s law, when constant, large, fractional returns were repeated year after year for decades, producing orders of magnitude cumulative improvements. We are also seeing as a major source of increasing computer power the brute-force approach of just buying more and more chips, in huge, energy consuming data centres. These kind of increases in computer power are fundamentally linear, rather than exponential, in character, and yet they are trying to meet a demand – largely from AI – which is growing exponentially.

It’s very tempting to take Moore’s law as an emblem of the idea that technological change in general is accelerating exponentially, but I think this is unhelpful. Technology isn’t a single thing that improves at a given rate; there are many technologies, and at a given time some will be accelerating, some will be stagnating, some may even be regressing. As we have seen before, the exponential improvement of a single technology never continues forever; physical or economic limits show up, and growth saturates. Continuous progress needs the continuous introduction of new technologies which can take up the baton of growth from those older technologies, whose growth is stalling.

It should be stressed here that when we talk about the end of Moore’s law, the technology that we are talking about isn’t computing in general – it is this particular way of implementing machine logic, CMOS (complementary metal oxide semiconductor). There are many ways in which we can imagine doing computing – the paradox here is that CMOS has been so successful that it has crowded out alternative approaches, some of which might have significant advantages. For example, we know that CMOS logic uses several orders of magnitude more energy per operation than the theoretical minimum (the Landauer limit).

Finally, it does bear repeating what an extraordinary period the heyday of Moore’s law and Dennard scaling was, with computer power doubling every two years, sustained over a couple of decades to produce a cumulative thousand-fold increase. For those who have lived through that period, it will be difficult to resist the belief that this rate of technological progress is part of the natural order of things.