This piece was written in response to an invitation from the management consultants McKinsey to contribute to a forthcoming publication discussing the potential impacts of biotechnology in the coming century. This is the unedited version, which is quite a lot longer than the version that will be published.

The discovery of an alien form of life would be the discovery of the century, with profound scientific and philosophical implications. Within the next fifty years, there’s a serious chance that we’ll make this discovery, not by finding life on a distant planet or indeed by such aliens visiting us on earth, but by creating this new form of life ourselves. This will be the logical conclusion of using the emerging tools of nanotechnology to develop a “bottom-up” version of synthetic biology, which instead of rearranging and redesigning the existing components of “normal” biology, as currently popular visions of synthetic biology propose, uses the inspiration of biology to synthesise entirely novel systems.

Life on earth is characterised by a stupendous variety of external forms and ways of life. To us, it’s the differences between mammals like us and insects, trees and fungi that seem most obvious, while there’s a vast variety of other unfamiliar and invisible organisms that are outside our everyday experience. Yet, underneath all this variety there’s a common set of components that underlies all biology. There’s a common genetic code, based on the molecule DNA, and in the nanoscale machinery that underlies the operation of life, based on proteins, there are remarkable continuities between organisms that on the surface seem utterly different. That all life is based on the same type of molecular biology – with information stored in DNA, transcribed through RNA to be materialised in the form of machines and enzymes made out of proteins – reflects the fact that all the life we know about has evolved from a common ancestor. Alien life is a staple of science fiction, of course, and people have speculated for many years that if life evolved elsewhere it might well be based on an entirely different set of basic components. Do developments of nanotechnology and synthetic biology mean that we can go beyond speculation to experiment?

Certainly, the emerging discipline of synthetic biology is currently attracting excitement and foreboding in equal measure. It’s important to realise, though, that the most extensively promoted visions of synthetic biology don’t propose making entirely new kinds of life. Rather than aiming to make a new type of wholly synthetic alien life, what is proposed is to radically re-engineer existing life forms. In one vision, the aim is to identify independent parts or modules in living systems that could be reassembled into new, radically modified organisms that deliver some desired outcome, for example synthesising a particularly complicated molecule. In one important example of this approach, researchers at Lawrence Berkeley National Laboratory developed a strain of E. coli that synthesises a precursor to artemisinin, a potent (and expensive) anti-malarial drug. In a sense, this field is a reaction to the discovery that genetic modification of organisms is more difficult than previously thought; rather than being able to get what one wants from an organism by altering a single gene, one often needs to re-engineer entire regulatory and signalling pathways. In these complex processes, protein molecules – enzymes – essentially function as molecular switches, which respond to the presence of other molecules by initiating further chemical changes. It’s become commonplace to make analogies between these complex chemical networks and electronic circuits, and in this analogy this kind of synthetic biology can be thought of as the wholesale rewiring of the (biochemical) circuits which control the operation of an organism. The well-publicised proposals of Craig Venter are even more radical – his project is to create a single-celled organism that has been slimmed down to have only the minimal functions consistent with life, and then to replace its genetic material with a new, entirely artificial, genome created in the lab from synthetic DNA. The analogy used here is that one is “rebooting” the cell with a new “operating system”. Dramatic as this proposal sounds, though, the artificial life-form that would be created would still be based on the same biochemical components as natural life. It might be synthetic life, but it’s not alien.

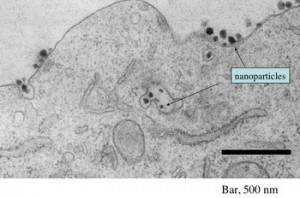

So what would it take to make a synthetic life-form that was truly alien? It seems difficult to argue that this wouldn’t be possible in principle – as we learn more about the details of the way cell biology works, we can see that it is intricate and marvellous, but in no sense miraculous – it’s based on machinery that operates on principles consistent with the way we know physical laws operate on the nano-scale. These principles, it should be said, are very different to the ones that underlie the sorts of engineering we are used to on the macro-scale; nanotechnologists have a huge amount to learn from biology. But we are already seeing very crude examples of synthetic nanostructures and devices that use some of the design principles of biology – designed molecules that self-assemble to make molecular bags that resemble cell membranes; pores that open and close to let molecules in and out of these enclosures; molecules that recognise other molecules and respond by changes in shape. It’s quite possible to imagine these components being improved and integrated into systems. One could imagine a proto-cell, with pores controlling traffic of molecules in and out of it, containing a network of molecules and machines that together added up to a metabolism, taking in energy and chemicals from the environment and using them to make the components needed for the system to maintain itself, grow and perhaps reproduce.

Would such a proto-cell truly constitute an artificial alien life-form? The answer to this question, of course, depends on how we define life. But experimental progress in this direction will itself help answer this thorny question, or at least allow us to pose it more precisely. The fundamental problem we have when trying to talk about the properties of life in general is that we only know about a single example. Only when we have some examples of alien life will it be possible to talk about the general laws, not of biology, but of all possible biologies. The quest to make artificial alien life will also teach us much about the origins of our kind of life. Experimental research into the origins of life consists of an attempt to rerun the origins of our kind of life in the early history of earth, and is in effect an attempt to create artificial alien life from those molecules that can plausibly be argued to have been present on the early earth. Using nanotechnology to make a functioning proto-cell should be an easier task than this, as we don’t have to restrict ourselves to the kinds of materials that were naturally occurring on the early earth.

Creating artificial alien life would be a breathtaking piece of science, but it’s natural to ask whether it would have any practical use. The selling point of the currently most popular visions of synthetic biology is that they will permit us to do difficult chemical transformations in much more effective ways – making hydrogen from sunlight and water, for example, or making complex molecules for pharmaceutical uses. Conventional life, including the modifications proposed by synthetic biology, operates only in a restricted range of environments, so it’s possible to imagine that one could make a type of alien life that operated in quite different environments – at high temperatures or in liquid metals, for example – opening up entirely different types of chemistry. These utilitarian considerations, though, pale in comparison to what would be implied more broadly if we made a technology that had a life of its own.