On October 27th, the UK government set out its spending plans for the next three years, in a Comprehensive Spending Review. Here I look at what this implies for the research and development budget.

The overall increase in the government’s R&D budget is real and substantial, though the £22 billion spending target has been postponed by two years

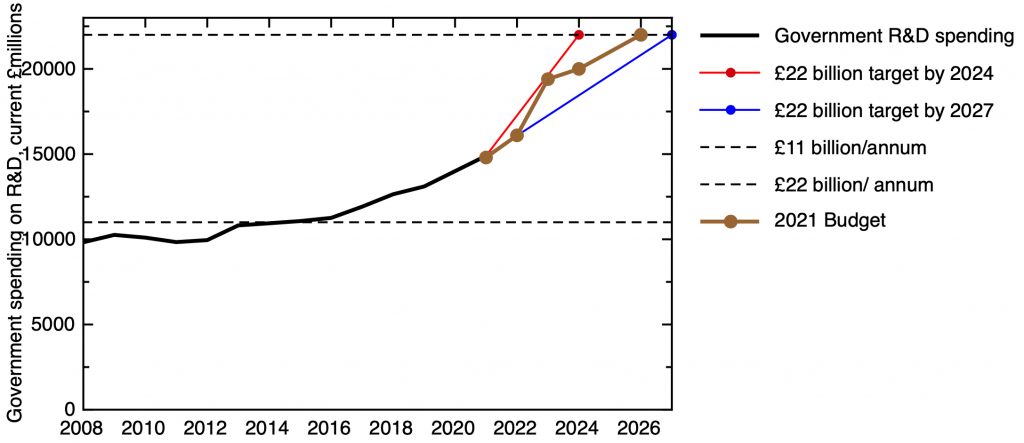

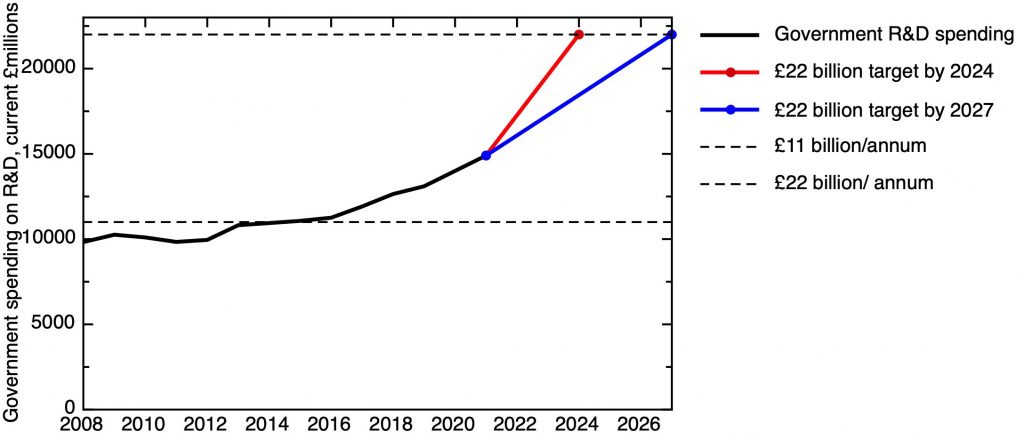

The headline result is that real, substantial increases in government R&D spending have been announced. The background to this is a promise made in the March 2020 budget that by 2024/25, government R&D spending would rise to £22 billion a year. Of course, after the pandemic, the UK’s fiscal situation is now much worse, so it isn’t surprising that the date for reaching this figure has been set back a couple of years.

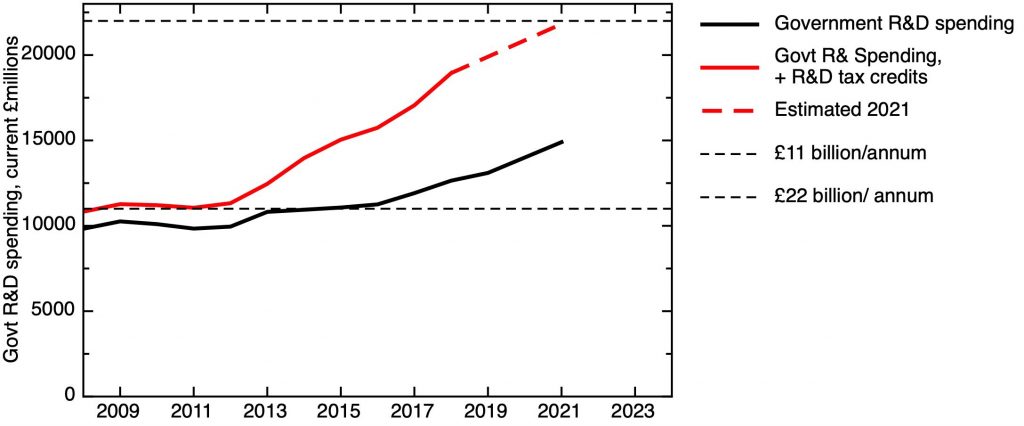

I wrote earlier about the suspicions in some policy circles that the government might attempt to game the figures to claim that the target had been reached, in my post “On the £22 billion target for UK government R&D”.

I’m glad the government hasn’t done this, and instead has set out a path which does deliver real, and substantial increases in R&D spending.

This is made clear in my first figure, which shows the trajectory of R&D spending since 2008. The Comprehensive Spending Review delivers an increase next year very much in line with increases in previous years, but the increase from 2022/23 to 2023/24 is very substantial – and in fact would put R&D spending on the trajectory that would be needed to achieve the £22 billion target by the original date of 2024/25. I understand that the slower increases after that date are connected with some technicalities about the path of the UK’s contributions to the EU Horizon R&D programme, but more on that below.

Comprehensive Spending Review commitments for total government spending on R&D, in current money, compared with historical actual spending. Sources: ONS, October 2021 HMT Red Book.

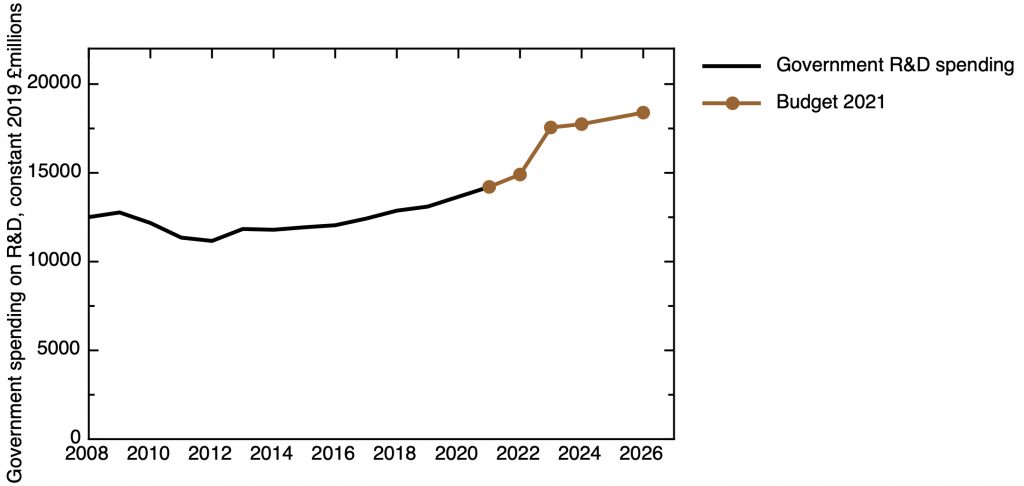

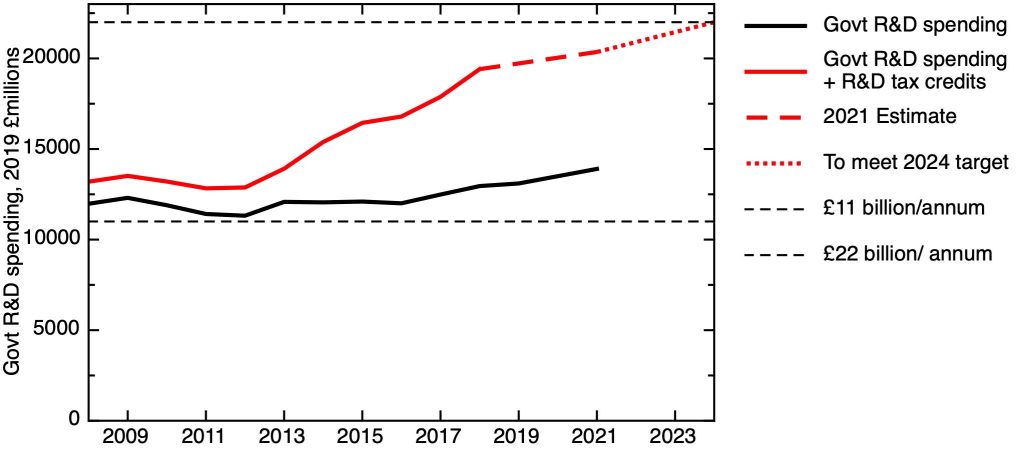

Of course, these figures are uncorrected for inflation – and given that we’re currently seeing a rate of price increases higher than we’ve seen for some time, this is likely to make a big difference. I’ve attempted to show the effect of that in the next plot, which shows both the historical and projected R&D spending expressed in constant 2019 £s. I’ve made the correction using the Consumer Price Index, and used the OBR’s latest central prediction of future CPI inflation (of course, this may turn out to be optimistic) to deflate planned future R&D spending.

This plot shows that R&D spending stagnated in the early 2010s, with a gently increasing trend beginning in 2015. In real terms R&D spending only recovered beyond the previous 2009 peak in 2018. The increase from 2022/23 to 2023/24, even after the inflation correction, is significantly greater than anything we’ve seen in the last decade.

If we look ahead to 2026/27, by when the £22 bn nominal total is planned to be reached, we can expect the real value of this sum to have been significantly eroded by inflation. On this rather uncertain projection, the 2026/27 figure would represent a 47% real increase on the previous peak in 2009.

Comprehensive Spending Review commitments for total government spending on R&D, corrected for inflation by CPI, compared with historical actual spending. Sources: ONS, October 2021 HMT Red Book, OBR Economic and Fiscal Outlook October 2021 (future CPI predictions).
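For readers who want to reproduce this kind of inflation correction, here is a minimal sketch of the method described above: build a CPI level series from annual inflation rates, then rescale each year's nominal spending to the prices of a base year. The function names are my own, fiscal years are simplified to single calendar-year keys, and the numbers are placeholders for illustration only. They are not the ONS, Red Book or OBR figures used in the plot.

```python
def cpi_levels_from_rates(start_year, start_level, annual_rates):
    """Build a CPI level series from annual inflation rates (as fractions).

    annual_rates[0] is the inflation between start_year and start_year + 1.
    """
    levels = {start_year: start_level}
    year, level = start_year, start_level
    for rate in annual_rates:
        year += 1
        level *= 1 + rate
        levels[year] = level
    return levels


def deflate_to_base_year(nominal, cpi_index, base_year):
    """Convert nominal spending to constant prices of base_year.

    nominal:   {year: spending in current prices}
    cpi_index: {year: CPI level}, covering every year in `nominal`
    """
    base_level = cpi_index[base_year]
    return {year: amount * base_level / cpi_index[year]
            for year, amount in nominal.items()}


def percent_change(new, old):
    """Percentage change from old to new."""
    return 100.0 * (new - old) / old


# Placeholder inputs, for illustration only (NOT the real data).
cpi = cpi_levels_from_rates(2019, 100.0, [0.01, 0.03, 0.04])
nominal_spend = {2019: 10.0, 2022: 12.0}  # £ billion, current prices
real_spend = deflate_to_base_year(nominal_spend, cpi, base_year=2019)

for year in sorted(real_spend):
    print(year, round(real_spend[year], 2))
print("real change, %:", round(percent_change(real_spend[2022], real_spend[2019]), 1))
```

Using forecast inflation rates for future years, as in the plot, means the real-terms figures inherit all the uncertainty of the forecast; swapping in a different set of rates is a one-line change here.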

All areas of R&D spending see real increases, but there’s a slight shift in balance to applied and departmental research

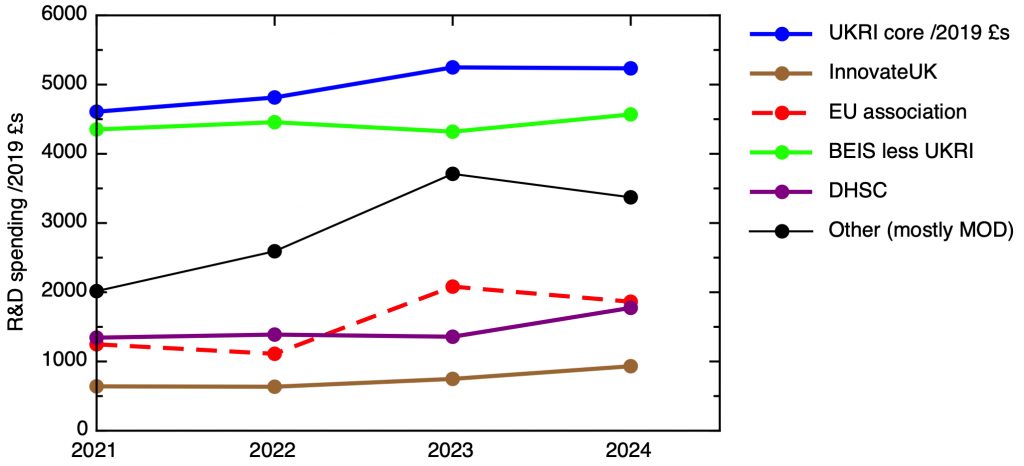

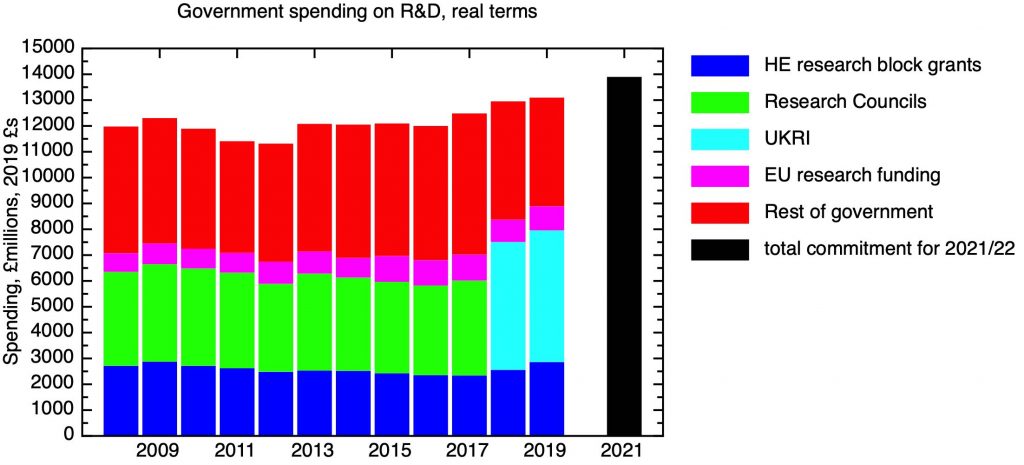

Even though most attention falls on the R&D money the government spends in universities through research councils and research block grants, this actually forms a minority of the government’s total R&D spending. Other departments – notably the Department of Health and Social Care and the Ministry of Defence – have significant R&D budgets focused on more applied research connected to their core goals. The Department for Business, Energy and Industrial Strategy holds the overall budget for the UK’s main funding agency, UK Research and Innovation, but this also includes the innovation agency Innovate UK, which has a more business focus, and various pots of money directed at mission-orientated research, most notably the Industrial Strategy Challenge Fund.

This is illustrated in my next plot, showing planned R&D spending in real terms. This shows a gentle but significant rise in what the government now calls the UKRI core research budget – mainstream research supported by the research councils and national academies, and the block grant distributed to universities by Research England and corresponding agencies in the devolved nations. The innovation agency Innovate UK is part of UKRI, but has been helpfully broken out in the figures; although this is a relatively small part of the overall picture, as we’ll see, it is due for substantial increases.

What isn’t clear to me, at the moment, is how the R&D spending currently ascribed to the rest of BEIS will be allocated. I believe this includes funds currently distributed by UKRI, but not included in the “core research” line, which would include the Industrial Strategy Challenge Fund and the Strength in Places fund. It also includes various public/private collaborations like the Aerospace Technology Institute. We await details of how these funds will be allocated; as the plot makes clear, this is a very substantial fraction of the total.

The other uncertainty surrounds the cost of associating to the EU’s research programmes, particularly Horizon Europe. In its withdrawal agreement with the EU, the UK agreed to associate with the EU’s R&D programmes, a step widely supported by the UK’s scientific community, the UK government, and our partners in Europe. But the final agreement has not yet been signed by the Commission, which is linking its finalisation with all the other outstanding issues in dispute between the UK and the EU, notably around the Northern Ireland protocol.

I understand that the commitment has been made that, if association with Horizon is stymied by these entirely unrelated problems, the full amount budgeted for the Horizon association fee will be made available to support R&D in other ways. It’s no secret that some in government would prefer this outcome, and the significant scale of the funds involved may tempt some in the scientific community to support such alternative arrangements, though it may be worth reflecting on the likely solidity of that commitment, as well as stressing the non-monetary value of the international collaborations that Horizon opens up.

Breakdown of Comprehensive Spending Review commitments for government spending on R&D by department/category, corrected for inflation. Source: October 2021 HMT Red Book, OBR Economic and Fiscal Outlook October 2021 (future CPI predictions).

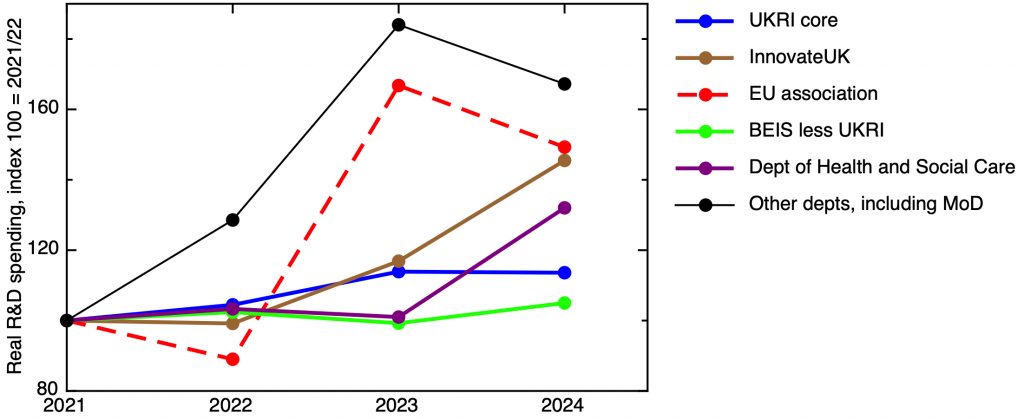

The relative scale of the increases becomes clearer in my next plot, where I’ve plotted the real terms increase relative to the 2021/22 starting point. This emphasises that in relative terms, the big winners from the budget uplift are the other departments – dominated by the Ministry of Defence – and the innovation agency Innovate UK. The Department of Health and Social Care – which holds the budget of the National Institute for Health Research – also does well.

The increase in the UKRI core research line is real, but significantly smaller. This does indicate a shift in emphasis to applied research and development, including R&D in support of other government priorities. However, one shouldn’t overstate this – there’s a lot of inertia in the system and the UKRI core research budget is still a very large proportion of the total. As a fraction of total government R&D, UKRI core research is planned to fall slightly from 32% of the total to a bit less than 30%.

Increases in spending on R&D by department/category, corrected for inflation, relative to 2021/22 value (index=100). Source: October 2021 HMT Red Book, OBR Economic and Fiscal Outlook October 2021 (future CPI predictions).
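The two calculations behind this part of the discussion, rebasing each category to an index of 100 in 2021/22 and expressing one category as a share of the total, can be sketched as follows. This is only an illustration under my own assumptions: the function names are hypothetical, and the category names and values are placeholders, not the Red Book figures.

```python
def rebase_to_index(series, base_period, base_value=100.0):
    """Rescale a series so that its value in base_period equals base_value."""
    base = series[base_period]
    return {period: base_value * value / base
            for period, value in series.items()}


def share_of_total(spending_by_category, category):
    """Fraction of total spending accounted for by one category."""
    total = sum(spending_by_category.values())
    return spending_by_category[category] / total


# Placeholder real-terms spending (£ billion), for illustration only.
category_a = {"2021/22": 4.0, "2024/25": 5.0}
indexed = rebase_to_index(category_a, base_period="2021/22")
print(indexed)

# Placeholder breakdown for a single year, again purely illustrative.
breakdown = {"category_a": 3.0, "everything_else": 7.0}
print(f"{share_of_total(breakdown, 'category_a'):.0%}")
```

Indexing makes relative growth easy to compare across categories of very different size, but it hides absolute scale. A category can grow fastest in index terms while remaining a small part of the total, which is why the share calculation is a useful companion.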

R&D spending, place, and the “levelling up” agenda

Government R&D spending in the UK is currently highly concentrated in those parts of the country that are already most prosperous – the Greater South East, comprising London, the South East and East of England. In our NESTA paper “The Missing Four Billion, making R&D work for the whole UK”, Tom Forth and I documented this imbalance. For example, London and the two subregions containing Oxford and Cambridge together account for 46% of all public and charitable spending on R&D, with 21% of the UK’s population.

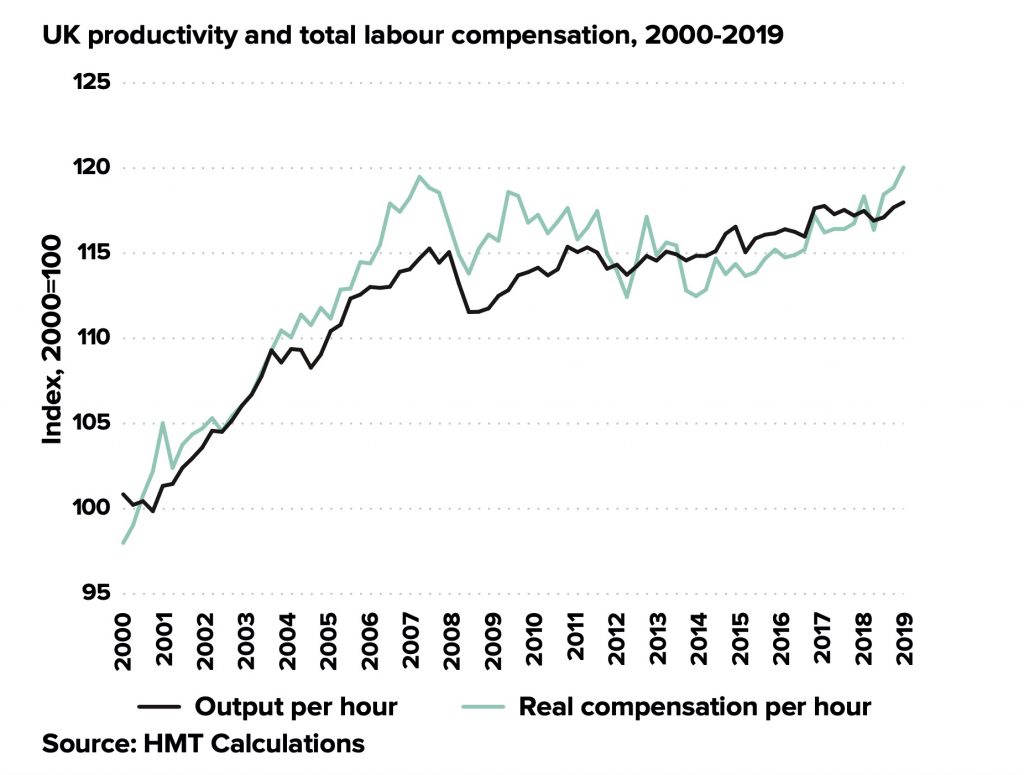

A large part of the focus of the government’s “levelling up” agenda should be on the problem of transforming the economies of those parts of the country where productivity lags. Government spending on R&D should contribute to this, by supporting private sector innovation and skills. Currently, though, the UK’s R&D imbalances work in the opposite direction.

The Comprehensive Spending Review makes a welcome recognition of this issue, stating in paragraph 3.7:

“SR21 invests a record £20 billion by 2024-25 in Research and Development (R&D). The government will ensure that an increased share of the record increase in government spending on R&D over the SR21 period is invested outside the Greater South East, and will set out the plan for doing this in the forthcoming Levelling Up White Paper. The investment will build on the support provided throughout the UK via current programmes such as the Strength in Places Fund and the Catapult network.”

This is an important commitment. It is the substantial real increase in R&D spending that gives us, for the first time in many decades, the prospect of raising the R&D intensity of the UK’s economically lagging cities and regions without compromising the existing scientific excellence found in places like Oxford and Cambridge.

How much money should be involved? The £20 billion R&D spend planned for 2024/25 represents a £5 billion increase from the current year; the business-as-usual split would suggest a bit more than half of that increase would go to the Greater South East. So, to ensure that an “increased share” goes outside the Greater South East, we should be aiming for an uplift there of the order of £3.5 – £4 billion. As the title of our paper suggests, that is a sum on a scale that could make a material difference.
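To make the arithmetic explicit, the short sketch below works out what fraction of the £5 billion increase the suggested £3.5 to £4 billion uplift would represent. The £5 billion increase and the uplift range come from the paragraph above; the function name is my own, and this is just a back-of-envelope check rather than a model of how the money would actually be allocated.

```python
def implied_share(target_uplift_bn, total_increase_bn):
    """Share of the total increase that a given regional uplift represents."""
    return target_uplift_bn / total_increase_bn


TOTAL_INCREASE_BN = 5.0          # increase to 2024/25, from the text
TARGET_UPLIFTS_BN = (3.5, 4.0)   # suggested uplift outside the Greater South East

for target in TARGET_UPLIFTS_BN:
    share = implied_share(target, TOTAL_INCREASE_BN)
    print(f"£{target} bn outside the Greater South East "
          f"is {share:.0%} of the £{TOTAL_INCREASE_BN} bn increase")
```

Set against a business-as-usual split in which somewhat less than half of the increase would go outside the Greater South East, this shows how large a change in allocation the suggested uplift implies.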

How should the money be spent in a way that delivers the outcomes we want – more productive regional economies, leading to more prosperous and flourishing communities? Paragraph 3.7 mentions two existing programmes – “Strength in Places” and the Catapult Centres. I think these are good programmes that can be built on, but they are not likely to be sufficient in scale by themselves, so more will be needed. Here are some concrete suggestions:

We await further details of the government’s proposals in the forthcoming Levelling Up White Paper. In my view, to achieve the government’s goals, we need to reconsider how decisions are made about R&D spending. Good decisions about the kinds of R&D support that work with the grain of existing local and regional economies need local knowledge of the kind that it is unreasonable to expect policy makers based centrally in Whitehall to have.

Instead, I hope that the Levelling Up White Paper will create structures through which policy makers in central government and its agencies can work with more local and regional organisations in “Innovation Deals”, to co-create research priorities that are both appropriate for specific places and contribute in a joined-up way to national priorities. The local partners in these Innovation Deals should be credible, representative organisations bringing together the private sector, local government (e.g. Mayoral Combined Authorities in our big cities), and public sector R&D organisations, including universities.

What should government R&D be for?

By focusing too much on whether the government is going to hit or miss its target of £22 billion R&D spending, we forget that the purpose of R&D spending isn’t just to hit a numerical target, but to support some wider goals, to help solve some of the deeper problems that the country faces. I’d list those goals, not necessarily in order of importance, as follows.

I think the settlement of the science budget in the Comprehensive Spending Review takes us in the right direction. It’s a good settlement for the science community, but they aren’t its ultimate beneficiaries, and to achieve these goals we will need to do some things differently. In the words of Anthony Finkelstein, “We – ‘the science community’ – have done a deal. The sustained increase in R&D funding we have secured over several budgets is firmly based on a reciprocal promise: that we will deliver for UK prosperity.”

Edited 8 November, to correct graphs 1 & 2, which originally had 2027 rather than 2026 for the new target date for £22 bn