What’s happening to the economy of the USA? Is change accelerating? Are we entering a new industrial revolution based on artificial intelligence and robotics, as the techno-optimists would have it? Or is the USA settling into a future of slow economic growth, with technological innovation declining in pace and impact compared to the innovations of the twentieth century? The latter is the thesis of economist Robert Gordon, set out in a weighty new book, The Rise and Fall of American Growth.

The case he sets out for the phenomenon of stagnation is compelling, but I don’t find his analysis of the changing character of technological innovation convincing, and this weakness leaves him unable to offer any substantive remedies for the problem.

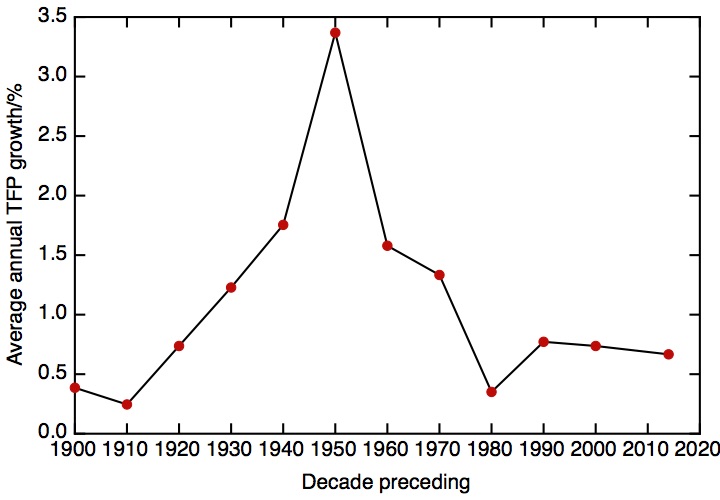

The Rise and Fall of American Growth. The average annual growth of total factor productivity – that part of economic growth not accounted for by increased inputs of labour and capital – over each decade leading up to the given date (14 years in the case of 2014). Data from R.J. Gordon, replotted from figure 16-5 of his book The Rise and Fall of American Growth.

The basis of the stagnation argument lies in the economic growth statistics. Put simply, the greatest period of economic growth in US history was in the mid-20th century.

Gordon’s more sophisticated analysis looks at total factor productivity – that part of economic growth that is not accounted for by extra inputs of labour and capital. Total factor productivity growth is generally interpreted as a measure of innovation in its widest sense. I’ve replotted his data in the figure above, which shows very graphically the rise in American growth in the early part of the twentieth century to its mid-century peak – and the subsequent fall of American growth to its current lacklustre levels. This part of the argument seems watertight: the numbers don’t lie, and the economy of the USA is simply not growing as fast as it did fifty years ago. Gordon doesn’t discuss any other countries, but slowing growth now seems to be a general phenomenon among advanced economies at the technological frontier.
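To make the idea of a residual concrete, here is the textbook growth-accounting relation. It is a sketch that assumes a Cobb-Douglas production function with output $Y$, capital $K$, labour $L$, capital share $\alpha$ and total factor productivity $A$; these symbols and the functional form are my assumptions for illustration, and Gordon’s own weighting of the inputs may differ. Taking logarithms and differentiating with respect to time gives TFP growth as whatever output growth is left over once the weighted growth of the inputs has been subtracted:

$$Y = A\,K^{\alpha}L^{1-\alpha} \quad\Longrightarrow\quad \frac{\dot{A}}{A} = \frac{\dot{Y}}{Y} - \alpha\,\frac{\dot{K}}{K} - (1-\alpha)\,\frac{\dot{L}}{L}$$

As a purely hypothetical illustration (these are not Gordon’s figures): if output grows at 3% a year, the capital stock at 4% and labour input at 1%, with $\alpha = 0.3$, then TFP growth is $3 - 0.3\times 4 - 0.7\times 1 = 1.1\%$ a year.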

The bulk of Gordon’s book is an in-depth narrative history of the way in which the great technical innovations of the early twentieth century – electricity, the automobile, radio and telephones, modern housing and urban infrastructures, scientific medicine and so on – benefitted consumers in the century from 1870. This narrative has two purposes. The first is to put texture on the bald economic statistics. Gordon states flatly that “every source of growth can be reduced back to the role of innovation and technological change”, and the narrative demonstrates, across sector after sector, how new technologies and new modes of organisation drove spectacular productivity growth.

The second purpose is to refute one frequently heard argument against the stagnation thesis: that economic statistics such as GDP do not capture all the benefits that modern information and communication technologies bring to consumers today, so the tailing off of economic growth that the figures show is misleading. Gordon concedes that modern innovation does indeed lead to consumer benefits not captured in the GDP figures… but the same happened in the past, too, and the unmeasured benefits of earlier new technologies were very much greater than those of today’s technologies. The benefits we now gain from wider access to educational and entertainment resources through the internet are real, despite not being fully captured in GDP – but these unmeasured benefits are eclipsed by the benefits that accrued to our grandparents from no longer facing a life of hard physical drudgery and a high probability of early death for themselves or their loved ones.

Where Gordon’s book is much less effective is in its account of the technical character of the innovations whose effects he describes, because he focuses on what the consumer sees, not on the substructure of underpinning inventions that makes the visible inventions possible. (Vaclav Smil’s books Creating the Twentieth Century and Transforming the Twentieth Century give a much better account of the nature and importance of those innovations.) This weakness makes his prognosis for the future unconvincing.

This weakness has been picked up by some reviewers. For example, Tyler Cowen, in Is Innovation Over? The Case Against Pessimism, takes the entirely reasonable position that we simply don’t know what’s going to turn up in the future by way of new inventions and new innovations. Diane Coyle comments that “One issue is the extent to which he ignores all but a limited range of digital innovation; low carbon energy, automated vehicles, new materials such as graphene, gene-based medicine etc. don’t feature”.

I think Coyle is exactly right to highlight Gordon’s very narrow focus on software as the locus of innovation today, and I’ll return to this point shortly. However, I would also point out that the new technologies Coyle highlights are (with the possible exception of low carbon energy) still at the development stage. We don’t yet know what their impact will be; we don’t even know whether they will reach the market and fulfil their promise.

What determines which inventions happen, and whether any given invention actually goes anywhere and is developed to the point where it becomes an innovation that makes a difference to the economy? A great deal has to do with the wider institutions and cultures of innovation. The period covered in Gordon’s book wasn’t just one in which invention flourished, but one that saw the blossoming of a whole series of social innovations whose purpose was to support invention and technological innovation. Gordon mentions a few of these – Land Grant colleges, the National Bureau of Standards and Edison’s lab in Menlo Park, for example – but there’s little sense of the collective institutional landscape of innovation that this wave of social innovation produced, let alone of the way that landscape has changed and evolved over the century.

The 1870s saw the beginning of the first of these social innovations, the research university as we know it today. In the USA (consciously echoing similar initiatives in countries like the UK and Germany), Land Grant colleges were founded by Act of Congress to promote learning related to “agriculture and the mechanic arts”. The resulting institutions include great state universities like Berkeley, as well as Cornell and MIT, while other private universities, like Stanford, were founded through large-scale philanthropy. Late nineteenth century vocational schools included the ancestors of Caltech and Carnegie Mellon, as well as countless others across the country, which provided the technically educated workforce on which industrialisation and urbanisation depended.

The second social innovation, the industrial research and development laboratory, was perhaps even more significant. Edison’s Menlo Park laboratory is often thought of as a prototype industrial R&D lab, but the model only reached full scale in the first half of the twentieth century, with laboratories such as the General Electric Research Laboratory in Schenectady and du Pont’s Experimental Station in Wilmington combining quite fundamental studies in physics and chemistry with the development of revolutionary new products. This model was taken to even greater heights after the war, most notably at Bell Labs, which produced both Nobel prize-winning fundamental science and world-changing technologies, like the transistor and the computer operating system UNIX.

Finally, the US government itself became increasingly involved in large-scale R&D work. The importance of standards and metrology was recognised in the 1901 foundation of the National Bureau of Standards, but it was the military that provided the driving force for direct government involvement in technological innovation. The Naval Research Laboratory, founded in 1923, was a forerunner of a massive network of national laboratories and research agencies, founded in the heat of the Second World War but growing further through the Cold War and the space race. The government labs were closely bound up with the research universities and the industrial R&D labs: MIT’s Radiation Laboratory was nominally part of the university, but grew into a massive enterprise developing radar and other electronics for the military. And when the US government chose Oak Ridge as the location for the R&D needed to produce materials for nuclear weapons at scale, it was to du Pont that it turned to set up and run the lab.

Gordon does have a theory about the evolution of the innovation landscape over the century, based on his idea that there is a “U-shaped curve” of individualism in invention. According to this, in the 19th century most inventions were made by individuals; as the twentieth century went on, most inventions were made within organisations such as R&D labs. Gordon’s thesis is that the trend has since swung back towards individual inventors. The first half of this proposition must surely be true, if only because the organisations that might have employed inventors hadn’t yet been invented. But for the second half, the only actual evidence that Gordon presents, from patent statistics, directly contradicts his theory, showing no recent growth in patents awarded to individuals.

The origin of Gordon’s conviction that we are living in a new age of the individual, independent inventor lies in the widespread conventional wisdom that the modern information and communication technology industry is the product of such lone geniuses. Gordon names three of these as exemplars of the individual inventors who created the ICT revolution (“the third industrial revolution”): Bill Gates, Steve Jobs and Mark Zuckerberg. But is it right to think of these undoubtedly hugely gifted figures as fitting the straightforward mould of the individual inventor?

The reality is more complex and more interesting. Bill Gates, who got his start in business by writing, with Paul Allen, an interpreter for the already well-established programming language BASIC for the Altair home computer, was clearly a gifted programmer. His real brilliance, though, lay in his early understanding of how important standards, even de facto ones, would be for this emerging industry, and in his ruthlessness in seizing control of those standards. It took entrepreneurial genius to buy in a 16-bit operating system modelled on CP/M and sell it on to IBM for their PC, but by that time Microsoft was already an established business, which Gates went on to build up into an organisation that industrialised the process of writing software.

Unlike Gates, Steve Jobs was not a programmer or an engineer, and the Apple computer that launched his career wasn’t his invention – it was the creation of Steve Wozniak. The Apple wasn’t the first personal computer, but it was perhaps the first PC that looked like a consumer product rather than the outcome of a hobbyist’s kit. Jobs wasn’t an inventor – it’s tempting, but insufficient, to call him a marketeer, and he was hugely successful in that role. He wasn’t in a formal sense a designer either, though it’s clear that his design sensibility was central to his success. Rather he was a visionary of what technology would be able to do for consumers, and a leader who could create and run the teams that could deliver that vision.

Of these three, Mark Zuckerberg is perhaps the closest match to the model of a lone inventor, with his conception and implementation of the social media platform Facebook. But this development, launched in 2004 and opened up to the general public in 2006, comes too late in Gordon’s chronology to have had an impact on his “third industrial revolution”.

If the names Gordon does cite don’t support his theory as well as he claims, perhaps one can learn even more about its weaknesses from the names he doesn’t cite. For example, here are three other figures who also have a claim to a part in the creation of the ICT revolution: Jack Kilby, Robert Noyce and Frank Wanlass. Kilby (at Texas Instruments) and Noyce (at Fairchild Semiconductor) independently invented the integrated circuit, thus setting in motion the half-century of electronic miniaturisation that has driven Moore’s law, and Wanlass (also at Fairchild Semiconductor) invented CMOS (“complementary metal-oxide-semiconductor”) technology, which underlies modern mass-produced microprocessors. One could choose other names, of course, but the point I want to make is that the information technology revolution depended on hardware first and foremost, and this hardware needed to be invented and developed.

Of course, it is the cumulative progress of hardware developments that is summarised in Moore’s law, which describes the exponential growth in computing power through the decades of the information age. Gordon discusses Moore’s law extensively, pointing out, correctly, that it has started to stall (as discussed in this recent piece in Nature, “The chips are down for Moore’s Law”). But for all the attention he gives to the invention and innovation that underlie this extraordinary story of rapid technological progress, one might take away the impression that there’s a semiconductor fairy who makes Moore’s law possible (and who has perhaps got a little tired in recent years). There is, of course, no semiconductor fairy: every advance along Moore’s law is itself the result of all sorts of inventions, made in university laboratories, in the R&D labs of companies like Intel and IBM, and in the many companies which supply these chip makers with fabrication equipment, materials and chemicals. Some of these innovations are the incremental products of large-scale R&D operations, and quite a few are in themselves significant (and very clever) ideas that ought to have made their inventors famous: phase shift masks, chemically amplified resists and FinFET/tri-gate transistors, to name but three. This is a world as far away from the 19th century individual inventor as it is possible to imagine.

By falling for the self-serving myth-making of Silicon Valley, Gordon misses the chance to learn the right lessons from the ICT revolution. There’s a whole ecology of invention: a complex web of previous inventions that make possible what comes next, a collective sense of what new inventions are needed to sustain the overall dynamic of innovation, and a will to focus resources and effort on achieving those inventions. In the case of Moore’s law, it was another social innovation, the International Technology Roadmap for Semiconductors, that orchestrated this ecology, but in few other areas of technological progress have matters been so effectively organised outside the bounds of a single monolithic organisation.

Gordon makes a convincing case for the reality of fast mid-century productivity growth and current relative stagnation. But his superficial treatment of how technological innovation actually happens means that he is unable to say much about the causes of this stagnation, and without an analysis of those causes he isn’t in a position to propose any remedies. I don’t disagree with his diagnosis that “The timing of the stream of innovations before and after 1970 is the fundamental cause of the rise and fall of American growth”. But on the very next page (p. 643) he concludes that “the fostering of innovation is not a promising avenue for government policy intervention, as the American innovation machine operates healthily on its own.” How well is this innovation machine really operating? We should apply the test “by their fruits ye shall know them”: if the innovation machine is not producing the economic growth we want (not to mention the other important social goods for which we need new innovation, such as sustainable and affordable sources of low carbon energy), then perhaps it isn’t so healthy after all.

One way to begin an analysis of the source of the slowdown in innovation in the second half of the twentieth century would be to look at the institutions that provide the engine for the American innovation machine, and at how they have changed. To start with the research universities, the picture seems healthy: the research universities of the USA are as globally predominant now as they have ever been, despite rising competition from Europe and the Far East. Perhaps the biggest institutional change here has been the consequence of the 1980 Bayh-Dole Act, which allowed universities to retain ownership of inventions arising from Federally funded research carried out in their laboratories.

An extensive network of government laboratories still exists, though the reach of agencies like NASA has been reduced. I’m not myself qualified to comment on how the role of the national labs has changed, not least because much of their work remains cloaked in the secrecy of national security.

Where there does seem to have been large-scale change is in corporate research and development laboratories. The great post-war R&D labs like Bell Labs withered and died in response to changes in US corporate structure. Bell Labs was sustained by the monopoly rents earned by its parent company; the deregulation of the 1980s and the break-up of the Bell System put paid to those. Other corporate laboratories in the USA do survive; what is not clear is whether the increasing emphasis in US corporate governance on maximising shareholder value has changed either the resources invested or the balance between short-term development and longer-term research. And what are in effect new corporate laboratories have emerged, notably Google’s, sustained by different types of monopoly rent. I’d like to see an evidence-based study of how these changes have played out.

Meanwhile we have seen entirely new institutions of innovation, such as venture capital and the tech accelerator. Asset bubbles have provided new sources of finance to exploit new technologies, often originally developed by the state, as described in Bill Janeway’s first-hand account “Doing Capitalism in the Innovation Economy”. And as I’ve described, the National Technology Roadmap for Semiconductors, subsequently globalised as the International Technology Roadmap for Semiconductors, constituted an entirely new institution of innovation, which for more than two decades coordinated and drove the innovation needed to fulfil Moore’s law, though this period has now come to an end.

It’s clear that, while the institutions of innovation in the USA have been changing, the rate at which innovation turns into economic growth has been falling. Is this where we should look to find the origins of our current stagnation? I don’t know. But there’s one broad point on which I think Gordon is right. His chapter 16 concludes that what drove the massive burst of productivity in the 1940s and 1950s was the New Deal and the government-directed wartime economy. It would be depressing to think that the only thing that can drive innovation and growth in our capitalist economies is a war, whether hot or cold, but perhaps we do need some sense of national, or global, purpose that goes beyond the workings of the market to recapture the glory days of American growth.