This is the second post in a series of three, considering the background to the forthcoming UK Government Semiconductor Strategy.

In the first part, The UK’s place in the semiconductor world, I discussed the new global environment, in which a tenser geopolitical situation has revived a policy climate, around the world, that is much more favourable to large-scale government interventions in the industry. I also sketched the global state of the semiconductor industry and tried to quantify the UK’s position in it.

Here, I discuss the past and future of semiconductors, mentioning some of the important past interventions by governments around the world that have shaped the current situation, and I speculate on where the industry might be going in the future.

Finally, in the third part, I’ll ask where this leaves the UK, and speculate on what its semiconductor strategy might seek to achieve.

Active industrial policy in the history of semiconductors

The history of the global semiconductor industry involves a dance between governments around the world and private companies. Contrary to the predominantly libertarian ideology of Silicon Valley, the industry wouldn’t have come into existence, or developed into the form we now know, without a series of major and expensive interventions by governments across the world.

But there is an opposite idea, to caricature the claims of some on the left: that it was governments that created the consumer electronic products we all rely on, and private industry has simply collected the profits. This view doesn’t recognise the massive efforts private industry has made, spending huge sums on the research and development needed to perfect manufacturing processes and bring them to market. Taking the USA alone, in 2022 the US government spent $6 billion on semiconductor R&D, compared to private industry’s $50.2 billion.

The semiconductor industry emerged in the 1960s in the USA, and in its early days more than half of its sales were to the US government. This was an early example of what we would now call “mission driven” innovation, motivated by a “moonshot project”. The “moonshot project” of the 1960s was driven by a very concrete goal: to be able to drop a half-tonne payload anywhere on the Earth’s surface, with a precision measured in hundreds of metres.

Semiconductors were vital to achieving this goal: the first mass-produced computers based on integrated circuits were developed as the guidance systems of Minuteman intercontinental ballistic missiles. Of course, despite its military driving force, this “moonshot” produced important spin-offs: the development of space travel to the point at which a series of manned missions to the moon were possible, and increasing civilian applications of the much cheaper, more powerful and more reliable computers that solid-state electronics made possible.

The USA is where the semiconductor industry started, but the industry went on to play a central role in three East Asian development miracles. The first country to exploit this new technology was Japan. While the USA was exploiting the military possibilities of semiconductors, Japan focused on their application in consumer goods.

By the early 1980s, though, Japanese companies were producing memory chips more efficiently than their US rivals, while Nikon took a leading position in the photolithography equipment used to make integrated circuits. In part the Japanese competitive advantage was driven by their companies’ manufacturing prowess and their attentiveness to customer needs, but the US industry complained, not entirely without justification, that their success was built on the theft of intellectual property, access to unfairly cheap capital, the protection of home markets by trade barriers, and government-funded research consortia bringing together leading companies. These are recurring ingredients of industrial policy as practised by East Asian developmental states, later used successfully in Taiwan and Korea, and now being deployed on a continental scale by China.

An increasingly paranoid USA’s response to this threat from Japan to its technological supremacy in semiconductors was to adopt some industrial strategy measures itself. The USA relaxed its stringent anti-trust laws to allow US companies to collaborate in R&D through a consortium called SEMATECH, half funded by the federal government. SEMATECH was founded in 1987, and in its first five years of operation was supported by $500m of federal funding, giving the US semiconductor industry some new self-confidence.

Meanwhile, both Korea and Taiwan had identified electronics as a key sector through which to pursue their export-focused development strategies. For Taiwan, a crucial institution was the Industrial Technology Research Institute (ITRI), in Hsinchu. Since its foundation in 1973, ITRI had been instrumental in helping Taiwan’s industrial base move closer to the technology frontier.

In 1985 the US-based semiconductor executive Morris Chang was persuaded to lead ITRI, using this position to create a national semiconductor industry, in the process spinning out the Taiwan Semiconductor Manufacturing Company. TSMC was founded as a pure-play foundry, contract manufacturing integrated circuits designed by others and focusing on optimising manufacturing processes. This approach has been enormously successful, and has led TSMC to its globally leading position.

Over the last decade, China has been aggressively promoting its own semiconductor industry. The 2015 “Made in China 2025” plan identified semiconductors as a key sector for the development of high-tech manufacturing, setting a target of 70% self-sufficiency by 2025, and a dominant position in global markets by 2045.

Cheap capital for developing semiconductor manufacturing was provided through the state-backed National Integrated Circuit Industry Investment Fund, amounting to some $47 bn (though it seems the record of this fund has been marred by corruption allegations). The 2020 directive “Several Policies for Promoting the High-quality Development of the Integrated Circuit Industry and Software Industry in the New Era” reinforced these goals with a package of measures including tax breaks, soft loans, R&D and skills policies.

While the development of the semiconductor industry in Taiwan and Korea was generally welcomed by policy-makers in the West, a changing geopolitical climate has led to much more anxiety about China’s aspirations. The USA has responded with an aggressive programme of bans on exports of semiconductor manufacturing tools, such as high-end lithography equipment, to China, and has persuaded its allies in Japan and the Netherlands to follow suit.

Industrial policy in support of the semiconductor industry hasn’t been restricted to East Asia. In Europe a key element of support has been the development of research institutes bringing together consortia of industry and academia; perhaps the most notable of these is IMEC in Belgium. Meanwhile, the cluster of companies that formed around the electronics company Philips in Eindhoven now includes ASML, the dominant player in equipment for extreme UV lithography.

In Ireland, policies in support of inward investment, including both direct and indirect financial inducements and the development of institutions to support skills and innovation, persuaded Intel to base its European operations in Ireland. As a result, this small, formerly rural, nation has become the second largest exporter of integrated circuits in Europe.

In the UK, government support for the semiconductor industry has gone through three stages. In the postwar period, the electronics industry was a central part of the UK’s Cold War “Warfare State”, with government institutions like the Royal Signals and Radar Establishment at Malvern carrying out significant early research in compound semiconductors and optoelectronics.

The second stage saw a more conscious effort to support the industry. In the mid-to-late 1970s, a realisation of the potential importance of integrated circuits coincided with a more interventionist Labour government. The government, through the National Enterprise Board, took a stake in Inmos, a start-up making integrated circuits in South Wales. The Conservative government elected in 1979 was much less interventionist than its predecessor, but two important interventions were made in the early 1980s.

The first was the Alvey Programme, launched in 1983: an ambitious £350m programme of joint industry/government research covering a number of areas in information and communication technology. Its results were mixed. It played a significant role in the development of mobile telephony, and laid some important foundations for the development of AI and machine learning. In semiconductors, however, the companies it supported, such as GEC and Plessey, were unable to develop a lasting competitive position in semiconductor manufacturing, and they no longer survive.

The second intervention arose from a public education campaign run by the BBC: a small Cambridge-based microcomputer company, Acorn, won the contract to supply BBC-branded personal computers in support of this campaign. The large market created in this way later gave Acorn the headroom to move into the workstation market with reduced instruction set computing (RISC) architectures, from which the microprocessor design house ARM was spun out.

In the third stage, the UK government adopted a market fundamentalist position. This involved a withdrawal of government support for applied research, the run-down of government laboratories like RSRE, and a position of studied indifference to the acquisition of UK technology firms by overseas rivals. Major UK electronics companies, such as GEC and Plessey, collapsed following some ill-judged corporate misadventures. Inmos was sold, first to Thorn, then to the Franco-Italian group SGS-Thomson. Inmos left a positive legacy, with many who had worked there going on to participate in a Bristol-based cluster of semiconductor design houses. The Inmos manufacturing site survives as Newport Wafer Fab, currently owned by the Dutch-based, Chinese-owned company Nexperia, though its future is uncertain following a UK government ruling that Nexperia should divest its shareholding on national security grounds.

This focus on the role of interventions by governments across the world at crucial moments in the development of the industry shouldn’t overshadow the huge investments in R&D made by private companies around the world. A sense of the scale of these investments is given by the figure below.

R&D expenditure in the microelectronics industry, showing Intel’s R&D expenditure, and a broader estimate of world microelectronics R&D including semiconductor companies and equipment manufacturers. Data from the “Are Ideas Getting Harder to Find?” dataset on Chad Jones’s website. Inflation corrected using the US GDP deflator.

The exponential increase in R&D spending up to 2000 was driven by a similarly exponential increase in worldwide semiconductor sales. In this period, there was a remarkable virtuous circle of increasing sales, leading to increasing R&D, leading in turn to very rapid technological developments, driving further sales growth. In the last two decades, however, growth in both sales and R&D spending has slowed down.


Global semiconductor sales in billions of dollars. Plot from “Quantum Computing: Progress and Prospects” (2019), National Academies Press, which uses data from the Semiconductor Industry Association.

Possible futures for the semiconductor industry

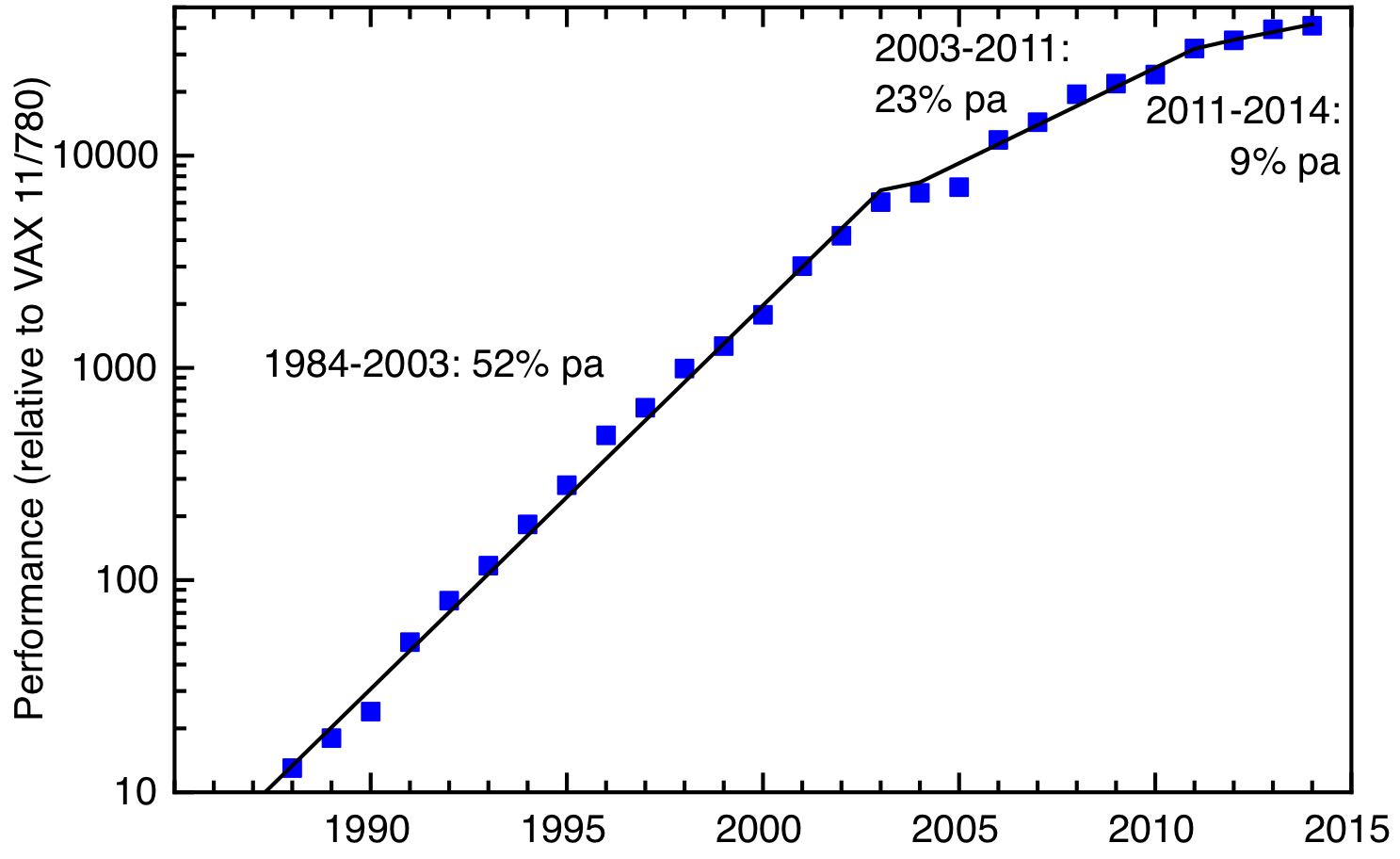

The rate of technological progress in integrated circuits between 1984 and 2003 was remarkable, and unprecedented in the history of technology. It drove an exponential increase in microprocessor computing power, which grew by more than 50% a year. This growth arose from two factors. The first, as is well known, is that the number of transistors on a silicon chip grew exponentially, as predicted by Moore’s Law. This was driven by many unsung, but individually remarkable, technological innovations in lithography (to name just two examples, phase shift lithography and chemically amplified resists), allowing smaller and smaller features to be manufactured.
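To give a feel for what “more than 50% a year” means, here is a minimal Python sketch that compounds that rate over the 19 years from 1984 to 2003. The 50% figure is the lower bound quoted above and the function name is purely illustrative; the result is an order-of-magnitude guide, not a reconstruction of the underlying benchmark data.

```python
# Minimal sketch: what "more than 50% a year" compounds to over 1984-2003.
# The 50% rate is the post's lower bound; the year span is taken from the text.

def compound_growth(annual_rate: float, years: int) -> float:
    """Overall multiplication factor after `years` of growth at `annual_rate`."""
    return (1.0 + annual_rate) ** years

years = 2003 - 1984  # 19 years
factor = compound_growth(0.50, years)
print(f"{years} years at 50%/yr -> about {factor:,.0f}x")
```

Growth at 50% a year doubles performance roughly every 1.7 years, so nineteen years of it multiplies performance by a factor of around two thousand.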

The second factor is less well known: through a phenomenon known as Dennard scaling, as transistors get smaller they can switch faster without their power density increasing. Dennard scaling reached its limit around 2004, as the heat generated by microprocessors became a limiting factor. After 2004, microprocessor computing power increased at a slower rate, around 23% a year, driven by increasing the number of cores and parallelising operations. This approach itself ran into diminishing returns after 2011.
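The textbook version of Dennard scaling can be made concrete with a little arithmetic. The Python sketch below assumes the idealised scaling rules (shrink every linear dimension by a factor k, cut the supply voltage by the same factor, raise the clock frequency by it) and uses the standard expression for dynamic switching power, P = CV²f. It shows why power density stays constant while voltage keeps falling, and how it would grow as k² if voltage stopped falling while clock speeds kept rising; that second scenario is a hypothetical illustration of the heat problem, not a model of any real chip.

```python
# Idealised Dennard scaling (a textbook simplification, not a device model).
# Dynamic switching power of one transistor: P = C * V**2 * f.
# Shrinking linear dimensions by a factor k scales capacitance C by 1/k,
# lets the supply voltage V fall by 1/k, and lets the frequency f rise by k.

def power_density_factor(k: float, voltage_scales: bool = True) -> float:
    """Relative change in power per unit area after shrinking features by k."""
    c = 1.0 / k                             # capacitance shrinks with dimensions
    v = 1.0 / k if voltage_scales else 1.0  # voltage frozen once scaling breaks
    f = k                                   # smaller transistors switch faster
    power_per_transistor = c * v**2 * f
    area_per_transistor = 1.0 / k**2        # k**2 more transistors per unit area
    return power_per_transistor / area_per_transistor

print(power_density_factor(2.0))                        # classic regime: 1.0
print(power_density_factor(2.0, voltage_scales=False))  # voltage stuck: 4.0
```

In practice, once supply voltages could no longer be reduced much further, clock frequencies stopped rising instead, which is one way of seeing why the gains after 2004 had to come from more cores rather than faster ones.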

Currently we are seeing continued reductions in feature sizes, together with new transistor designs, such as finFETs, which in effect allow more transistors to be fitted into a given area by building them side-on. But further increases in computer power are increasingly being driven by optimising processor architectures for specific tasks, for example graphics processing units and specialised chips for AI, and by simply multiplying the number of microprocessors in the server farms that underlie cloud computing.

Slowing growth in computer power. The growth in processor performance since 1988. Data from figure 1.1 in Computer Architecture: A Quantitative Approach (6th edn) by Hennessy & Patterson.

It’s remarkable that, despite the massive increase in microprocessor performance since the 1970s, and major innovations in manufacturing technology, the underlying mode of operation of microprocessors remains the same. This is known by the shorthand of CMOS, for Complementary Metal Oxide Semiconductor. Logic gates are constructed from complementary pairs of field effect transistors, each consisting of a channel in doped silicon whose conductance is modulated by an electric field applied across an insulating oxide layer from a metal gate electrode.
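The point of the “complementary pairs” can be illustrated with a switch-level model of a single gate. The Python sketch below treats each transistor as an ideal on/off switch and builds a two-input NAND gate, a common CMOS building block chosen here purely as an example; the function names are hypothetical. Because the p-type pull-up network and the n-type pull-down network are complementary, exactly one of them conducts for any input, so, ideally, no current flows from supply to ground once the gate has switched.

```python
# Switch-level sketch of a two-input CMOS NAND gate (an idealisation:
# transistors are perfect on/off switches; no timing or analogue behaviour).
# An n-type FET conducts when its gate is 1; a p-type FET when its gate is 0.

def nmos_on(gate: int) -> bool:
    return gate == 1

def pmos_on(gate: int) -> bool:
    return gate == 0

def cmos_nand(a: int, b: int) -> int:
    # Pull-up network: two p-type FETs in parallel between supply and output.
    pull_up = pmos_on(a) or pmos_on(b)
    # Pull-down network: two n-type FETs in series between output and ground.
    pull_down = nmos_on(a) and nmos_on(b)
    # Complementary design: exactly one network conducts for every input,
    # so there is no static path from supply to ground.
    if pull_up == pull_down:
        raise RuntimeError("networks are not complementary")
    return 1 if pull_up else 0

for a in (0, 1):
    for b in (0, 1):
        print(a, b, cmos_nand(a, b))
```

The same pattern, a p-type network that is the logical dual of an n-type network, is how other CMOS gates are assembled from complementary pairs.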

CMOS isn’t the only way of making a logic gate, and it’s not obvious that it is the best one. One severe limitation of our computing technology is its energy consumption. This matters at a micro level: the heat generated by a laptop or mobile phone is very obvious, and it was problems of heat dissipation that underlay the slowdown in the growth of microprocessor power around 2004. It also matters at a global level, where the energy used by cloud computing is becoming a substantial share of total electricity consumption.

There is a physical lower limit to the energy that computing uses: the Landauer limit, the minimum energy that must be dissipated to erase one bit of information, which sets a floor on the cost of irreversible logical operations. It is a consequence of the second law of thermodynamics. Our current technology consumes more than three orders of magnitude more energy than this theoretical minimum, so there is room for improvement. Somewhere in the universe of technologies that don’t exist, but are physically possible, lies a computing technology superior to CMOS.
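The Landauer limit is easy to put a number on: it is k_B T ln 2 per bit erased, where k_B is Boltzmann’s constant and T the absolute temperature. The Python sketch below evaluates it at an assumed room temperature of 300 K, then multiplies by 1000 simply to restate the post’s “more than three orders of magnitude” as an energy; that second number is a lower bound implied by the text, not a measurement of any real processor.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact in the 2019 SI)

def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy dissipated to erase one bit of information: k_B * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)

e_min = landauer_limit_joules(300.0)  # assumed room temperature
print(f"Landauer limit at 300 K: {e_min:.2e} J per bit erased")
# "More than three orders of magnitude" above the limit means at least ~1000x:
print(f"1000x the limit: {1000 * e_min:.2e} J")
```

This prints a limit of about 2.87e-21 J, so even a thousandfold excess corresponds to only a few attojoules per operation; the point is how far below today’s switching energies the physical floor lies.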

Many alternative forms of computing have been tried out in the laboratory. Some use materials other than silicon: compound semiconductors, or new forms of carbon such as nanotubes and graphene. In others, information is embodied not in electric charge but in spin. The idea of using individual molecules as circuit elements, molecular electronics, has a long and somewhat chequered history. None of these approaches has yet made a significant commercial impact; incumbent technologies are always hard to displace. CMOS and its related technologies amount to a deep nanotechnology implemented at a massive scale; the huge investment in this technology has in effect locked us into a particular technology path.

There are alternative, non-semiconductor based computing paths that are worth mentioning, because they may become important in the future. One is to copy biology; our own brains deliver enormous computing power at remarkably low energy cost, with an architecture that is very different from the von Neumann architecture that human-built computers follow, and a basic unit that is molecular. Various radical approaches to computing take some inspiration from biology, from the new CMOS architectures that underlie neuromorphic computing to entirely molecular approaches based on DNA.

Quantum computing, on the other hand, offers the potential for another exponential leap forward in computing power, in principle. Many practical barriers remain before this potential can be turned into practice, however, and this is a topic for another discussion. Suffice it to say that, on a timescale of a decade or so, quantum computers will not replace conventional computers for anything more than some niche applications, and in any case they are likely to be deployed in tandem with conventional high performance computers, as accelerators for specific tasks, rather than as general purpose computers.

Finally, I should return to the point that semiconductors aren’t just valuable for computing; the field of power electronics is likely to become more and more important as we move to a net zero energy system. We will need a much more distributed and flexible energy grid to accommodate decentralised renewable sources of electricity, and this needs solid-state power electronics capable of handling very high voltages and currents: think of replacing house-size substations with suitcase-size solid-state transformers. Widespread uptake of electric vehicles, and the need for widely available rapid charging infrastructure, will place further demands on power electronics. Silicon is poorly suited to these applications, which require wide-bandgap semiconductors such as silicon carbide and other compound semiconductors, or diamond.

Sources

Chip War: The Fight for the World’s Most Critical Technology, by Chris Miller, is a great overview of the history of this technology.

Semiconductors in the UK: Searching for a strategy. Geoffrey Owen, Policy Exchange, 2022. Very good on the history of the UK industry.

To Every Thing There is a Season – lessons from the Alvey Programme for Creating an Innovation Ecosystem for Artificial Intelligence, by Luke Georghiou. Reflections on the Alvey Programme by one of the researchers who carried out its official evaluation.

Are Ideas Getting Harder to Find?, by Bloom, Jones, Van Reenen and Webb. American Economic Review (2020). An influential paper on diminishing rates of return on R&D, taking the semiconductor industry as a case study.

“Quantum Computing: Progress and Prospects” (2019), National Academies Press.

Up next: What should the UK do about semiconductors? Part 3: towards a UK semiconductor strategy