A couple of weeks ago I took part in a dialogue meeting in Brussels about nanotechnology in food, organised by the CIAA, the Confederation of the Food and Drink Industries of the EU. The meeting involved representatives from big food companies, from the European Commission and from agencies like the European Food Safety Authority, together with consumer groups like BEUC and the campaigning group Friends of the Earth Europe. The latter group recently released a report on food nanotechnology, Out of the laboratory and on to our plates: Nanotechnology in food and agriculture; according to the press release, this “reveals that despite concerns about the toxicity risks of nanomaterials, consumers are unknowingly ingesting them because regulators are struggling to keep pace with their rapidly expanding use.” The position of the CIAA is essentially that nanotechnology is an interesting technology that is still at the research stage and has not yet made it into products. One can get a good idea of the research agenda of the European food industry from the European Technology Platform Food for Life. As the only academic present, I tried in my contribution to clarify the different things people mean by “food nanotechnology”. Here, more or less, is what I said.

What makes the subject of nanotechnology particularly confusing and contentious is the ambiguity of its definition when applied to food systems. Most people’s definitions run along the lines of “the purposeful creation of structures with length scales of 100 nm or less to achieve new effects by virtue of those length scales”. But when one attempts to apply this definition in practice one runs into difficulties, particularly for food. It’s this ambiguity that lies behind the difference of opinion we’ve already heard today about how widespread the use of nanotechnology in food already is. On the one hand, Friends of the Earth says it knows of 104 nanofood products already on the market (and some analysts suggest the number may be more than 600). On the other hand, the CIAA maintains that, while active research in the area is going on, no actual nanofood products are yet on the market. In fact, both parties are, in their different ways, right; the problem is the ambiguity of definition.

The issue is that food is naturally nanostructured, so too wide a definition ends up encompassing much of modern food science and indeed, if you stretch it further, some aspects of traditional food processing. Consider the case of “nano-ice cream”: the FoE report states that “Nestlé and Unilever are reported to be developing a nano-emulsion based ice cream with a lower fat content that retains a fatty texture and flavour”. Without knowing the details of this research, one can be sure that it will involve essentially conventional food processing technology to control the structure and size of fat globules on the nanoscale. If the processing technology is conventional (and the economics of the food industry dictate that it must be), then what makes this nanotechnology, if anything does, is the availability of analytical tools that can observe the nanoscale structural changes that lead to the desirable properties. What makes this nanotechnology, then, is simply knowledge. In the light of the new knowledge these techniques give us, we could even argue that some traditional processes, which it now turns out involve manipulating structure on the nanoscale to achieve desirable effects, would constitute nanotechnology if it were defined this widely. For example, traditional whey cheeses like ricotta are made by creating the conditions for the whey proteins to aggregate into protein nanoparticles. These nanoparticles then aggregate further to form the particulate gels that give the cheese its desirable texture.

It should be clear, then, that there isn’t a single thing one can call “nanotechnology” – there are many different technologies, producing many different kinds of nano-materials. These different types of nanomaterials have quite different risk profiles. Consider cadmium selenide quantum dots, titanium dioxide nanoparticles, sheets of exfoliated clay, fullerenes like C60, casein micelles, phospholipid nanosomes – the risks and uncertainties of each of these examples of nanomaterials are quite different and it’s likely to be very misleading to generalise from any one of these to a wider class of nanomaterials.

To begin to make sense of the different types of nanomaterial that might be present in food, one distinction is very useful: that between engineered nanoparticles and self-assembled nanostructures. Engineered nanoparticles are covalently bonded, and are thus persistent and generally rather robust, though they may have important surface properties such as catalytic activity, and they may be prone to aggregation. Examples of engineered nanoparticles include titanium dioxide nanoparticles and fullerenes.

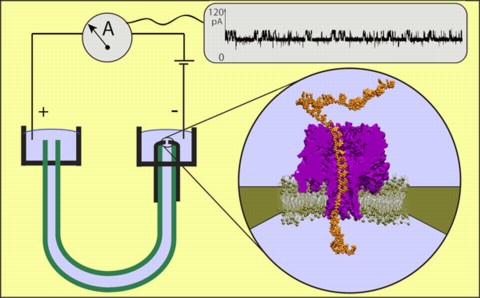

In self-assembled nanostructures, by contrast, molecules are held together by weak forces, such as hydrogen bonds and the hydrophobic interaction. The weakness of these forces makes such structures mutable and transient. Examples include soap micelles, protein aggregates (for example the casein micelles formed in milk), liposomes and nanosomes, and microcapsules and nanocapsules made from biopolymers such as starch.

So what kind of food nanotechnology can we expect? Here are some potentially important areas:

• Food science at the nanoscale. This means combining fairly conventional food processing techniques with nanoscale analytical techniques to achieve desirable properties. A major driver here will be the use of sophisticated food structuring to make palatable products with low fat contents.

• Encapsulating ingredients and additives. The encapsulation of flavours and aromas at the microscale, to protect delicate molecules and to enable their triggered or otherwise controlled release, is already widespread, and it is possible that reducing the length scale of these systems to the nanoscale might be advantageous in some cases. We are also likely to see a range of “nutraceutical” molecules come into more general use.

• Water-dispersible preparations of fat-soluble ingredients. Many food ingredients are fat-soluble; as a way of incorporating these into food and drink without fat, manufacturers have developed stable colloidal dispersions of these materials in water, with particle sizes in the range of hundreds of nanometers. For example, lycopene, familiar as the molecule that makes tomatoes red and believed to offer substantial health benefits, is marketed in this form by the German company BASF.

What this discussion needs above all is clarity, and that means clear definitions. We’ve seen discrepancies between estimates of how widespread food nanotechnology is in the marketplace now, and these discrepancies lead to unnecessary misunderstanding and distrust. Clarity about what we are talking about, and recognition of the diversity of the technologies involved, can help remove this misunderstanding and give us a sound basis for the sort of dialogue we’re participating in today.