Next week, on 26 March, I’m participating in a discussion event at NESTA, in London, sponsored by the think tank Policy Exchange. Also on the panel is K. Eric Drexler, the originator of the idea of nanotechnology in its most expansive form: an emerging technology which, when fully developed, will have truly transformational effects. In this view, it will allow us to make pretty much any material, device or artefact for little or no cost; we will be able to extend human lifespans almost indefinitely using cell-by-cell surgery; and we will create computers so powerful that they will host artificial intelligences greatly superior to those of humans. Drexler has a new book coming out in May, Radical Abundance: How a Revolution in Nanotechnology Will Change Civilization. I think this view overstates the potential of the technology, and (it shocks me to realise) I have been arguing this in some technical detail for nearly ten years. Although I have met Drexler and corresponded with him, this will be the first time I have shared a platform with him. To mark the occasion I have gone through my blog’s archives to make this anthology of my writings about Drexler’s vision of nanotechnology and my arguments with some of its adherents (who should not, of course, automatically be assumed to speak for Drexler himself).


Feynman, Drexler, and the National Nanotechnology Initiative

It’s fifty years since Richard Feynman delivered his famous lecture “There’s Plenty of Room at the Bottom”, and the anniversary has prompted a number of articles reflecting on its significance. The lecture has achieved mythic importance in discussions of nanotechnology; to many, it is nothing less than the foundation of the field. This myth has been critically examined by Chris Toumey (see this earlier post), who finds that the lecture’s significance was attached retrospectively, rather than being apparent as serious efforts in nanotechnology got underway.

There’s another narrative, though, that is popular with followers of Eric Drexler. According to this story, Feynman laid out in his lecture a coherent vision of a radical new technology; Drexler popularised this vision and gave it the name “nanotechnology”. Then, inspired by Drexler’s vision, the US government launched the National Nanotechnology Initiative. This was then hijacked by chemists and materials scientists whose work had nothing to do with the radical vision. In this way, funding obtained on the basis of the expansive promises of “molecular manufacturing”, the Feynman vision as popularised by Drexler, has been used to research useful but essentially mundane products like stain-resistant trousers and germicidal washing machines. To add insult to injury, the materials scientists who had so successfully hijacked the funds then went on to belittle and ridicule Drexler and his theories. A recent article in the Wall Street Journal by Adam Keiper, “Feynman and the Futurists”, is written from this standpoint, and Drexler himself has expressed satisfaction with it on his own blog. I think this account is misleading at almost every point; the reality is both more complex and more interesting.

To begin with, Feynman’s lecture didn’t present a coherent vision at all; instead it was an imaginative but disparate set of ideas linked only by the idea of control on a small scale. I discussed this in my article in the December issue of Nature Nanotechnology – Feynman’s unfinished business (subscription required), and for more details see this series of earlier posts on Soft Machines (Re-reading Feynman Part 1, Part 2, Part 3).

Of the ideas dealt with in “Plenty of Room”, some have already come to pass and have indeed proved economically and societally transformative. These include the idea of writing on very small scales, which underlies modern IT, and the idea of making layered materials with layer thicknesses precisely controlled on the atomic scale, which was realised in techniques like molecular beam epitaxy and chemical vapour deposition (CVD); you see the results every time you use a white light-emitting diode or a solid state laser of the kind your DVD player contains. I think there were two ideas in the lecture that did contribute to the vision popularised by Drexler: the idea of “a billion tiny factories, models of each other, which are manufacturing simultaneously, drilling holes, stamping parts, and so on”, and, linked to this, the idea of doing chemical synthesis by physical processes. The latter has been realised at the proof-of-principle level by carrying out chemical reactions with a scanning tunnelling microscope. There’s been a lot of work in this direction since Don Eigler’s demonstration of STM control of single atoms, no doubt some of it funded by the much-maligned NNI, but I think it’s fair to say that so far this approach has turned out to be more technically difficult and less useful (on foreseeable timescales) than people anticipated.

Strangely, the second part of the fable, in which Drexler popularises the Feynman vision, actually underestimates, I think, the originality of Drexler’s own contribution. The arguments Drexler made in support of his radical vision of nanotechnology drew extensively on biology, an area Feynman had touched on only very superficially. What’s striking on re-reading Drexler’s original PNAS article, and indeed Engines of Creation, is how biologically inspired the vision is: the models he looks to are the protein- and nucleic-acid-based machines of cell biology, like the ribosome. In Drexler’s writing now (see, for example, this recent entry on his blog), this biological inspiration is very much to the fore; he’s looking to the DNA-based nanotechnology of Ned Seeman, Paul Rothemund and others as the exemplar of the way forward to fully functional, atomic scale machines and devices. This work builds on the self-assembly paradigm that has been such a big part of academic work in nanotechnology around the world.

There’s an important missing link between the biological inspiration of ribosomes and molecular motors and the vision of “tiny factories”, the scaled-down mechanical engineering familiar from the simulations of atom-based cogs and gears by Drexler and his followers. What wasn’t fully recognised until after Drexler’s original work was that the fundamental operating principles of biological machines are quite different from the rules that govern macroscopic machines, simply because physics works quite differently in water at the nanoscale than it does in our familiar macroworld. I’ve argued at length on this blog, in my book “Soft Machines”, and elsewhere (see, for example, “Right and Wrong Lessons from Biology”) that this means the lessons one should draw from biological machines are rather different from the ones Drexler originally drew.

There is one final point worth making. From the perspective of Washington-based writers like Keiper, one can understand the focus on the interactions between academic scientists and business people in the USA, Drexler and his followers, and the machinations of the US Congress. But from the point of view of the wider world, this is a rather parochial perspective. I’d estimate that somewhere between a quarter and a third of the world’s nanotechnology is being done in the USA. Perhaps for the first time in recent years, a major new technology is largely being developed outside the USA: in Europe to some extent, but with an unprecedented leading role being taken by places like China, Korea and Japan. In these places the “nanotech schism” that seems so important in the USA simply isn’t relevant; people are just pressing on to where the technology leads them.

Happy New Year

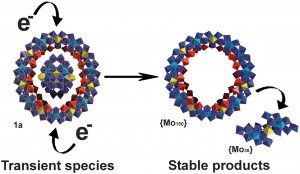

Here are a couple of nice nano-images for the New Year. The first depicts a nanoscale metal-oxide donut, whose synthesis is reported in a paper (abstract, subscription required for full article) in this week’s Science. The paper, whose first author is Haralampos Miras, comes from the group of Lee Cronin at the University of Glasgow. The object is made by templated self-assembly of molybdenum oxide units; the interesting feature is that the cluster which templates the ring (the “hole” around which the donut forms) assembles as a precursor during the process and is then ejected from the ring once the ring is complete.

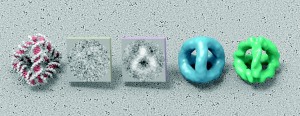

The second image depicts the stages in reconstructing a high resolution electron micrograph of a self-assembled tetrahedron made from DNA. In an earlier blog post I described how Russell Goodman, a grad student in the group of Andrew Turberfield at Oxford, was able to make rigid tetrahedra of DNA less than 10 nm in size. Now, in collaboration with Takayuki Kato and Keiichi Namba’s group at Osaka University, they have obtained remarkable electron micrographs of these structures. The work was published last summer in Nano Letters (subscription required). The figure shows, from left to right, the predicted structure, a raw micrograph obtained by cryo-TEM (transmission electron microscopy of frozen samples), a micrograph processed to enhance its contrast, and two three-dimensional reconstructions obtained from a large number of such images. The sharpest image, on the right, has a resolution of 12 Å; this is believed to be the smallest object, natural or artificial, that has been imaged by cryo-TEM at this resolution, which is good enough to distinguish between the major and minor grooves of the DNA helices that form the struts of the tetrahedron.

A happy New Year to all readers.

Soft machines and robots

Robots is a website featuring regular podcasts about various aspects of robotics; it is currently featuring a podcast of an interview with me by Sabine Hauert, from EPFL’s Laboratory of Intelligent Systems. This was prompted by my talk at the IEEE Congress on Evolutionary Computation, which was essentially about how to build a nanobot. Regular readers of this blog will not be surprised to hear that a strong theme of both interview and talk is the need to take inspiration from biology when designing “soft machines”, which need to be optimised for the special, and to us very unfamiliar, physics of the nanoworld, rather than using inappropriate design principles derived from macroscopic engineering. For more on this, the interested reader might like to take a look at my earlier essay, “Right and wrong lessons from biology”.

Nanobots, nanomedicine, Kurzweil, Freitas and Merkle

As Tim Harper observes, with the continuing publicity surrounding Ray Kurzweil, it seems to be nanobot week. In one further contribution to the genre, I’d like to address some technical points made by Rob Freitas and Ralph Merkle in response to my article from last year, Rupturing the Nanotech Rapture, in which I was critical of their vision of nanobots (my thanks to Rob Freitas for bringing their piece to my attention in a comment on my earlier entry). Before jumping straight into the technical issues, it’s worth trying to make one point clear. While I think the vision of nanobots that underlies Kurzweil’s extravagant hopes is flawed, the enterprise of nanomedicine itself has huge promise. So what’s the difference?

We can all agree on why nanotechnology is potentially important for medicine. The fundamental operations of cell biology all take place on the nanoscale, so if we wish to intervene in those operations, there is a logic to carrying out these interventions at the right scale, the nanoscale. But the physical environment of the warm, wet nano-world is a very unfamiliar one, dominated by violent Brownian motion, the viscosity-dominated regime of low Reynolds number fluid dynamics, and strong surface forces. This means that the operating principles of cell biology rely on phenomena that are completely unfamiliar in the macroscale world: self-assembly, molecular recognition, molecular shape change, diffusive transport and molecule-based information processing. It seems to me that the most effective interventions will use the same “soft nanotechnology” paradigm, rather than the mechanical paradigm that underlies the Freitas/Merkle vision of nanobots, which is inappropriate for the warm, wet nanoscale world that our biology works in. We can expect to see increasingly sophisticated drug delivery devices, targeted to the cellular sites of disease, able to respond to their environment, and even able to perform simple molecule-based logical operations to decide on appropriate responses to their situation. This isn’t to say that nanomedicine of any kind is going to be easy. We’re still some way from being able to disentangle completely the sheer complexity of the cell biology that underlies diseases such as cancer or rheumatoid arthritis, while for other hugely important conditions like Alzheimer’s there isn’t even consensus on the ultimate cause of the disease. It’s certainly reasonable to expect improved treatments and better prospects for sufferers of serious diseases, including age-related ones, in twenty years or so, but this is a long way from the prospects of seamless nanobot-mediated neuron-computer interfaces and indefinite life-extension that Kurzweil hopes for.
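To put a rough number on the “low Reynolds number” regime, here is a back-of-envelope sketch. The Reynolds number Re = ρvL/μ compares inertial to viscous forces. The water properties (density 1000 kg/m³, viscosity 1e-3 Pa s, roughly room temperature) are standard approximations, while the device size and speed (1 µm at 10 µm/s) and the swimmer (2 m at 1 m/s) are purely illustrative assumptions, not figures from any particular nanomedicine design.

```python
"""Back-of-envelope Reynolds numbers for objects moving through water.

Sizes and speeds are illustrative assumptions chosen only to show orders
of magnitude; they are not taken from any specific nanobot design.
"""

WATER_DENSITY = 1000.0    # kg/m^3, approximate
WATER_VISCOSITY = 1.0e-3  # Pa s, water at roughly room temperature


def reynolds_number(speed: float, length: float,
                    density: float = WATER_DENSITY,
                    viscosity: float = WATER_VISCOSITY) -> float:
    """Re = rho * v * L / mu, the ratio of inertial to viscous forces."""
    return density * speed * length / viscosity


# Hypothetical micron-sized device moving at 10 micrometres per second.
device_re = reynolds_number(speed=1.0e-5, length=1.0e-6)

# A human swimmer, roughly 2 m long, moving at about 1 m/s.
swimmer_re = reynolds_number(speed=1.0, length=2.0)

if __name__ == "__main__":
    print(f"micron-scale device: Re ~ {device_re:.0e}")
    print(f"human swimmer:       Re ~ {swimmer_re:.0e}")
    # About eleven orders of magnitude separate the two regimes.
    print(f"ratio: {swimmer_re / device_re:.0e}")
```

At Re far below 1, as for the hypothetical device, viscous drag overwhelms inertia: the moment propulsion stops, motion stops, which is one reason intuitions from macroscale machines transfer so poorly.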

I now move on to the specific issues raised in the response from Freitas and Merkle.

Several items that Richard Jones mentions are well-known research challenges, not showstoppers.

Until the show has actually started, this of course is a matter of opinion!

All have been previously identified as such along with many other technical challenges not mentioned by Jones that we’ve been aware of for years.

Indeed, and I’m grateful that the cited page acknowledges my earlier post Six Challenges for Molecular Nanotechnology. However, being aware of these and other challenges doesn’t make them go away.

Unfortunately, the article also evidences numerous confusions: (1) The adhesivity of proteins to nanoparticle surfaces can (and has) been engineered;

Indeed, polyethylene oxide/glycol end-grafted polymers (brushes) are commonly used to suppress protein adsorption at liquid/solid interfaces (and, less commonly, brushes of other water-soluble polymers, as in the link, can be used). While these methods work pretty well in vitro, they don’t work very well in vivo, as evidenced by the relatively short clearance times of “stealth” liposomes, which use a PEG layer to avoid detection by the body. The reasons for this still aren’t clear, as the fundamental mechanisms by which brushes suppress protein adsorption aren’t yet fully understood.

(2) nanorobot gears will reside within sealed housings, safe from exposure to potentially jamming environmental bioparticles;

This assumes that “feed-throughs” permitting traffic in and out of the controlled environment while perfectly excluding contaminants are available (see point 5 of my earlier post Six Challenges for Molecular Nanotechnology). To date I don’t see a convincing design for these.

(3) microscale diamond particles are well-documented as biocompatible and chemically inert;

They’re certainly chemically inert, but the use of “biocompatible” here betrays a misunderstanding; that proteins adsorb to diamond surfaces is both experimentally verified and to be expected. Diamond-like carbon is used as a coating in surgical implants and stents and is biocompatible in the sense that it doesn’t cause cytotoxicity or inflammatory reactions. Its biocompatibility with blood is also good, in the sense that it doesn’t lead to thrombus formation. But this isn’t because proteins don’t adsorb to the surface; it is because albumin adsorbs preferentially over fibrinogen, which is correlated with a lower tendency of platelets to attach to the surface (see e.g. R. Hauert, Diamond and Related Materials 12 (2003) 583). For direct experimental measurements of protein adsorption to an amorphous diamond-like film see, for example, here. Almost all this work has been done not on single crystal diamond but on polycrystalline or amorphous diamond-like films, but there’s no reason to suppose the situation will be any different for single crystals; these are simply hydrophobic surfaces of the kind that proteins all too readily adsorb to.

(4) unlike biological molecular motors, thermal noise is not essential to the operation of diamondoid molecular motors;

Indeed, in contrast to biological motors, which depend on thermal noise, diamondoid motors are likely to find noise highly detrimental to their operation. This, to state the obvious, is a difficulty in the environment of the body, where such thermal noise is inescapable.

(5) most nanodiamond crystals don’t graphitize if properly passivated;

Depends what you mean by most, I suppose. Raty et al. (Phys. Rev. Lett. 90, 037401 (2003)) carried out quantum simulations showing that ideally terminated diamond particles 1.2 nm and 1.4 nm in size would undergo spontaneous surface reconstruction at low temperature. The equilibrium surface structure will depend on shape and size, of course, but you won’t know until you do the calculations or the experiments.

(6) theory has long supported the idea that contacting incommensurate surfaces should easily slide and superlubricity has been demonstrated experimentally, potentially allowing dramatic reductions in friction inside properly designed rigid nanomachinery;

Superlubricity is an interesting phenomenon in which friction falls to very low (though probably non-zero) values when rigid surfaces are put together out of crystalline register and slide past one another. The key phrase above is “properly designed rigid nanomachinery”. Diamond has very low friction macroscopically because it is very stiff, but nanomachines aren’t going to be built out of semi-infinite blocks of the stuff. Measured by, for example, the average relative thermal displacements observed at 300 K, diamondoid nanomachines are going to be rather floppy. It remains to be seen how important this will be in permitting energy to leak out of the driving modes of the machine into thermal energy, and we need to see some simulations of dynamic friction in “properly designed rigid nanomachinery”.
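The “floppiness” point can be made semi-quantitative with the equipartition theorem: a harmonic mode with effective spring constant k has a mean-square thermal displacement ⟨x²⟩ = k_B T / k, so x_rms = √(k_B T / k). The sketch below assumes a single harmonic mode, and the stiffness values are hypothetical placeholders for illustration, not computed properties of any particular diamondoid design; the C-C bond length is used only as a familiar yardstick.

```python
"""Equipartition estimate of rms thermal displacement for a harmonic mode.

For effective spring constant k: (1/2) k <x^2> = (1/2) kB T,
so x_rms = sqrt(kB T / k). The stiffnesses used below are hypothetical.
"""
import math

BOLTZMANN = 1.380649e-23   # J/K, exact SI value
CC_BOND_LENGTH = 0.154e-9  # m, typical carbon-carbon single bond


def rms_thermal_displacement(stiffness: float, temperature: float = 300.0) -> float:
    """Root-mean-square displacement (m) of a harmonic mode at temperature T (K)."""
    if stiffness <= 0:
        raise ValueError("stiffness must be positive")
    return math.sqrt(BOLTZMANN * temperature / stiffness)


if __name__ == "__main__":
    # Hypothetical effective stiffnesses (N/m) for the relative motion of two parts.
    for k in (1.0, 10.0, 100.0):
        x = rms_thermal_displacement(k)
        print(f"k = {k:6.1f} N/m: x_rms = {x * 1e12:5.1f} pm "
              f"({x / CC_BOND_LENGTH:.0%} of a C-C bond)")
```

Because x_rms scales only as 1/√k, making a part a hundred times stiffer reduces its thermal wobble just tenfold, which is why small, finite components cannot inherit the rigidity of bulk diamond for free.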

(7) it is hardly surprising that nanorobots, like most manufactured objects, must be fabricated in a controlled environment that differs from the application environment;

This is a fair point as far as it goes. But consider why it is that an integrated circuit, made in a controlled ultra-clean environment, works when it is brought out into the scruffiness of my office. It’s because it can be completely sealed off, with traffic in and out of the IC carried out entirely by electrical signals. Our nanobot, on the other hand, will need to communicate with its environment by the actual traffic of molecules, hence the difficulty of the feed-through problem referred to above.

(8) there are no obvious physical similarities between a microscale nanorobot navigating inside a human body (a viscous environment where adhesive forces control) and a macroscale rubber clock bouncing inside a clothes dryer (a ballistic environment where inertia and gravitational forces control);

The somewhat strained nature of this simile illustrates the difficulty of conceiving the very foreign and counter-intuitive nature of the warm, wet, nanoscale world. This is exactly why the mechanical engineering intuitions that underlie the diamondoid nanobot vision are so misleading.

and (9) there have been zero years, not 15 years, of “intense research” on diamondoid nanomachinery (as opposed to “nanotechnology”). Such intense research, while clearly valuable, awaits adequate funding

I have two replies to this. Firstly, even accepting the very narrow restriction to diamondoid nanomachinery, I don’t see how the claim of “zero years” squares with what Freitas and Merkle have been doing themselves, since both were employed as research scientists at Zyvex, and subsequently at the Institute for Molecular Manufacturing. Secondly, there has been a huge amount of work in nanomedicine and nanoscience directly related to these issues. For example, the field of manipulating and reacting individual atoms on surfaces, which directly underlies the visions of mechanosynthesis so important to the Freitas/Merkle route to nanotechnology, dates back to Don Eigler’s famous 1990 Nature paper; this paper has since been cited by more than 1300 other papers, which gives an indication of how much work there has been in this area worldwide.

— as is now just beginning.

And I’m delighted by Philip Moriarty’s fellowship too!

I’ve responded to these points at length, since we frequently read complaints from proponents of MNT that no-one is prepared to debate the issues at a technical level. But I do this with some misgivings. It’s very difficult to prove a negative, and none of my objections amounts to a proof of physical impossibility. But what is not forbidden by the laws of physics is not necessarily likely, let alone inevitable. When one is talking about such powerful human drives as the desire not to die, and the urge to reanimate deceased loved ones, it’s difficult to avoid the conclusion that rational scepticism may be displaced by deeper, older human drives.

The Singularity gets a University

There’s been a huge amount of worldwide press coverage of the news that Ray Kurzweil has launched a “Singularity University”, to promote his vision (not to mention his books and forthcoming film) of an exponential growth in technology leading to computers more intelligent than humans and an end to aging and death. The coverage is largely uncritical – even the normally sober Financial Times says only that some critics think that the Singularity may be dangerous. To the majority of critics, though, the idea isn’t so much dangerous as completely misguided.

The Guardian, at least, quotes the iconic cognitive science and computer researcher Douglas Hofstadter as saying that Kurzweil’s ideas included “the craziest sort of dog excrement”, which is graphic, if not entirely illuminating. For a number of more substantial critiques, take a look at the special singularity issue of the magazine IEEE Spectrum, published last summer. Unsurprisingly, the IEEE blog takes a dim view.

Many of the press reports refer to the role of nanotechnology in Kurzweil’s vision of the singularity; according to the Guardian, for example, “Kurzweil predicts the creation of “nanobots” that will patrol our bloodstreams, repairing wear and tear as they go, and keeping our bodies perpetually young.” It was this vision that I criticised in my own contribution to the IEEE Singularity special, Rupturing the Nanotech Rapture; I notice that the main promoters of these ideas, Robert Freitas and Ralph Merkle, are among the founding advisors. At the time, I found it interesting that, in their responses to my article, a number of self-identified transhumanists and singularitarians attempted to distance themselves from Kurzweil’s views, characterising them as atypical of their movement. It will be interesting to see how strenuously they now attempt to counter what seems to be a PR coup by Kurzweil.

It’s worth stressing that what’s been established isn’t really a university; it’s not going to do research and it won’t give degrees. Instead, it will offer 3-day, 10-day and 9-week courses where, to quote from the website, “one could imagine, for example, that issues such as global poverty, hunger, climate crisis could be studied from an interdisciplinary standpoint where the power of artificial intelligence, nanotechnology, genomics, etc are brought to bare in a cooperate fashion to seek solutions” (sic). Singularitarianism is an ideology, and this is a vehicle to promote it.

Among the partners in the venture, Google has succeeded in getting a huge amount of publicity for its $250,000 contribution, though whether it’s a wise cause for it to be associated with remains to be seen. As for the role of NASA and space entrepreneur Peter Diamandis, I leave the last word to that ever-reliable source of technology news, The Register: “There will be the traditional strong friendship between IT/net/AI enthusiasm and space-o-philia. In keeping with the NASA setting, SU will have strong involvement from the International Space University. ISU, founded in 1987 by Diamandis and others, is seen as having been key to the vast strides humanity has made in space technology and exploration in the last two decades”

Happy New Year

A kind friend, who reads a lot more science fiction than I do, gave me a copy of Charles Stross’s novel Accelerando for Christmas, on the grounds that after all my pondering on the Singularity last year I ought to be up to speed with what he considers the definitive fictional treatment. I’ve nearly finished it, and I must say I especially enjoyed the role of the uploaded lobsters. But it did make me wonder what Stross’s own views about the singularity are these days. The answer is on his blog, in this entry from last summer: That old-time new-time religion. I’m glad to see that his views on nanotechnology are informed by such a reliable source.

A belated Happy New Year to my readers.

Will nanotechnology lead to a truly synthetic biology?

This piece was written in response to an invitation from the management consultants McKinsey to contribute to a forthcoming publication discussing the potential impacts of biotechnology in the coming century. This is the unedited version, which is quite a lot longer than the version that will be published.

The discovery of an alien form of life would be the discovery of the century, with profound scientific and philosophical implications. Within the next fifty years, there’s a serious chance that we’ll make this discovery, not by finding life on a distant planet or indeed by such aliens visiting us on earth, but by creating this new form of life ourselves. This will be the logical conclusion of using the developing tools of nanotechnology to build a “bottom-up” version of synthetic biology which, instead of rearranging and redesigning the existing components of “normal” biology, as currently popular visions of synthetic biology propose, uses the inspiration of biology to synthesise entirely novel systems.

Life on earth is characterised by a stupendous variety of external forms and ways of life. To us, it’s the differences between mammals like us and insects, trees and fungi that seem most obvious, while there’s a vast variety of other unfamiliar and invisible organisms that are outside our everyday experience. Yet, underneath all this variety there’s a common set of components that underlies all biology. There’s a common genetic code, based on the molecule DNA, and in the nanoscale machinery that underlies the operation of life, based on proteins, there are remarkable continuities between organisms that on the surface seem utterly different. That all life is based on the same type of molecular biology – with information stored in DNA, transcribed through RNA to be materialised in the form of machines and enzymes made out of proteins – reflects the fact that all the life we know about has evolved from a common ancestor. Alien life is a staple of science fiction, of course, and people have speculated for many years that if life evolved elsewhere it might well be based on an entirely different set of basic components. Do developments of nanotechnology and synthetic biology mean that we can go beyond speculation to experiment?

Certainly, the emerging discipline of synthetic biology is currently attracting excitement and foreboding in equal measure. It’s important to realise, though, that the most extensively promoted visions of synthetic biology now don’t propose making entirely new kinds of life. Rather than aiming to make a new type of wholly synthetic alien life, they propose to radically re-engineer existing life forms. In one vision, the idea is to identify independent parts or modules in living systems that could be reassembled to achieve new, radically modified organisms that deliver some desired outcome, for example synthesising a particularly complicated molecule. In one important example of this approach, researchers at Lawrence Berkeley National Laboratory developed a strain of E. coli that synthesises a precursor to artemisinin, a potent (and expensive) anti-malarial drug. In a sense, this field is a reaction to the discovery that genetic modification of organisms is more difficult than previously thought; rather than being able to get what one wants from an organism by altering a single gene, one often needs to re-engineer entire regulatory and signalling pathways. In these complex processes, protein molecules (enzymes) essentially function as molecular switches, which respond to the presence of other molecules by initiating further chemical changes. It’s become commonplace to draw analogies between these complex chemical networks and electronic circuits, and in this analogy this kind of synthetic biology can be thought of as the wholesale rewiring of the (biochemical) circuits which control the operation of an organism. The well-publicised proposals of Craig Venter are even more radical: the project is to create a single-celled organism that has been slimmed down to have only the minimal functions consistent with life, and then to replace its genetic material with a new, entirely artificial genome created in the lab from synthetic DNA. The analogy used here is that one is “rebooting” the cell with a new “operating system”. Dramatic as this proposal sounds, though, the artificial life-form that would be created would still be based on the same biochemical components as natural life. It might be synthetic life, but it’s not alien.

So what would it take to make a synthetic life-form that was truly alien? It seems difficult to argue that this wouldn’t be possible in principle. As we learn more about the details of the way cell biology works, we can see that it is intricate and marvellous, but in no sense miraculous; it’s based on machinery that operates on principles consistent with the way we know physical laws operate on the nanoscale. These principles, it should be said, are very different from the ones that underlie the sorts of engineering we are used to on the macroscale; nanotechnologists have a huge amount to learn from biology. But we are already seeing very crude examples of synthetic nanostructures and devices that use some of the design principles of biology: designed molecules that self-assemble to make molecular bags resembling cell membranes; pores that open and close to let molecules in and out of these enclosures; molecules that recognise other molecules and respond by changing shape. It’s quite conceivable that these components could be improved and integrated into systems. One could imagine a proto-cell, with pores controlling the traffic of molecules in and out of it, containing a network of molecules and machines that together added up to a metabolism, taking in energy and chemicals from the environment and using them to make the components needed for the system to maintain itself, grow and perhaps reproduce.

Would such a proto-cell truly constitute an artificial alien life-form? The answer to this question, of course, depends on how we define life. But experimental progress in this direction will itself help answer this thorny question, or at least allow us to pose it more precisely. The fundamental problem we have when trying to talk about the properties of life in general is that we only know about a single example. Only when we have some examples of alien life will it be possible to talk about the general laws, not of biology, but of all possible biologies. The quest to make artificial alien life will teach us much about the origins of our kind of life. Experimental research into the origins of life is an attempt to rerun the emergence of our kind of life in the early history of the earth, and is in effect an attempt to create artificial alien life from those molecules that can plausibly be argued to have been present on the early earth. Using nanotechnology to make a functioning proto-cell should be an easier task than this, as we don’t have to restrict ourselves to the kinds of materials that occurred naturally on the early earth.

Creating artificial alien life would be a breathtaking piece of science, but it’s natural to ask whether it would have any practical use. The selling point of the currently most popular visions of synthetic biology is that they will permit us to do difficult chemical transformations in much more effective ways – making hydrogen from sunlight and water, for example, or making complex molecules for pharmaceutical uses. Conventional life, including the modifications proposed by synthetic biology, operates only in a restricted range of environments, so it’s possible to imagine making a type of alien life that operated in quite different environments – at high temperatures, or in liquid metals, for example – opening up entirely different types of chemistry. These utilitarian considerations, though, pale in comparison to what would be implied more broadly if we made a technology that had a life of its own.

Metamodern

Eric Drexler, the author of Nanosystems and Engines of Creation, launches his own blog today – Metamodern. The topics he’s covered so far include DNA nanotechnology and nanoplasmonics; these, to my mind, are a couple of the most exciting areas of modern nanoscience.

In the various debates about nanotechnology that have taken place over the years, not least on this blog, one sometimes has the sense that some of the people who presume to speak on behalf of Drexler and his ideas aren’t necessarily doing him any favours, so I’m looking forward to reading about what Drexler is thinking about now, directly from the source.

Nanotechnology and the singularitarians

A belief in the power and imminence of the Drexlerian vision of radical nanotechnology is part of the belief-package of adherents of the view that an acceleration of technology, linked particularly with the development of a recursively self-improving, super-human, artificial intelligence, will shortly lead to a moment of ineffably rapid technological and societal change – the Singularity. So it’s not surprising that my article in the IEEE Spectrum special issue on the Singularity – “Rupturing the Nanotech Rapture” – has generated some reaction amongst the singularitarians. The longest response has come from Michael Anissimov, whose blog Accelerating Future offers an articulate statement of the singularitarian case. Here are my thoughts on some of the issues he raises.

One feature of his response is his dissociation from some of the stronger claims of his fellow singularitarians. For example, he responds to the suggestion that MNT will allow any material or artefact – “a Stradivarius or a steak” – to be made in abundance by suggesting that no-one thinks this anymore, and that this is a “red herring” that has arisen from “journalists inaccurately summarizing the ideas of scientists”. On the contrary, this claim has been at the heart of the rhetoric surrounding MNT from the earliest writings of Drexler, who wrote in “Engines of Creation” “Because assemblers will let us place atoms in almost any reasonable arrangement, they will let us build almost anything that the laws of nature allow to exist.” Elsewhere, Anissimov distances himself from Kurzweil, whom he includes in a group of futurists who “justifiably attract ridicule”.

This raises the question of who speaks for the singularitarians. As an author writing here for a publication with a fairly large circulation, it seems obvious to me that the authors whose arguments I need to address first are those whose books themselves command the largest circulation, because that’s where most readers will have got their ideas about the singularity from. So, the first thing I did when I got this assignment was to read Kurzweil’s bestseller “The Singularity is Near”; after all, it’s Kurzweil who is able to command articles in major newspapers and is about to release a film. More specific to MNT, Drexler’s “Engines of Creation” obviously has to be a major point of reference, together with more recent books like Josh Hall’s “Nanofuture”. For the technical details of MNT, Drexler’s “Nanosystems” is the key text. It may well be that Michael and his associates have more sophisticated ideas about MNT and the singularity, but while these ideas remain confined to discussions on singularitarian blogs and email lists, they aren’t realistically going to attract the attention that people like Kurzweil do, and it’s appropriate that the publicly prominent faces of singularitarianism should attract the efforts of those arguing against the notion.

A second theme of Michael’s response is the contention that the research that will lead to MNT is happening anyway. It’s certainly true that there are many exciting developments going on in nanotechnology laboratories around the world. What’s at issue, though, is what direction these developments are taking us. Given the tendency of singularitarians towards technological determinism, it’s natural for them to assume that all these exciting developments are milestones on the way to a nano-assembler, and that progress towards the singularity can be measured by the weight of press releases flowing from the press offices of universities and research labs around the world. The crucial point, though, is that there’s no force driving technology towards MNT. Yes, technology is moving forward, but the road it’s taking is not the one anticipated by MNT proponents. It’s not clear to me that Michael has understood my central argument. It’s true that biology offers an existence proof for advanced nanotechnological devices of one kind or another – as Michael says, “Obviously, a huge number of biological entities, from molecule-sized to cell-sized, regularly traverse the body and perform a wide variety of essential functions, so we know such a thing is possible in principle.” But this doesn’t allow us to conclude that nanorobots built on the mechanical engineering principles of MNT will be possible, because the biological machines work on entirely different principles. The difficulties I outline for MNT that arise as a result of the different physics of the nanoscale are not difficulties for biological nanotechnology, because its very different operating principles exploit this different physics rather than trying to engineer round it.

What’s measured by all these press releases, then, is progress towards a whole variety of technological goals, many very different from the goals envisaged for MNT, and for each of which we simply don’t know, at present, whether it is feasible. I’ve given my arguments as to why MNT actually looks less likely now than it did ten years ago, and Michael isn’t able to counter these arguments other than by saying that “Of course, all of these challenges were taken into account in the first serious study of the feasibility of nanoscale robotic systems, titled Nanosystems…. We’ll need to build nanomachines using nanomechanical principles, not naive reapplications of macroscale engineering principles.” But Nanosystems is all about applying macroscale engineering principles – right at the outset it states that “molecular manufacturing applies the principles of mechanical engineering to chemistry.” Instead of work directed towards MNT, we’re now seeing other goals being pursued – goals like quantum computing, DNA based nanomachines, a path from plastic electronics to ultracheap computing and molecular electronics, breakthroughs in nanomedicine, optical metamaterials. Far from being incremental updates, many of these research directions hadn’t even been conceived when Drexler wrote “Engines of Creation”, and, unlike the mechanical engineering paradigm, these all really do exploit the different and unfamiliar physics of the nanoscale. All these are being actively researched now, but not all of them will pan out, and other entirely unforeseen technologies will be discovered and get people excited anew.

Ultimately, Michael’s arguments boil down to a concatenation of ever-hopeful “ifs” and “ands”. In answer to my point about MNT-like processes that could only be got to work at low temperatures and in ultra-high vacua, Michael says “If the machines used to maintain high vacuum and extreme refrigeration could be manufactured for the cost of raw materials, and energy can be obtained in great abundance from nano-manufactured, durable, self-cleaning solar panels, I am skeptical that this would be as substantial of a barrier as it is to similar high-requirement processes today.” I think there’s a misunderstanding of economics here. Things can only be manufactured for the cost of their raw materials if the capital cost of the manufacturing machinery is very small. But this capital cost itself mostly reflects the amortization of the research and development costs of developing the necessary plant and equipment. What we’ve learnt from the semiconductor industry is that as technology progresses these capital costs become larger and larger, and more and more dominant in the economics of the industry. It’s difficult to see what can reverse this trend without invoking a deus ex machina. It’s just such an invocation that arguments for the singularity seem, in the end, to reduce to.