The use of nanotechnology in the food industry seems to be creeping up the media agenda at the moment. The Times on Saturday published an extended article by Vivienne Parry in its “Body and Soul” supplement, called Food fight on a tiny scale. As the title indicates, the piece is framed around the idea that we are about to see a rerun of the battles about genetic modification of food in the new context of nano-engineered foodstuffs. Another article appeared in the New York Times a few weeks ago: Risks of engineering a better ice cream.

Actually, apart from the rather overdone references to a potential consumer backlash, both articles are fairly well-informed. The body of Vivienne Parry’s piece, in particular, makes it clear why nanotechnology in food presents a confusingly indistinct and diffuse target. Applications in packaging, for example in improving the resistance of plastic bottles to gas permeation, are already with us and are relatively uncontroversial. Longer-range visions of “smart packaging” also offer potential consumer benefits, but may have downsides yet to be fully explored. More controversial, potentially, is the question of the addition of nanoscaled ingredients to food itself.

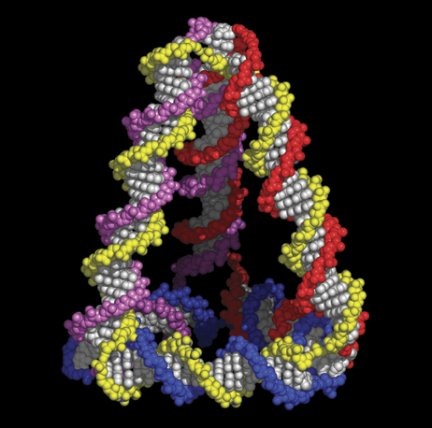

But this issue is very problematic, simply because so much of food is made up of components which are naturally nanoscaled, and much of traditional cooking and food processing consists of manipulating this nanoscale structure. To give just one example, the traditional process of making whey cheeses like ricotta consists of persuading whey proteins like beta-lactoglobulin to form nanoparticles each containing a small number of molecules, and then getting those nanoparticles to aggregate in an open, gel structure, giving the cheese its characteristic mechanical properties. The first example in the NY Times article – controlling the fat particle size in ice cream to get richer feeling low fat ice cream – is best understood as simply an incremental development of conventional food science, which uses the instrumentation and methodology of nanoscience to better understand and control food nanostructure.

There is, perhaps, more apparent ground for concern with food additives that are prepared in a nanoscaled form and directly added to foods. The kinds of molecules we are talking about here are molecules which add colour, flavour and aroma, and increasingly molecules which seem to confer some kind of health benefit. One example of this kind of thing is the substance lycopene, which is available from the chemical firm BASF as a dispersion of particles which are a few hundred nanometres in size. Lycopene is the naturally occurring dye molecule that makes tomatoes red, for which there is increasing evidence of health benefits (hence the unlikely-sounding claim that tomato ketchup is good for you). Like many other food component molecules, it is not soluble in water, but it is soluble in fat (as anyone who has cooked an olive oil or butter-based tomato sauce will know). Hence, if one wants to add it to a water-based product, like a drink, one needs to disperse it very finely for it to be available to be digested.

One can expect, then, more products of this kind, in which a nanoscaled preparation is used to deliver a water- or oil-soluble ingredient, often of natural origin, which on being swallowed will be processed by the digestive system in the normal way. What about the engineered nanoparticles, soluble in neither oil nor water, that have raised toxicity concerns in other contexts? These are typically inorganic materials, like carbon in its fullerene forms, or titanium dioxide, as used in sunscreen, or silica. Some of these inorganic materials are used in the form of micron-scale particles as food additives. It is conceivable (though I don’t know of any examples) that nanoscaled versions might be used in food, and that these might fall within a regulatory gap in the current legal framework. I talked about the regulatory implications of this, in the UK, a few months ago in the context of a consultation document issued by the UK’s Food Standards Agency. The most recent research report from the UK government’s Nanotechnology Research Coordination Group reveals that the FSA has commissioned a couple of pieces of research about this, but the FSA informs me that it’s too early to say much about what these projects have found.

I’m guessing that the media interest in this area has arisen largely from some promotional activity from the nanobusiness end of things. The consultancy Cientifica recently released a report, Nanotechnologies in the food industry, and there’s a conference in Amsterdam this week on Nano and Microtechnologies in the Food and Healthfood Industries.

I’m on my way to London right now, to take part in a press briefing on Nanotechnology in Food at the Science Media Centre. My family seems to be interacting a lot with the press at the moment, but I don’t suppose I’ll do as well as my wife, whose activities last week provoked this classic local newspaper headline in the Derbyshire Times: School Axe Threat Fury. And people complain about scientific writing being too fond of stacked nouns.