80% of our energy comes from burning fossil fuels, and that needs to change, fast. By the middle of this century we need to be approaching net zero carbon emissions, if the risk of major disruption from climate change is to be lowered – and the middle of this century is not very far away, when measured in terms of the lifetime of our energy infrastructure.

My last post – If new nuclear doesn’t get built, it will be fossil fuels, not renewables, that fill the gap – tried to quantify the scale of the problem. All our impressive recent progress in implementing wind and solar energy will be wiped out by the loss of 60 TWh a year of low-carbon energy over the next decade, as the UK’s fleet of Advanced Gas-cooled Reactors is retired; and even with the most optimistic projections for the growth of wind and solar, without new nuclear build the prospect of decarbonising our electricity supply remains distant. Above all, we need to remember that the biggest part of our energy consumption comes from directly burning oil and gas – for transport, industry and domestic heating – and this needs to be replaced by more low-carbon electricity. We need more nuclear energy.

The UK’s current nuclear new build plans are in deep trouble

All but one of our existing nuclear power stations will be shut down by 2030 – only the Pressurised Water Reactor at Sizewell B, rated at 1.2 GW, will remain. So, without any new nuclear power stations opening, around 60 TWh a year of low-carbon energy will be lost. What is the current status of our nuclear new build programme? Here’s where we are now:

So this leaves us with three scenarios for the post-2030 period.

We can, I think, assume that Hinkley C is definitely happening – if that is the limit of our expansion of nuclear power, we’ll end up with about 24 TWh a year of low carbon electricity from nuclear, less than half the current amount.

With Sizewell C and Bradwell B, which are currently proceeding, though not yet finalised, we’ll have 78 TWh a year – this essentially replaces the lost capacity from our AGR fleet, with a small additional margin.

Only with the currently suspended projects at Wylfa, Oldbury and Moorside would we substantially increase nuclear’s contribution to low-carbon electricity, roughly doubling it to 143 TWh per year.
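The scenario figures above rest on a simple conversion from installed capacity to annual output: capacity multiplied by the hours in a year and by a capacity factor. Here is a minimal Python sketch of that conversion. The 90% capacity factor is an assumption borrowed from the Hinkley C cost estimate later in this post, and the capacities (3.2 GW for Hinkley C, 1.2 GW for Sizewell B) are the figures given elsewhere in the post.

```python
HOURS_PER_YEAR = 8760  # ignoring leap years


def annual_energy_twh(capacity_gw: float, capacity_factor: float) -> float:
    """Annual output in TWh of a plant with the given capacity and capacity factor."""
    # GW x hours gives GWh; divide by 1000 to convert to TWh.
    return capacity_gw * HOURS_PER_YEAR * capacity_factor / 1000


if __name__ == "__main__":
    # Assumed 90% capacity factor, as in the Hinkley C cost estimate.
    for name, capacity_gw in [("Hinkley C", 3.2), ("Sizewell B", 1.2)]:
        print(f"{name}: {annual_energy_twh(capacity_gw, 0.9):.1f} TWh/year")
```

At 90%, Hinkley C comes out at a little over 25 TWh a year, close to the round figure of about 24 TWh used above; the exact number is sensitive to the assumed capacity factor.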

Transforming the economics of nuclear power

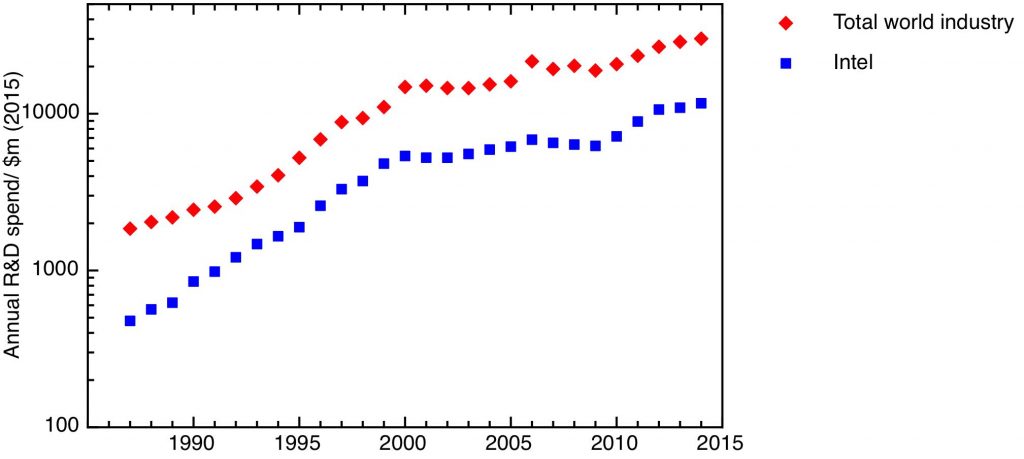

Why is nuclear power so expensive – and how can it be made cheaper? What’s important to understand about nuclear power is that its costs are dominated by the upfront capital cost of building a nuclear power plant, together with the provision that has to be made for safely decommissioning the plant at the end of its life. The actual cost of running it – including the cost of the nuclear fuel – is, by comparison, quite small.

Let’s illustrate this with some rough indicative figures. The capital cost of Hinkley C is about £20 billion, and the cost of decommissioning it at the end of its expected 60-year lifespan is £8 billion. For the investors to receive a guaranteed return of 9%, the plant has to generate a cashflow of £1.8 billion a year to cover the cost of capital. If the plant is able to operate at 90% capacity, this amounts to about £72 a MWh of electricity produced. Adding the recurrent costs for operation and maintenance and for the fuel cycle, about £20 a MWh, brings one to the so-called “strike price” of £92 a MWh, which the project has been guaranteed under the terms of its deal with the UK government.
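The arithmetic in this paragraph can be reproduced in a few lines. The sketch below follows the simplification used in the text: the annual cost of capital is taken as the investors’ 9% return on the £20 billion capital cost, ignoring repayment of principal and the decommissioning provision, and it is spread over the output of a 3.2 GW plant running at 90% capacity. The £20/MWh figure for operation, maintenance and fuel is the post’s rough estimate.

```python
HOURS_PER_YEAR = 8760


def capital_charge_per_mwh(capital_gbp: float, rate: float,
                           capacity_mw: float, capacity_factor: float) -> float:
    """Cost of capital in £/MWh, treating the annual charge as rate x capital."""
    annual_charge_gbp = capital_gbp * rate
    annual_output_mwh = capacity_mw * HOURS_PER_YEAR * capacity_factor
    return annual_charge_gbp / annual_output_mwh


RUNNING_COST_PER_MWH = 20.0  # rough O&M plus fuel cycle cost from the text

capital = capital_charge_per_mwh(20e9, 0.09, 3200, 0.9)
total = capital + RUNNING_COST_PER_MWH
print(f"capital: £{capital:.0f}/MWh, total: £{total:.0f}/MWh, "
      f"capital share: {capital / total:.0%}")
```

This gives about £71/MWh for capital and about £91/MWh in total, with capital making up roughly 78% of the price, in line with the rounded figures of £72, £92 and “nearly 80%” in the text.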

Two things come out of this calculation. Firstly, this price is substantially higher than the current wholesale price of electricity (about £62 per MWh, averaged over the last year). Secondly, nearly 80% of the price covers the cost of borrowing the capital – and 9% seems like quite a high rate at a time of historically low long-term interest rates.

EDF itself can borrow money on the bond market at 5%. At 5%, the cost of financing the capital comes to about £1.1 billion a year, which could be covered by an electricity price of a bit more than £60 a MWh. Why the difference? In effect, the project’s investors – the French state-owned company EDF, with a 2/3 stake, the rest being held by the Chinese state-owned company CGN – receive about £700 million a year to compensate them for the risks of the project.

Of course, the UK state itself could have borrowed the money to finance the project. Currently, the UK government can borrow at 1.75% fixed for 30 years. At 2%, the financing costs would come down from £1.8 billion a year to £0.7 billion a year, requiring a break-even electricity price of less than £50 a MWh. This would require the UK government to bear all the risk of the project, and that comes at a price, though it’s difficult to imagine that price being more than £1 billion a year.
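To see how sensitive the break-even price is to the cost of finance, the sketch below takes the annual financing costs quoted in the last two paragraphs (£1.8 billion at 9%, about £1.1 billion at EDF’s 5% bond rate, about £0.7 billion at a 2% government rate) as given, rather than re-deriving them, and adds the post’s rough £20/MWh running cost. The plant output is again assumed to be 3.2 GW at a 90% capacity factor.

```python
HOURS_PER_YEAR = 8760
ANNUAL_OUTPUT_MWH = 3200 * HOURS_PER_YEAR * 0.9  # 3.2 GW at 90% capacity factor
RUNNING_COST_PER_MWH = 20.0  # O&M plus fuel cycle, rough figure from the text

# Annual financing costs (£) as quoted in the text for each financing route.
financing_scenarios = {
    "investors at 9%": 1.8e9,
    "EDF bonds at 5%": 1.1e9,
    "UK government at 2%": 0.7e9,
}


def break_even_price(annual_financing_gbp: float) -> float:
    """Electricity price (£/MWh) that just covers financing plus running costs."""
    return annual_financing_gbp / ANNUAL_OUTPUT_MWH + RUNNING_COST_PER_MWH


for route, cost in financing_scenarios.items():
    print(f"{route}: £{break_even_price(cost):.0f}/MWh")

# Implied annual payment for bearing the project's risk, relative to EDF's own rate.
risk_premium = financing_scenarios["investors at 9%"] - financing_scenarios["EDF bonds at 5%"]
print(f"risk premium: £{risk_premium / 1e6:.0f} million a year")
```

The three routes come out at roughly £91, £64 and £48 per MWh, consistent with “a bit more than £60” and “less than £50” above, and the difference between the first two is the £700 million a year risk payment.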

If part of the problem of the high cost of nuclear energy comes from the high cost of capital baked into the sub-optimal way the Hinkley Point deal has been structured, it remains the case that the capital cost of the plant in the first place seems very high. The £20 billion cost of Hinkley Point C is indeed high, both in comparison to the cost of previous generations of nuclear power stations, and in comparison with similar nuclear power stations built recently elsewhere in the world.

Sizewell B cost £2 billion at 1987 prices for 1.2 GW of capacity – scaling that up to 3.2 GW and putting it in current money suggests that Hinkley C should cost about £12 billion.
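The scaling argument can be made explicit. A minimal sketch, assuming capital cost scales linearly with capacity (which ignores any economies of scale between a 1.2 GW and a 3.2 GW station): scaling £2 billion up to 3.2 GW gives about £5.3 billion in 1987 money, so the £12 billion figure implies that prices have risen by a factor of about 2.25 since 1987. The inflation index behind that factor is not specified in the text, so the code works backwards to the implied factor rather than assuming one.

```python
def scale_linearly(cost: float, from_gw: float, to_gw: float) -> float:
    """Scale a capital cost in proportion to capacity (no economies of scale)."""
    return cost * to_gw / from_gw


sizewell_b_1987_bn = 2.0
hinkley_scaled_1987_bn = scale_linearly(sizewell_b_1987_bn, 1.2, 3.2)

# Price-level factor (1987 -> today) implied by the post's £12 billion estimate.
implied_inflation_factor = 12.0 / hinkley_scaled_1987_bn

# Ratio of the actual Hinkley C capital cost to the Sizewell-based estimate.
cost_ratio = 20.0 / 12.0

print(f"scaled cost in 1987 money: £{hinkley_scaled_1987_bn:.1f} bn")
print(f"implied inflation factor: {implied_inflation_factor:.2f}")
print(f"Hinkley C vs Sizewell-based estimate: {cost_ratio:.2f}x")
```

On this basis Hinkley C costs about two-thirds more than a straightforward scale-up of Sizewell B would suggest.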

Some of the additional cost can undoubtedly be ascribed to the new safety features added to the EPR. The EPR is an evolution of the original pressurised water reactor design; all pressurised water reactors – indeed all light water reactors (which use ordinary, non-deuterated, water as both moderator and coolant) – are susceptible to “loss of coolant accidents”. In such an accident, if the circulating water is lost, then even though the nuclear reaction can be reliably shut down, the residual heat from the radioactive material in the core can be great enough to melt the reactor core, and steam can react with metals to create explosive hydrogen.

The experience of loss of coolant accidents at Three Mile Island and (more seriously) Fukushima has prompted new so-called generation III or gen III+ reactors to incorporate a variety of new features to mitigate potential loss-of-coolant accidents, including methods for passive backup cooling systems and more layers of containment. The experience of 9/11 has also prompted designs to consider the effect of a deliberate aircraft crash into the building. All these extra measures cost money.

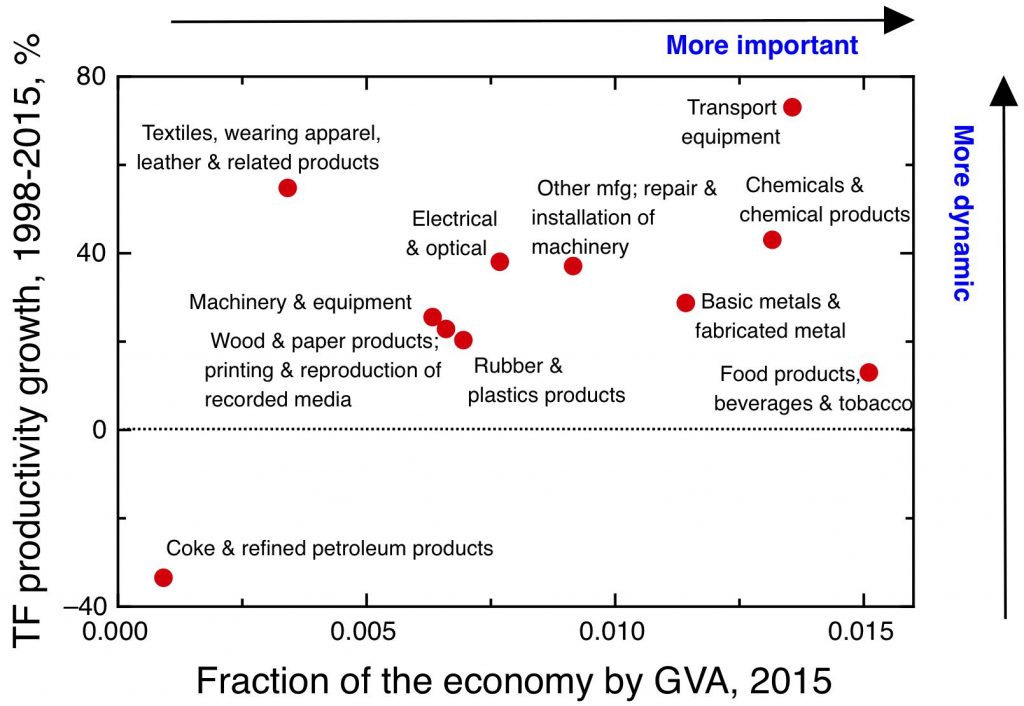

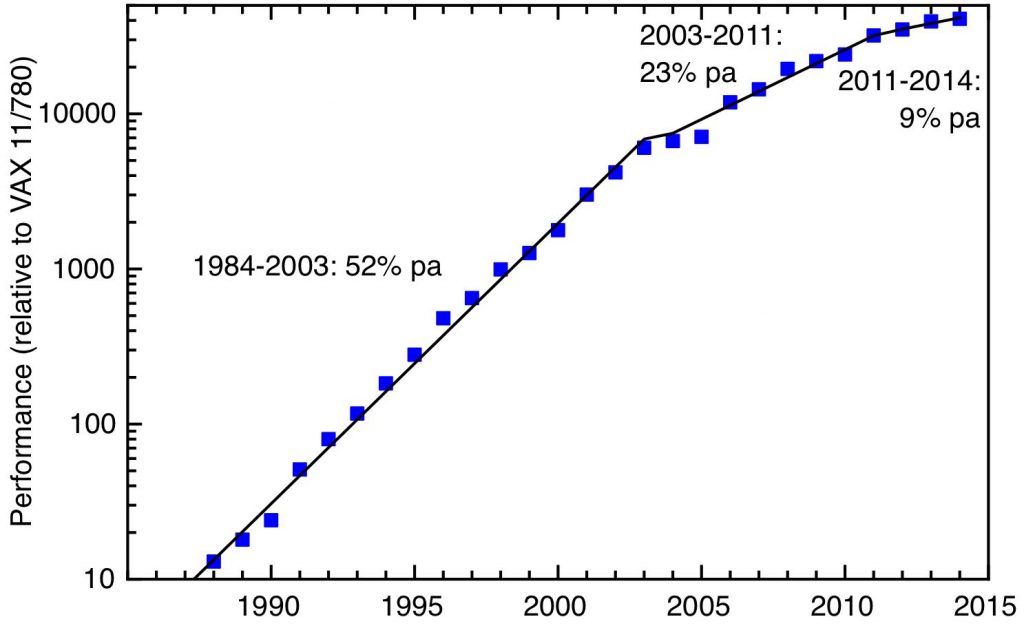

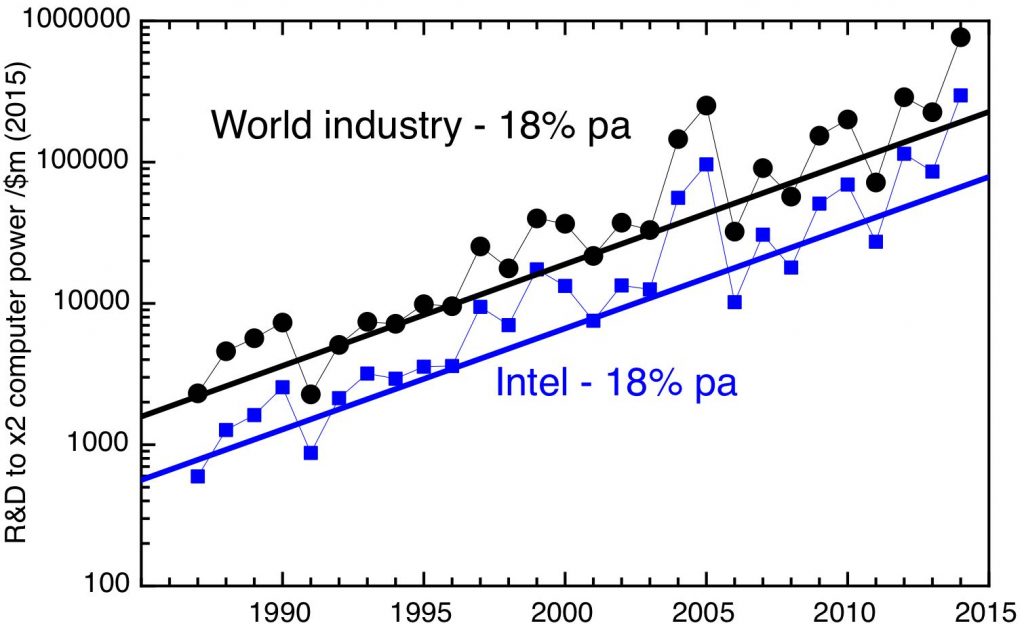

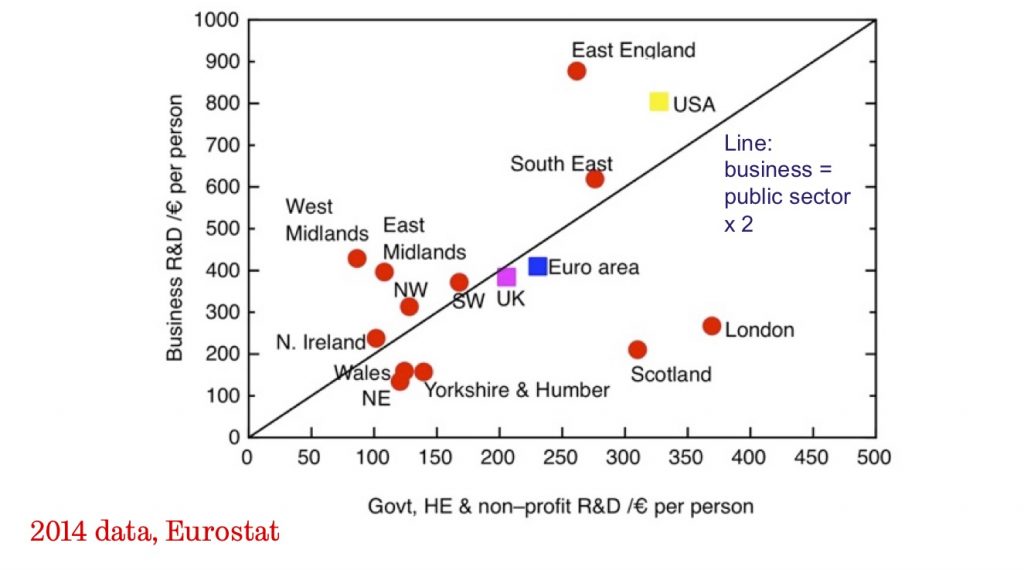

But even nuclear power plants of the same design cost significantly more to build in Europe and the USA than they do in China or Korea – more than twice as much, in fact. Part of this is undoubtedly due to higher labour costs (including both construction workers and engineers and other professionals). But there are factors leading to these other countries’ lower costs that can be emulated in the UK – they arise from the fact that both China and Korea have systematically got better at building reactors by building a sequence of them, and capturing the lessons learnt from successive builds.

In the UK, by contrast, no nuclear power station has been built since 1995, so in terms of experience we’re starting from scratch. And our programme of nuclear new build could hardly have been designed in a way that made it more difficult to capture these benefits of learning, with four quite different designs being built by four different sets of contractors.

We can learn the lessons of previous experiences of nuclear builds. The previous EPR installations in Olkiluoto, Finland, and Flamanville, France – both of which have ended up hugely over-budget and late – indicate what mistakes we should avoid, while the Korean programme – which is to date the only substantial nuclear build-out to reduce capital costs significantly over the course of the programme – offers some more positive lessons. To summarise –

The last point supports the more radical idea of making the entire reactor in a factory rather than on-site. This has the advantage of ensuring that all the benefits of learning-by-doing are fully captured, and allows much closer control over quality, while making it easier to pursue the kind of process innovation that can significantly reduce manufacturing costs.

The downside is that this kind of modular manufacturing is only possible for reactors on a considerably smaller scale than the >1 GW units that conventional programmes install – these “Small Modular Reactors”, or SMRs, will be in the range of tens to hundreds of MW. The driving force for increasing the scale of reactor units has been to capture economies of scale in running costs and fuel efficiency. SMRs will sacrifice some of these economies of scale, in the hope that economies of learning will drive down capital costs enough to make up the difference. Given that, for current large-scale designs, the total cost of electricity is dominated by the cost of capital, this argument is at least plausible.
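The trade-off in the last paragraph can be illustrated with two textbook cost models, both assumptions rather than anything taken from this post: Wright’s law, under which each doubling of the cumulative number of units built cuts unit cost by a fixed fraction (the learning rate), and a power-law scaling of capital cost with unit size (the so-called six-tenths rule, exponent 0.6). The sketch asks how many small units must be built before learning offsets the per-kW scale penalty of a small unit relative to a large one. The 10% learning rate and 0.6 exponent are hypothetical illustrative values; the unit sizes are 400 MW (the Rolls-Royce design mentioned later in this post) and 1600 MW (one of the two EPR units at Hinkley C). The large unit’s cost is held fixed, which is itself a simplification.

```python
import math
from typing import Optional


def wright_cost_multiplier(n: int, learning_rate: float) -> float:
    """Cost of the n-th unit relative to the first, under Wright's law.

    learning_rate is the fractional cost reduction per doubling of
    cumulative units built (0.1 means each doubling cuts cost by 10%).
    """
    exponent = math.log2(1.0 - learning_rate)  # negative for 0 < learning_rate < 1
    return n ** exponent


def scale_penalty(small_mw: float, large_mw: float, scale_exponent: float) -> float:
    """Per-kW cost of a small unit relative to a large one, if cost ~ size**scale_exponent."""
    return (small_mw / large_mw) ** (scale_exponent - 1.0)


def units_to_offset(penalty: float, learning_rate: float,
                    max_units: int = 10_000) -> Optional[int]:
    """Smallest n at which learning has cut unit cost by at least the scale penalty."""
    for n in range(1, max_units + 1):
        if wright_cost_multiplier(n, learning_rate) <= 1.0 / penalty:
            return n
    return None  # learning never offsets the penalty within max_units


# Hypothetical inputs: 400 MW small units vs 1600 MW large units,
# scale exponent 0.6, 10% learning rate per doubling.
penalty = scale_penalty(400, 1600, 0.6)
print(f"per-kW scale penalty: {penalty:.2f}x")
print(f"small units needed to offset it: {units_to_offset(penalty, 0.10)}")
```

Under these particular assumptions a 400 MW unit starts out about 1.7 times as expensive per kW, and it takes around 39 units before learning closes the gap; a faster learning rate or a weaker scale penalty shortens that run considerably, which is why the economics can only really be settled by building.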

What the UK should do to reboot its nuclear new build programme

If the UK is to stand any chance at all of reducing its net carbon emissions close to zero by the middle of the century, it needs both to accelerate offshore wind and solar, and get its nuclear new build programme back on track.

It was always a very bad idea to try and implement a nuclear new build programme with more than one reactor type. Now that the Hinkley Point C project is underway, our choice of large reactor design has in effect been made – it is the Areva EPR.

The EPR is undoubtedly a complex and expensive design, but I don’t think there is any evidence that it is fundamentally different in this from other Gen III+ designs. Recent experience of building the rival Westinghouse AP1000 design in the USA doesn’t seem to be any more encouraging. On the other hand, the suggestion of some critics that the EPR is fundamentally “unbuildable” has clearly been falsified by the successful completion of an EPR unit in Taishan, China – this was connected to the grid in December last year. The successful building of both EPRs and AP1000s in China suggests instead that the difficulties seen in Europe and the USA arise from systematic problems of the kind discussed in the last section, rather than from a fundamental flaw in any particular reactor design.

The UK should therefore do everything to accelerate the Sizewell C project, where two more EPRs are scheduled to be built. This needs to happen on a timescale that ensures continuity between the construction of Hinkley C and Sizewell C, to retain the skills and supply chains that are developed and to make sure all the lessons learnt in the Hinkley build are acted on. And it should be financed in a way that’s less insanely expensive than the arrangements for Hinkley Point C, accepting the inevitability that the UK government will need to take a considerable stake in the project.

In an ideal world, every other large nuclear reactor built in the UK in the current programme should also be an EPR. But a previous government apparently made a commitment to the Chinese state-owned enterprise CGN that, in return for taking a financial stake in the Hinkley project, it should be allowed to build a nuclear power station at Bradwell, in Essex, using the Chinese CGN HPR1000 design. I think it was a bad idea on principle to allow a foreign government to have such close control of critical national infrastructure, but if this decision has to stand, one can find silver linings. We should respect and learn from the real achievements of the Chinese in developing their own civil nuclear programme. If the primary motivation of CGN in wanting to build an HPR1000 is to improve its export potential by demonstrating its compliance with the UK’s independent and rigorous nuclear regulations, then that goal should be supported.

We should speed up replacement plans to develop the other three sites – Wylfa, Oldbury and Moorside. The Wylfa project was the furthest advanced, and a replacement scheme based on installing two further EPR units there should be put together to begin shortly after the Sizewell C project, designed explicitly to drive further savings in capital costs by maximising learning by doing.

The EPR is not a perfect technology, but we can’t afford to wait for a better one – the urgency of climate change means that we have to start building right now. But that doesn’t mean we should accept that no further technological progress is possible. We have to be clear about the timescales, though. We need a technology that is capable of deployment right now – and for all the reasons given above, that should be the EPR – but we need to be pursuing future technologies both at the demonstration stage, and at the earlier stages of research and development. Technologies ready for demonstration now might be deployed in the 2030s, while anything still at the R&D stage now is realistically unlikely to be ready for deployment until 2040 or so.

The key candidate for a demonstration technology is a light water small modular reactor. The UK government has been toying with the idea of small modular reactors since 2015, and now a consortium led by Rolls-Royce has developed a design for a modular 400 MW pressurised water reactor, with an ambition to enter the generic design approval process in 2019 and to complete a first-of-a-kind installation by 2030.

As I discussed above, I think the arguments for small modular reactors are at the very least plausible, but we won’t know for sure how the economics work out until we try to build one. Here the government needs to play the important role of lead customer, commissioning an experimental installation (perhaps at Moorside?).

The first light water power reactors came into operation in 1960, and current designs are direct descendants of these early precursors. Light water reactors have a number of sub-optimal features that are inherent to the basic design, so this is an instructive example of technological lock-in keeping us on a less-than-ideal technological trajectory.

There are plenty of ideas for fission reactors that operate on different principles – high temperature gas cooled reactors, liquid salt cooled reactors, molten salt fuelled reactors, sodium fast reactors, to give just a few examples. These concepts have many potential advantages over the dominant light water reactor paradigm. Some should be intrinsically safer than light water reactors, relying less on active safety systems and more on an intrinsically fail-safe design. Many promise better nuclear fuel economy, including the possibility of breeding fissile fuel from non-fissile elements such as thorium. Most would operate at higher temperatures, allowing higher conversion efficiencies and the possibility of using the heat directly to drive industrial processes such as the production of hydrogen.

But these concepts are as yet undeveloped, and it will take many years and much money to convert them into working demonstrators. What should the UK’s role in this R&D effort be? I think we need to accept the fact that our nuclear fission R&D effort has been run down so far that it is not realistic to imagine that the UK can operate independently – instead we should contribute to international collaborations. How best to do that is a big subject beyond the scope of this post.

There are no easy options left

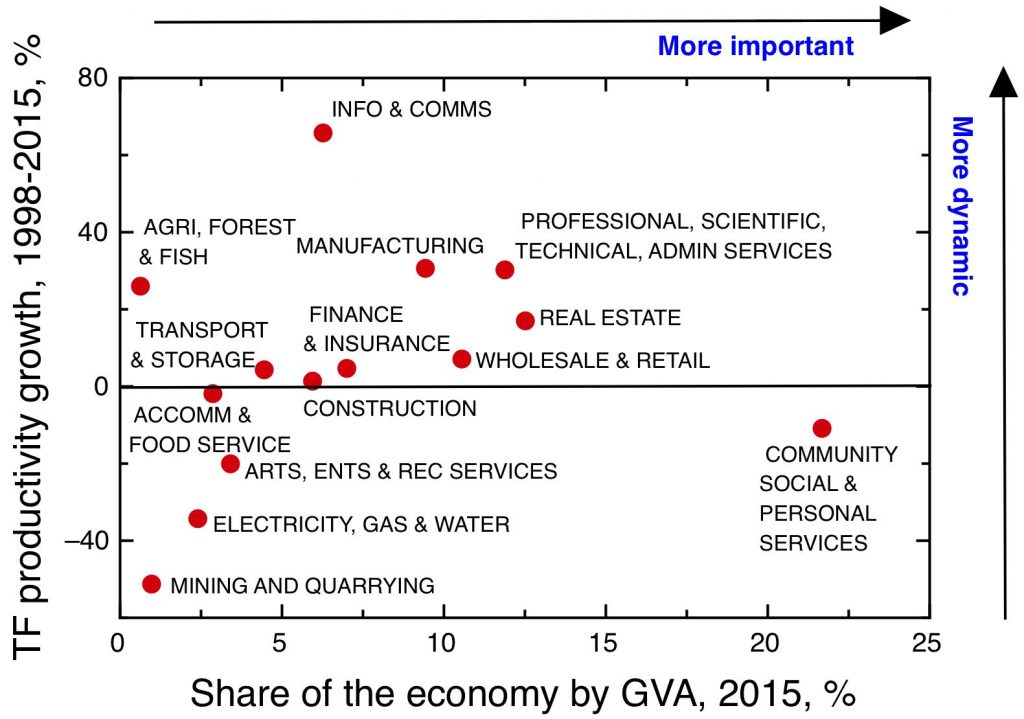

Climate change is an emergency, yet I don’t think enough people understand how difficult the necessary response – deep decarbonisation of our energy systems – will be. The UK has achieved some success in lowering the carbon intensity of its economy. Part of this has come from, in effect, offshoring our heavy industry. More real gains have come from switching electricity generation from coal to gas, while renewables – particularly offshore wind and solar – have seen impressive growth.

But this has been the easy part. The transition from coal to gas is almost complete, and the ambitious planned build-out of offshore wind to 2030 will have occupied a significant fraction of the available shallow water sites. Completing the decarbonisation of our electricity sector without nuclear new build will be very difficult – but even if that is achieved, it doesn’t even bring us halfway to the goal of decarbonising our energy economy. 60% of our current energy consumption comes from directly burning oil (for cars and trucks) and gas (for industry and heating our homes). Much of this will need to be replaced by low-carbon energy, meaning that our electricity sector will have to expand substantially.

Other alternative low-carbon energy sources are unpalatable or unproven. Carbon capture and storage has never yet been deployed at scale, and represents a pure overhead on existing power generation technologies, needing both major new infrastructure to be built and increased running costs. Scenarios that keep global warming below 2° C need so-called “negative emissions technologies”, which don’t yet exist, and make no economic sense without a degree of worldwide cooperation that seems difficult to imagine at the moment.

I understand why people are opposed to nuclear power – civil nuclear power has a troubled history, which reflects its roots in the military technologies of nuclear weapons, as I’ve discussed before. But time is running out, and the necessary transition to a zero carbon energy economy leaves us with no easy options. We must accelerate the deployment of renewable energies like wind and solar, but at the same time move beyond nuclear’s troubled history and reboot our nuclear new build programme.

Notes on sources

For an excellent overall summary of the mess that is the UK’s current new build programme, see this piece by energy economist Dieter Helm. For the specific shortcomings of the Hinkley Point C deal, see this National Audit Office report (and, at the risk of saying “I told you so”, this is what I wrote 5 years ago: The UK’s nuclear new build: too expensive, too late). For the lessons to be learnt from previous nuclear programmes, see Nuclear Lessons Learnt, from the Royal Academy of Engineering. This MIT report – The Future of Nuclear in a carbon constrained world – has much useful to say about the economics of nuclear power now and about the prospects for new reactor types. For the need for negative emissions technologies in scenarios that keep global warming below 2° C, see Gasser et al.