It’s excellent news that the UK government has accepted the Climate Change Committee’s recommendation to legislate for a goal of achieving net zero greenhouse gas emissions by 2050. As always, though, it’s not enough to will the end without attending to the means. My earlier blogpost stressed how hard this goal is going to be to reach in practice. The Climate Change Committee does provide scenarios for achieving net zero, and the bad news is that the central 2050 scenario relies to a huge extent on carbon capture and storage. In other words, it assumes that we will still be burning fossil fuels, but that we will mitigate the effect of this continued dependence by capturing the carbon dioxide released when gas is burnt and storing it underground indefinitely. Some use of carbon capture and storage is probably inevitable, but in my view such large-scale reliance on it is, politically and economically, a bad idea.

In the central 2050 net zero scenario, 645 TWh of electricity is generated a year, more than double the 2017 figure of 300 TWh, reflecting the electrification of sectors like transport. The basic strategy for deep decarbonisation has to be, as a first approximation, to electrify everything while simultaneously decarbonising power generation: so far, so good.

But even with aggressive expansion of renewable electricity, this scenario still calls for 150 TWh to be generated from fossil fuels, in gas power stations. To make this fossil-fuelled generation zero carbon, the carbon dioxide released when the gas is burnt has to be captured at the power stations and pumped through a specially built network of pipelines to disused gas fields in the North Sea, where it is injected underground for indefinite storage. This is certainly technically feasible. To produce 150 TWh of electricity from gas, around 176 million tonnes of carbon dioxide a year will be produced; for comparison, about 42 million tonnes of natural gas a year are currently taken out of the North Sea reservoirs, so reversing the process at four times the scale is undoubtedly doable.

In fact, more carbon capture and storage will be needed than the 176 million tonnes from the power sector, because the net zero greenhouse gas plan relies on it in four distinct ways. As well as allowing us to carry on burning gas to make electricity, the plan envisages capturing carbon dioxide from biomass-fired power stations too. Because the biomass absorbed carbon dioxide from the atmosphere as it grew, this should lead to a net lowering of the amount of carbon dioxide in the atmosphere, making it a so-called “negative emissions technology”. The idea is that these “negative emissions” offset the remaining positive emissions from hard-to-decarbonise sectors like aviation, so that overall emissions are net zero.

Meanwhile, the plan envisages the large-scale conversion of natural gas to hydrogen, to replace natural gas in industry and domestic heating. One molecule of methane produces one molecule of carbon dioxide, which needs to be captured at the hydrogen plant and pumped away to the North Sea reservoirs, and two molecules of hydrogen from its own hydrogen atoms (steam reforming extracts two more from the water it uses). The hydrogen can then be burnt in domestic boilers without carbon emissions. Finally, some industrial processes that produce carbon dioxide will remain, notably steel making and cement production, and carbon capture and storage will be needed to make these processes zero carbon. These latter uses are probably inevitable.
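
The carbon bookkeeping of this hydrogen route can be sketched with ideal stoichiometry. The sketch below assumes the hydrogen is made by steam methane reforming followed by the water-gas shift reaction, whose overall reaction is CH4 + 2H2O → CO2 + 4H2 (half the hydrogen comes from the steam). The function name is hypothetical, and real plants emit more than this, because the reforming reaction needs heat and extra gas is usually burnt to supply it.

```python
# Molar masses in g/mol (rounded standard values)
M_H2 = 2.016
M_CH4 = 16.043
M_CO2 = 44.009


def smr_inputs_and_co2_per_kg_h2() -> tuple[float, float]:
    """Ideal-stoichiometry methane use and CO2 output per kg of hydrogen.

    Assumes the overall reaction CH4 + 2 H2O -> CO2 + 4 H2
    (steam methane reforming plus water-gas shift), with no
    extra gas burnt for process heat.
    Returns (kg of CH4 consumed, kg of CO2 to be captured).
    """
    mol_h2 = 1000.0 / M_H2   # moles of H2 in 1 kg
    mol_ch4 = mol_h2 / 4.0   # four H2 per CH4
    mol_co2 = mol_ch4        # one CO2 per CH4
    return mol_ch4 * M_CH4 / 1000.0, mol_co2 * M_CO2 / 1000.0


ch4_kg, co2_kg = smr_inputs_and_co2_per_kg_h2()
print(f"per kg H2: {ch4_kg:.2f} kg CH4 in, {co2_kg:.2f} kg CO2 to capture")
```

Even in this idealised case, each kilogram of hydrogen comes with roughly 5.5 kg of carbon dioxide that has to be captured and stored.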

But I want to focus on the principal envisaged use of carbon capture and storage: as a way of avoiding the need to move to entirely low carbon electricity, generated by renewables like wind and solar and by nuclear power. We need to take a global perspective here: if the UK achieves net zero greenhouse gas status by 2050 but the rest of the world carries on as normal, that helps no-one.

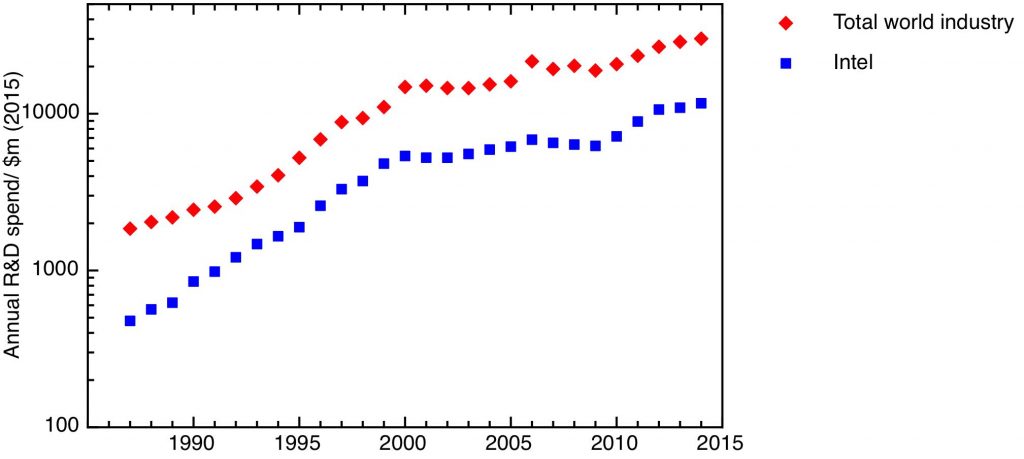

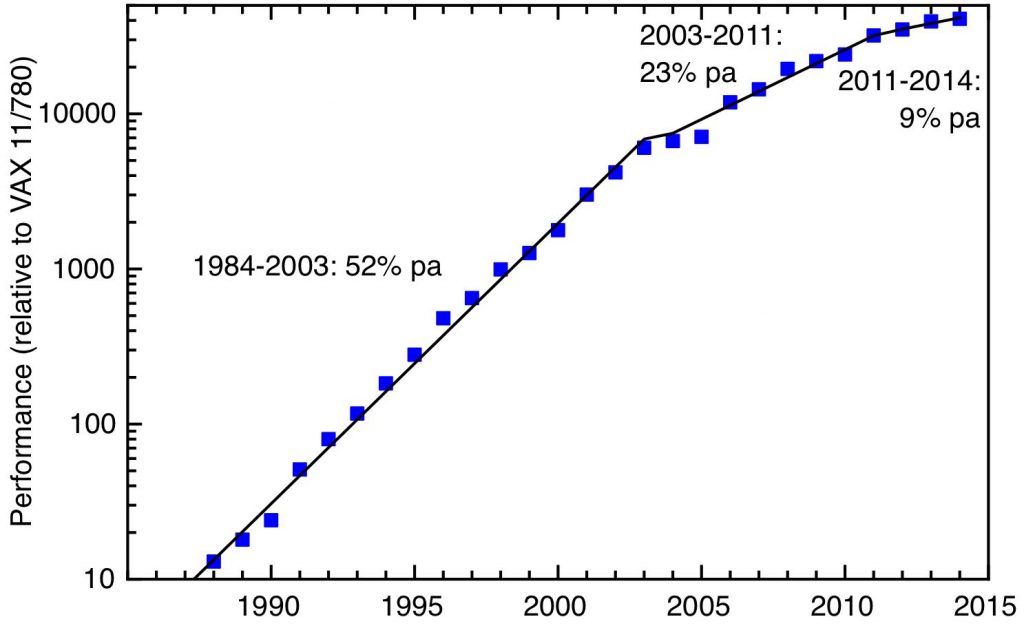

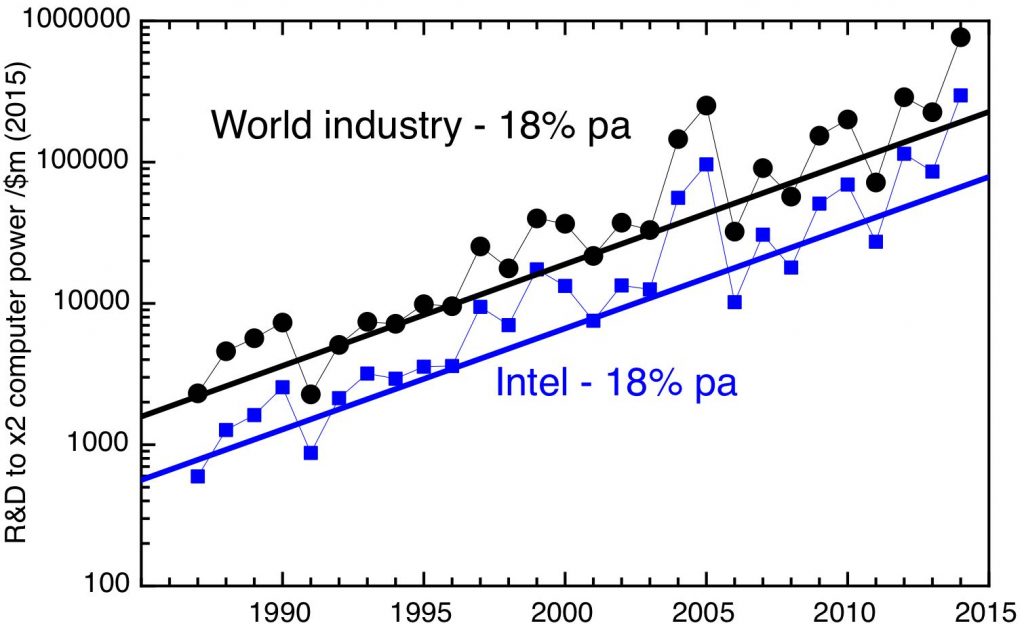

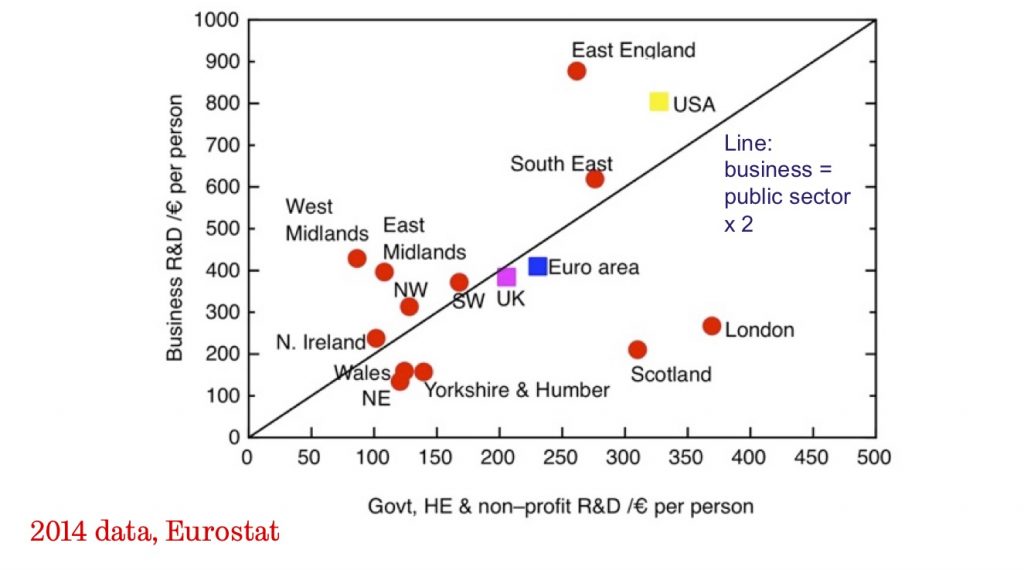

In my opinion, the only way we can be sure that the whole world will decarbonise is if low carbon energy (primarily wind, solar and nuclear) comes in at a lower cost than fossil fuels, without subsidies or other intervention. The cost of these technologies can certainly come down, but for this to happen we need both to deploy them in their current form and to do research and development to improve them. We need both the “learning by doing” that comes from implementation and the cost reductions that will come from R&D, whether that’s making incremental process improvements to the technologies as they currently stand or developing radically new and better versions of them.

But we will never achieve these technological improvements and corresponding cost reductions for carbon capture and storage.

It’s always tempting fate to say “never” about the potential of a new technology, but there’s one exception: when a putative new technology would need to break one of the laws of thermodynamics. No-one has ever come out ahead betting against these.

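Carbon capture and storage will always need additional expenditure over and above the cost of an unabated gas power station. It needs both:

- extra capital spending, on the capture plant itself and on the pipelines and injection facilities that carry the carbon dioxide to its storage site;
- extra running costs, above all the energy needed to separate out the carbon dioxide, which uses up part of the power station’s output.
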
The latter is an inescapable consequence of the second law of thermodynamics: carbon capture will always need a separation step. Either one takes air and separates it into its components, extracting pure oxygen in which the gas can be burnt to produce a waste stream consisting only of carbon dioxide and water, or one takes the exhaust from burning the gas in air and pulls the carbon dioxide out of it. Either way, a mixture of gases has to be separated into its components, and that always takes an energy input, because separating a mixture reduces its entropy.
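
The size of this unavoidable energy input can be estimated from the entropy of mixing. The sketch below assumes an ideal gas mixture treated as just two components (CO2 and everything else), separated completely and reversibly at constant temperature; for such a mixture the minimum work per mole of mixture is −RT[x ln x + (1−x) ln(1−x)], where x is the CO2 mole fraction. The 4% CO2 figure is an assumed value, roughly typical of gas turbine exhaust, the 12% comparison value is arbitrary, and the function names are hypothetical. Real capture processes need considerably more than this minimum, but no process can need less.

```python
import math

R = 8.314462618    # molar gas constant, J/(mol K)
M_CO2 = 44.01e-3   # molar mass of CO2, kg/mol


def min_work_per_mol_co2(x_co2: float, temperature_k: float = 298.15) -> float:
    """Minimum work (J) per mole of CO2 to split an ideal binary gas mixture
    completely into pure CO2 and a pure remainder, reversibly and isothermally."""
    if not 0.0 < x_co2 < 1.0:
        raise ValueError("x_co2 must lie strictly between 0 and 1")
    x = x_co2
    # Work per mole of mixture = T times the entropy of mixing per mole
    work_per_mol_mixture = -R * temperature_k * (
        x * math.log(x) + (1 - x) * math.log(1 - x)
    )
    # One mole of mixture contains x moles of CO2
    return work_per_mol_mixture / x


def min_work_per_tonne_co2(x_co2: float, temperature_k: float = 298.15) -> float:
    """The same minimum work, expressed in J per tonne of CO2 separated."""
    mol_per_tonne = 1000.0 / M_CO2
    return min_work_per_mol_co2(x_co2, temperature_k) * mol_per_tonne


# A dilute exhaust (assumed 4% CO2) versus a more concentrated stream (12%)
for x in (0.04, 0.12):
    mj = min_work_per_tonne_co2(x) / 1e6
    print(f"x_CO2 = {x:.2f}: at least {mj:.0f} MJ per tonne of CO2")
```

Under these assumptions the minimum is of the order of 200 MJ per tonne of carbon dioxide, and it rises as the carbon dioxide becomes more dilute. The exact figure matters less than the fact that it can never be zero.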

The key point, then, is that no matter how much better our technology gets, power produced by a gas power station with carbon capture and storage will always be more expensive than power from unabated gas. The capital cost of the plant will be greater, and so will the revenue cost per kWh. No amount of technological progress can ever change this.
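
The argument can be made concrete with a toy cost comparison. Every number below (gas price, capital and running costs, efficiencies, the rate of improvement and the floor on the efficiency penalty) is a hypothetical illustration, not a figure from the Climate Change Committee, and the class and function names are invented for the sketch. It lets “technological progress” halve the extra costs of capture in each round, while the efficiency penalty cannot fall below a floor standing in for the thermodynamic minimum.

```python
from dataclasses import dataclass

GAS_PRICE = 20.0      # £ per MWh of gas burnt (hypothetical)
PENALTY_FLOOR = 0.01  # smallest possible efficiency loss from capture (hypothetical)


@dataclass
class GasPlant:
    capital_per_mwh: float  # annualised capital cost per MWh generated, £ (hypothetical)
    opex_per_mwh: float     # non-fuel running cost per MWh generated, £ (hypothetical)
    efficiency: float       # electricity out / gas energy in

    def cost_per_mwh(self, gas_price: float) -> float:
        """Cost of one MWh of electricity: capital + running costs + fuel."""
        return self.capital_per_mwh + self.opex_per_mwh + gas_price / self.efficiency


UNABATED = GasPlant(capital_per_mwh=10.0, opex_per_mwh=5.0, efficiency=0.55)


def ccs_premium_over_rounds(rounds: int = 6) -> list[float]:
    """Extra cost per MWh of a capture plant after successive rounds of improvement."""
    extra_capital, extra_opex, penalty = 20.0, 10.0, 0.08  # starting values (hypothetical)
    premiums = []
    for _ in range(rounds):
        ccs = GasPlant(UNABATED.capital_per_mwh + extra_capital,
                       UNABATED.opex_per_mwh + extra_opex,
                       UNABATED.efficiency - penalty)
        premiums.append(ccs.cost_per_mwh(GAS_PRICE) - UNABATED.cost_per_mwh(GAS_PRICE))
        # Progress halves the extra costs, but the efficiency penalty
        # can never drop below its floor
        extra_capital /= 2
        extra_opex /= 2
        penalty = max(penalty / 2, PENALTY_FLOOR)
    return premiums


for i, premium in enumerate(ccs_premium_over_rounds()):
    print(f"round {i}: capture adds £{premium:.2f} per MWh")
```

In this toy model the premium shrinks with each round but never reaches zero; however many rounds were run, it would level off at the extra fuel cost implied by the efficiency floor.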

So there can only be a business case for carbon capture and storage if governments intervene significantly in the market, either through a subsidy or through a carbon tax. Politically, this is an inherently unstable situation. Even after the capital cost of the carbon capture infrastructure has been written off, the plant operator will at any time be able to generate electricity more cheaply by releasing the carbon dioxide produced when the gas is burnt. Internationally, this creates a massive free rider problem. Any country will be able to gain a competitive advantage at any time by turning its carbon capture off, so the economics can only work under a fully enforced international agreement to impose carbon taxes at a high enough level. I’m not confident that such an agreement, which would have to cover every country making a significant contribution to carbon emissions to be effective, can be relied on to hold for many decades.
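
The operator’s incentive can be written as a simple marginal comparison. Once the capture plant has been paid for, venting saves its running cost (the energy penalty, solvents, transport and storage fees) but, if there is a carbon tax, incurs that tax on the carbon dioxide that would otherwise have been stored. The sketch below assumes a flat per-tonne carbon price and uses hypothetical figures; the function names are illustrative only.

```python
def break_even_carbon_price(capture_cost_per_mwh: float, co2_stored_per_mwh: float) -> float:
    """Carbon price (£ per tonne) at which venting and capturing cost the operator the same."""
    return capture_cost_per_mwh / co2_stored_per_mwh


def operator_keeps_capturing(carbon_price: float,
                             capture_cost_per_mwh: float,
                             co2_stored_per_mwh: float) -> bool:
    """Capital costs are sunk, so only marginal costs matter: venting saves the
    capture running cost but pays the carbon price on the CO2 released instead."""
    cost_of_venting = carbon_price * co2_stored_per_mwh
    return cost_of_venting >= capture_cost_per_mwh


CAPTURE_COST = 25.0  # £ per MWh of running the capture plant (hypothetical)
CO2_STORED = 0.35    # tonnes of CO2 stored per MWh generated (hypothetical)

print(f"break-even carbon price: £{break_even_carbon_price(CAPTURE_COST, CO2_STORED):.0f}/t")
for price in (0.0, 50.0, 100.0):
    print(price, operator_keeps_capturing(price, CAPTURE_COST, CO2_STORED))
```

With no carbon price at all, or one below the break-even level, the cheapest option is always to vent; that is the free rider temptation in miniature.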

I do accept that some carbon capture and storage probably is essential, to capture emissions from cement and steel production. But carbon capture and storage in the power sector is a climate change solution for a world that no longer exists: a world of multilateral agreements and transnational economic rationality. Any scenario that relies on it so heavily is just a politically very risky way of persuading ourselves that fossil-fuelled business as usual is sustainable. It postpones the necessary large-scale deployment, and improvement through R&D, of genuine low carbon energy technologies: renewables like wind and solar, and nuclear.