In the first part of this series attempting to sum up the issues facing UK science and innovation policy, I tried to set the context by laying out the wider challenges the UK government faces, asking what problems we need our science and innovation system to contribute to solving.

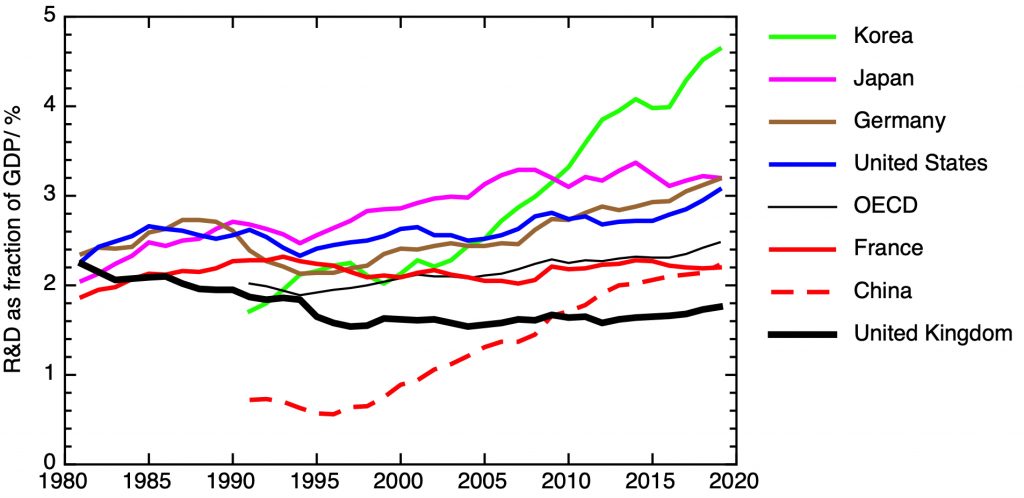

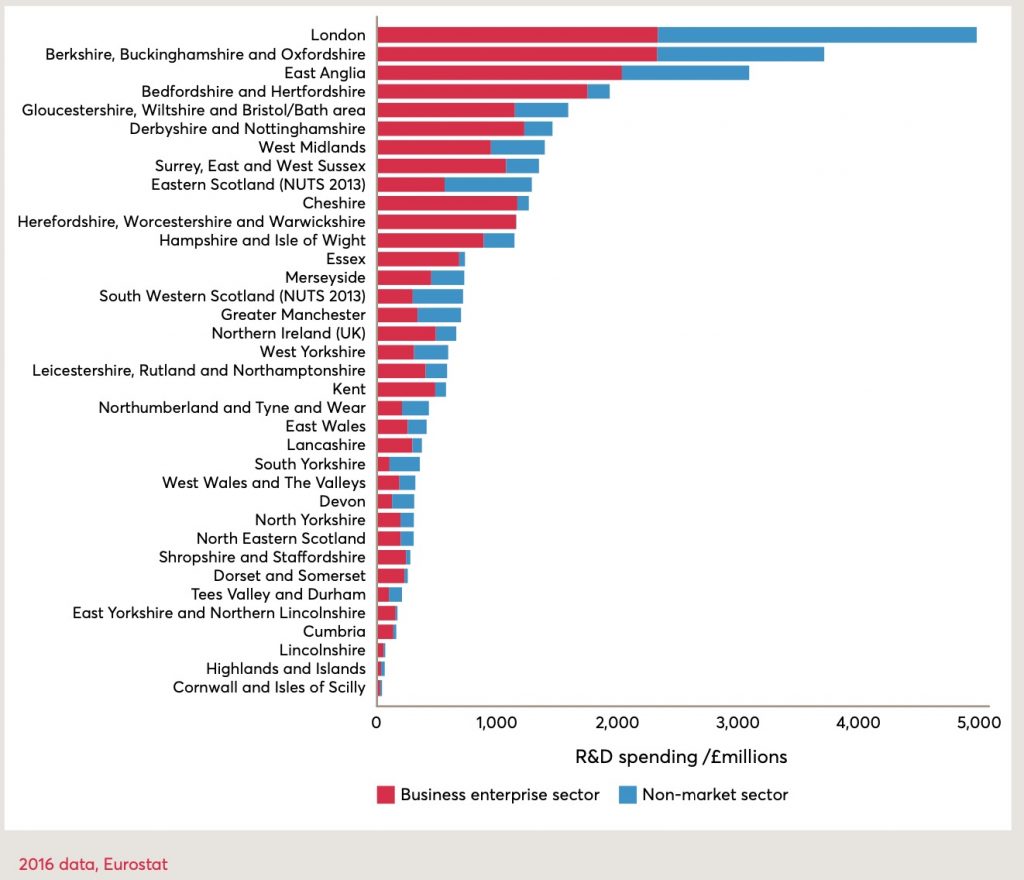

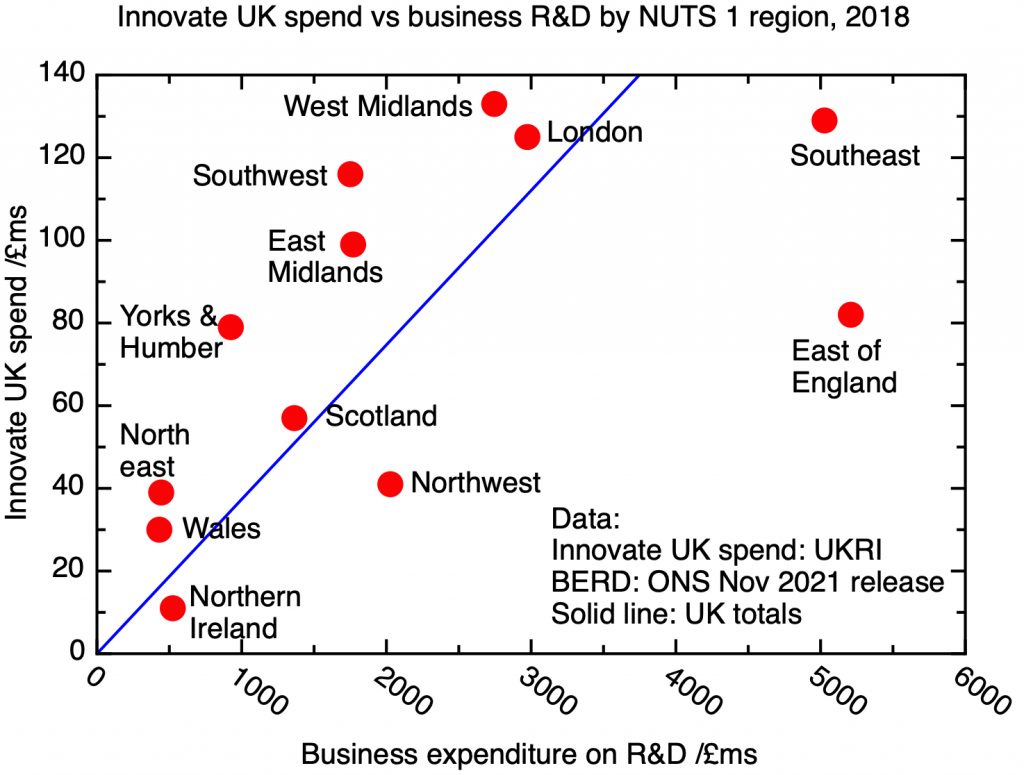

In the second part of the series, I posed some of the big questions about how the UK’s science and innovation system works, considering how R&D intensive the UK economy should be, the balance between basic and applied research, and the geographical distribution of R&D.

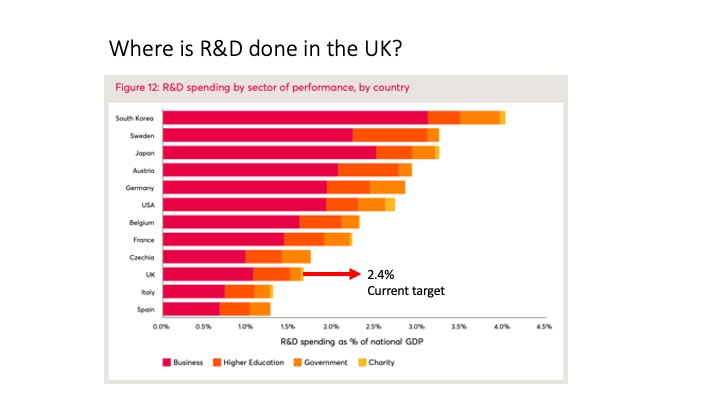

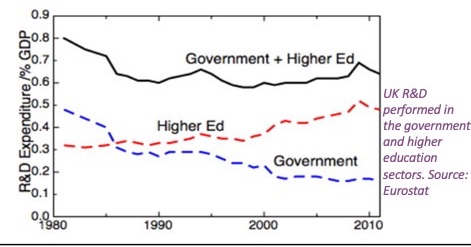

In the third part, I discussed the institutional landscape of R&D in the UK, looking at where R&D gets done.

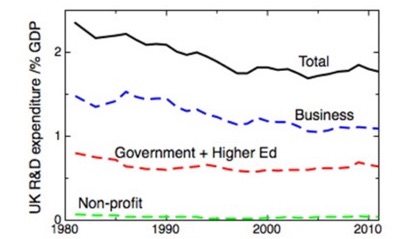

In this part, looking at the funding system, I consider who pays for R&D, and how decisions are made about what R&D to do.

4.1. The broad flows of research funding in the UK

The flow diagram I reproduced in my last post summarises the overall way in which R&D is paid for in the UK. In 2019, total spending on R&D was £38.5 billion. The largest single contribution to this was from business, which spent £20.7 billion, mostly on R&D carried out in the business sector.

The government spent £10.4 billion, including £1.8 billion to support R&D in industry, £6 billion on university R&D, and £2.3 billion in its own laboratories.

Overseas sources of funding accounted for £5.5 billion. £1.5 billion of this overseas money went into universities; I estimate that around half of that came from the EU (and is thus properly thought of as originally coming from the UK government), with the rest from overseas companies, charities and other governments.

Finally, £1.8 billion was spent by the non-profit sector, dominated by the Wellcome Trust and medical research charities such as CRUK.
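As a quick check on the figures above, the following Python sketch adds up the four funding sources quoted in this section and expresses each as a share of the reported total. The variable names are mine, and the comparison with the £38.5 billion total assumes the four sources are meant to be roughly exhaustive; the small shortfall is probably down to rounding or to minor sources not itemised here.

```python
# Funding sources for UK R&D in 2019, in £ billion, as quoted in the text above.
funders = {
    "Business": 20.7,
    "Government": 10.4,
    "Overseas": 5.5,
    "Non-profit": 1.8,
}
reported_total = 38.5  # total R&D spending quoted in the text

# Sum of the itemised sources; assumed (not stated in the text) to be
# roughly exhaustive, so it should land close to the reported total.
itemised_total = sum(funders.values())

# Each funder's share of the reported total.
shares = {name: amount / reported_total for name, amount in funders.items()}

for name, share in shares.items():
    print(f"{name}: {share:.0%}")
print(f"Itemised total: £{itemised_total:.1f}bn of £{reported_total}bn reported")
```

On these figures business accounts for a little over half of the total and government for a little over a quarter.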

It’s worth adding two glosses to these official figures. Firstly, businesses receive a substantial subsidy for their R&D spending through the mechanism of R&D tax credits. These were worth £7.4 billion in FY 19/20. Although there isn’t an exact alignment between the R&D tax credit statistics and the Business R&D statistics, we can estimate, putting together the cost of tax credits and direct government funding of industry research, that roughly 35% of business spending on R&D is ultimately paid for by the state.

Secondly, as we discussed in the last section, research carried out in universities isn’t fully funded by the government, but in effect is cross-subsidised by other activities, especially teaching overseas students. It’s difficult to quantify this additional contribution to university-based research precisely, but it’s likely to be of the order of an additional £1 billion across the whole HE sector.

4.2. Who should decide what science is done?

Science funding is about making choices and deciding priorities. Who, in principle, should be making these decisions?

(a) Scientists. One view is that it is only scientists who are in a position to judge the quality of the work of other scientists, and to make informed choices about what science should be done. This view underlies the prevalence of “peer review” as a mechanism for judging the validity and quality of scientific publications, and the practical procedures by which science project proposals are judged and ranked by science funding agencies. Typically, a project proposal will be sent to referees from the science community, who will make a critique of the proposal, and a panel will rank a set of proposals by reference to these referees’ reports.

From a practical point of view, the argument is that it is only expert, practising scientists who are in a position to assess the novelty of a proposal in the context of the existing body of scientific knowledge, and who can make a judgement of a proposal’s technical feasibility. The potential counterarguments are that reliance on the judgement of other scientists promotes conservative, consensus-driven research rather than projects with truly transformative potential, and disadvantages cross-disciplinary research, because of the difficulty of finding potential referees whose expertise ranges across more than one area.

This view was given an ideological framework by Michael Polanyi, who compared the international scientific enterprise at its best to a “market-place of ideas” in which the best and most profound ideas would naturally prevail. In this view any attempt by non-scientists to steer this “independent republic of science” is likely to be counterproductive and destructive. This point of view is popular with elite scientists.

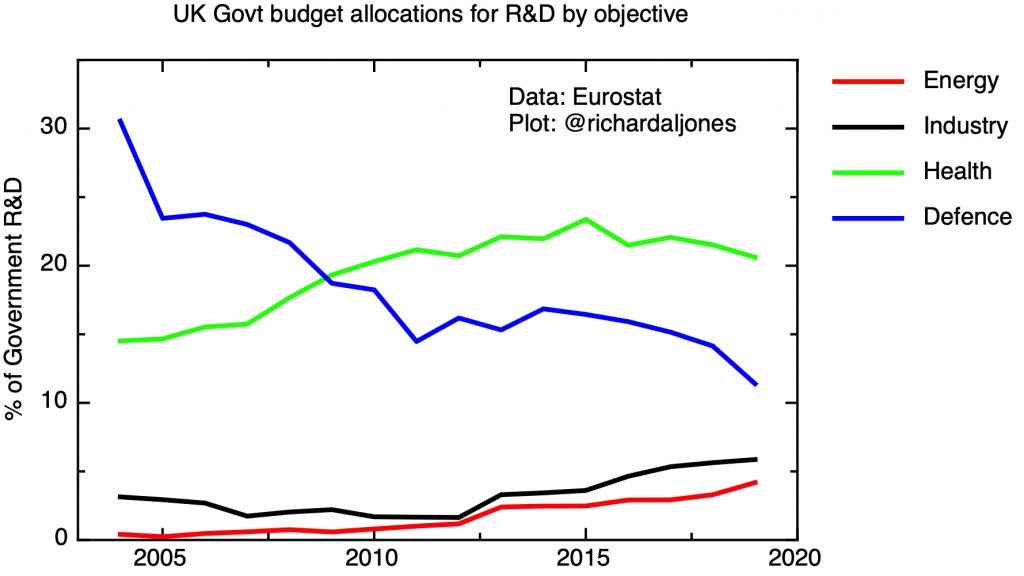

(b) The Government. On the other hand, if the reason the government funds science is that it believes this supports its strategic objectives, then one can argue that the government should direct science in ways that support those objectives. In fact, over the last century and a half, this is exactly what has happened for most government-supported science. The most pressing strategic goal behind which the government has directed science has always been military power; behind that at various times support for agriculture, for colonial activities, and for civil industry has also been prominent.

(c) The people. In a democratic society, the government’s support for science should reflect widely held societal priorities. There’s an argument that representative democracy doesn’t provide a very effective way of translating those societal priorities into decisions on science funding, simply because so many other issues – health, crime, the economy etc – are likely to be much more salient in influencing citizens’ votes. This makes the case for giving more direct forms of deliberative democracy – citizens’ assemblies and such like – a role in setting science priorities.

Often this has been framed in a defensive way, to head off potential public opposition to controversial new technologies. But there is a case for thinking of the direct involvement of citizens in setting priorities in a more positive way, challenging expert group-think and bringing new perspectives to set a direction that commands widespread public support.

(d) The market. According to many economists, if you want to find out what people want, you should look at what they do, not what they say. In this view, the true test of whether people want some innovation is whether they buy it. Following Hayek, one can regard the market as the most efficient way of aggregating information about societal wants and needs. In this view, the government should simply step out of the way, and let private firms explore the space of possible innovations, with the market deciding which are successful and which not.

The difficulty with this view is that many radical innovations need large investments to get to the point at which they can be brought to market, with no certainty either that demand for them will materialise or that they will work in the first place.

There’s a more general point here; what works for applied research, with a clear and relatively short route to commercialisation, is likely to be less useful for more basic research, where any applications are highly uncertain, unpredictable and often don’t manifest themselves for many years after an initial discovery.

4.3. What kind of science policy choices are we talking about?

In thinking about who makes decisions about what kind of science gets done, and who influences those priorities, we should distinguish between some different levels of decision-making.

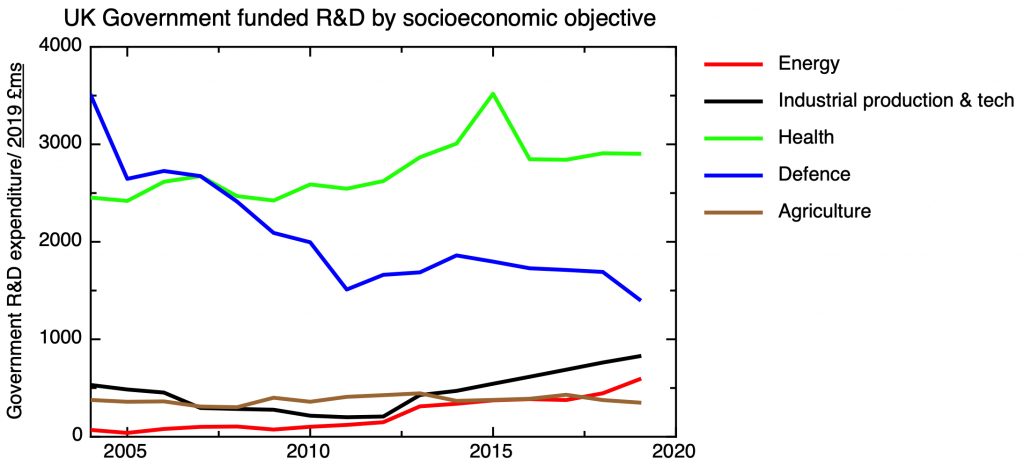

We’ve seen major strategic shifts – for example the shift from applied research to “curiosity driven” research in the late Thatcher government. In effect this involved shifts of many £billions, and was driven from the centre of government, under the influence of a single powerful advisor (George Guise, in that case). The post-cold war shift of emphasis from defence to health and life sciences was on a similar scale, though this is probably more difficult to pin on a single individual or agency.

On a slightly smaller scale, we have long-term strategic programmes, with funding at the level of £100m’s. Examples of this could include the fusion programme, recent initiatives on batteries and quantum technology, and the new funding agency ARIA. Here the initiative usually does come from some part of central government, with Government Chief Scientific Advisors and other influential policy actors (for example, in the recent case of ARIA, Dominic Cummings) often being a driving force.

Programmes at the level of £10m’s have generally been initiated by research councils, though they may form part of the research councils’ pitch to government in the budget negotiations around spending reviews. Priorities at this level may emerge from the scientific community through the various advisory bodies that research councils draw on; there may also be an element of research councils anticipating what they think the government of the day is interested in, whether that is driven by formal government strategy documents or more informal interactions with key actors.

Within these programmes, individual projects are awarded to researchers through peer review.

4.4. The “Haldane principle” and the political independence of science funding agencies

How closely should politicians be able to direct research priorities for government-funded science? The conflict between the long-term nature of science and the short-term imperatives of electoral politics has long been recognised, and makes the case for inserting some distance between science funding agencies and central government. It’s not just in science that this conflict between the long-term interests of the state and short-term electoral politics is recognised; the decision to give the Bank of England the power to set interest rates independently of the Treasury presents an analogy. In the UK the symbol of this distance in science policy is a semi-mythical arrangement known as the “Haldane principle”.

The Haldane principle is interpreted in different, often self-serving, ways by different constituencies. Some scientists interpret it as meaning that the government should have no involvement in any aspect of science policy, apart from signing the cheques. For government ministers, on the other hand, it legitimates their right to make big funding announcements while leaving operational details to others. The historian David Edgerton has stressed (see e.g. “The Haldane Principle and other invented traditions in science policy”) its relatively recent rise to prominence in science policy discourse, and the fact that most government-funded science has never been within its orbit.

The origins of the “Haldane principle” are purported to lie in an important and influential report from Lord Richard Haldane published in 1918. This defined many of the principles by which the modern civil service is run, including principles for both the way state-funded science should be administered and the way scientific evidence should be used in government.

The principle that the government should be involved in science had been established in the late 19th Century, through reports such as that of the Royal Commission on Scientific Instruction in 1870, and the establishment of institutions such as the National Physical Laboratory and the Laboratory of the Government Chemist. The First World War brought new urgency to government driven science, both to meet the technological demands of the new industrial warfare, and to accommodate the medical demands of dealing with its terrible human cost.

This was the context of the Haldane review, which brought attention to the slightly ad-hoc way in which different government departments had ended up supporting scientific research in support of their various goals. The report focused on two new bodies that had arisen to deal with these pressures: the Medical Research Committee and the Department of Scientific and Industrial Research. In each case a pattern had been established – a minister taking responsibility, but with decision making devolved to a committee of experts, taking advice from a wider advisory council. This did set the pattern for something like a modern research council, and indeed the Medical Research Committee morphed into the Medical Research Council, which survived until its incorporation into UKRI in 2018. Other research councils followed, the first being the Agricultural Research Council. But the focus of the Haldane recommendations was on the best way to bring expert advice to bear on the problems of government, rather than any principle of scientific autonomy.

As David Edgerton has stressed, in the postwar period, the research councils were relatively small parts of an overall R&D system dominated by the requirements of the “Warfare State”. One major innovation was the introduction of the Science Research Council in 1965, which first evolved into the Science and Engineering Research Council, and then was broken up following William Waldegrave’s 1993 White Paper. This coincided with the big shift in UK policy I’ve referred to before, where the state substantially withdrew from applied research. The 1993 White Paper did invoke a “Haldane principle”, but reasserted a right for the government to make strategic choices: “Day-to-day decisions on the scientific merits of different strategies, programmes and projects should be taken by the Research Councils, without Government involvement. There is, however, a preceding level of broad priority-setting between general classes of activity where a range of criteria must be brought to bear.”

The government asserted much more direct control over the research system in the 2017 Higher Education and Research Act, which incorporated all seven research councils into a single organisation, UK Research and Innovation (UKRI). The act does give a nod to a “Haldane principle”, which it defines in a rather diluted form: “The ‘Haldane principle’ is the principle that decisions on individual research proposals are best taken following an evaluation of the quality and likely impact of the proposals (such as a peer review process).”

However, the act makes explicit where it thinks power should lie. Section 102 of the Act states, “The Secretary of State may give UKRI directions about the allocation or expenditure by UKRI of grants received…”, and, in case the situation isn’t already clear enough, “UKRI must comply with any directions given under this section.”

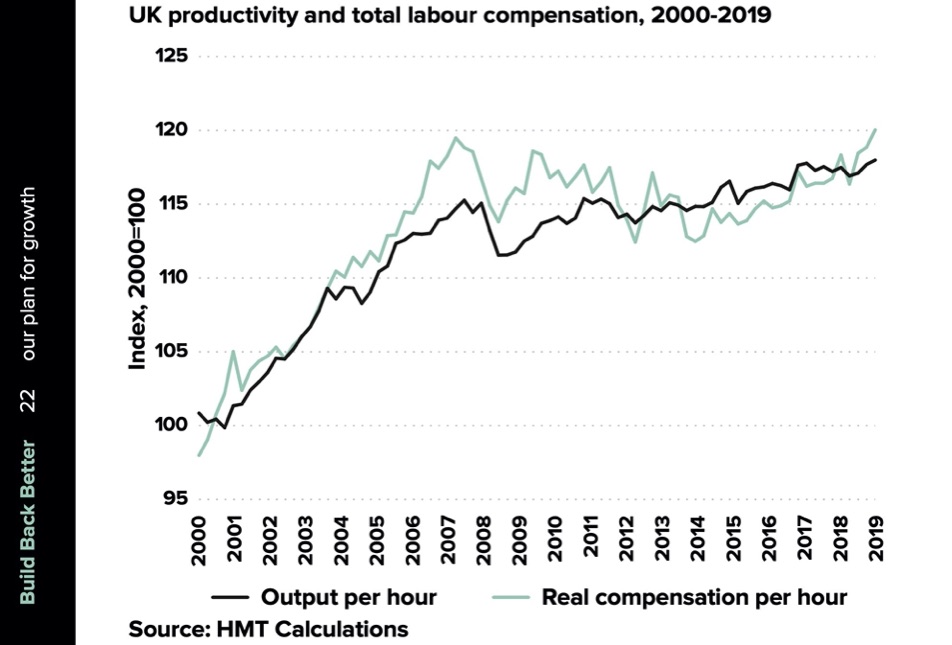

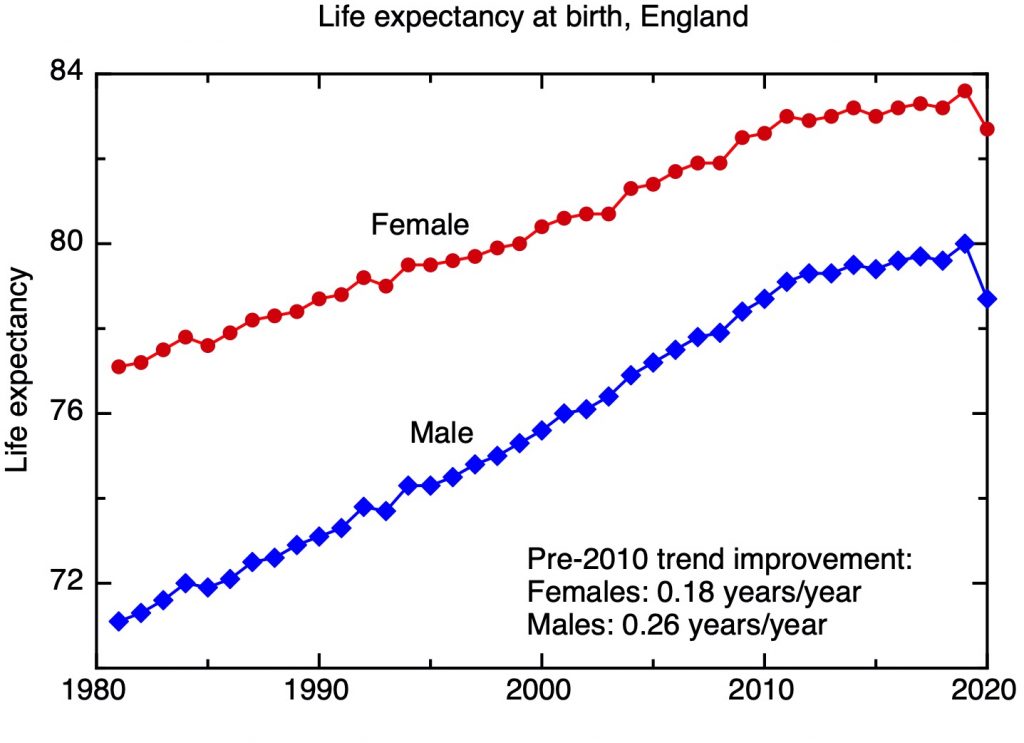

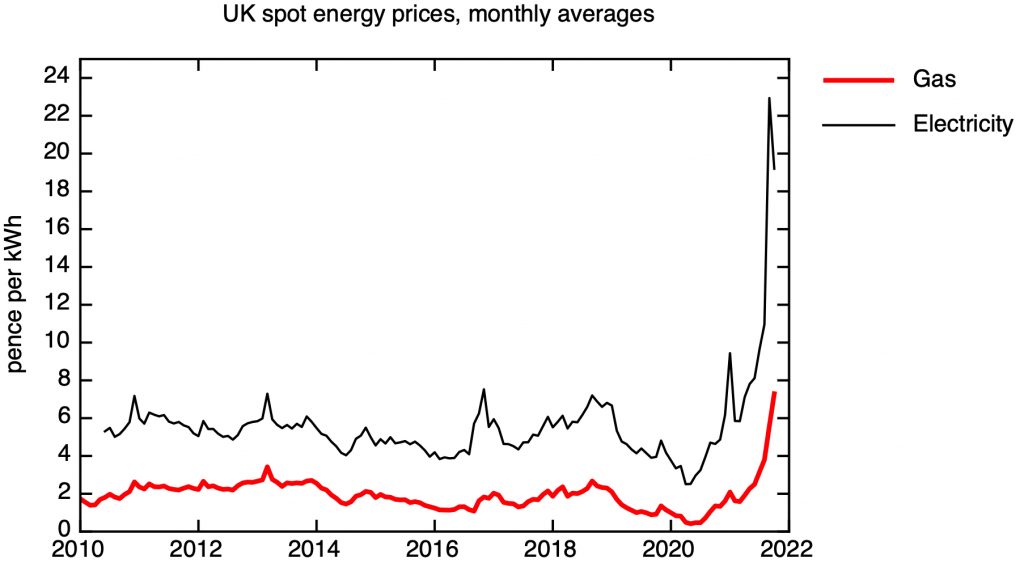

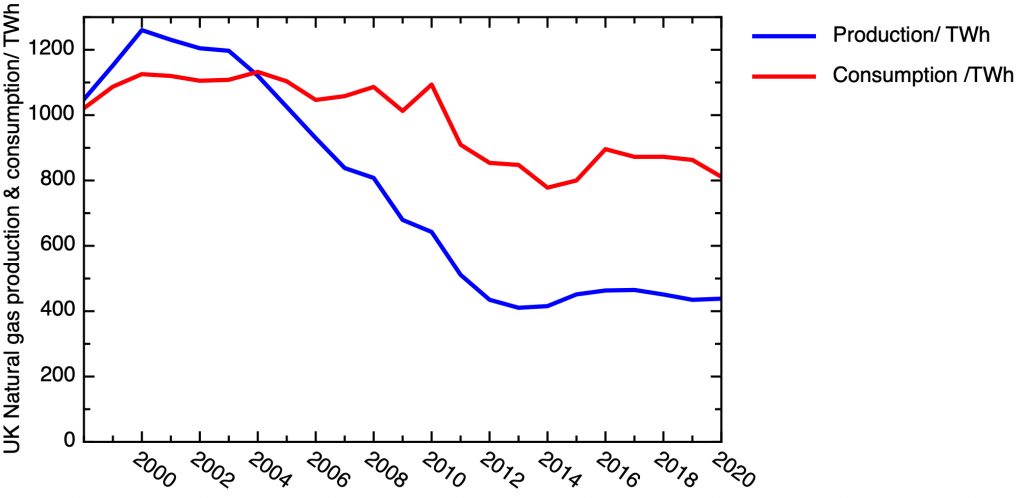

My own view is that the government does have a right – indeed, a duty – to steer the overall science enterprise in support of the strategic goals of the state. I discussed some of those big issues in the first section of this series – the need to return to productivity growth, to manage the energy transition to net zero, to keep the nation secure in a hostile world, to support the health and well-being of its citizens. The government has given itself the power to do this.

The danger, though, is that nobody does the strategy, and that governments instead succumb to the temptation of micromanaging the implementation for short-term political advantage.

To come in the next instalment: the bodies that fund science in the UK – UKRI, the research councils, Horizon Europe (and whatever may replace it). How well do they work, and what challenges do they face?